Introduction

A road network layer is required in many mapping exercises, such as basemap preparation (critical for navigation), humanitarian aid, disaster management, and transportation, and it is a critical component of many other applications.

This sample shows how ArcGIS API for Python can be used to train a deep learning model (Multi-Task Road Extractor model) to extract the road network from satellite imagery. The models trained can be used with ArcGIS Pro or ArcGIS Enterprise and even support distributed processing for quick results.

Further details on the Multi-Task Road Extractor implementation in the API (working principle, architecture, best practices, etc.) can be found in the Guide, along with instructions on how to set up the Python environment.

Before proceeding through this notebook, it is advised to go through the API Reference for Multi-Task Road Extractor (prepare_data(), MultiTaskRoadExtractor()). It will help in understanding the Multi-Task Road Extractor's workflow in detail.

Objectives:

- Classify roads using the API's Multi-Task Road Extractor model.

Area of Interest and data pre-processing

For this sample, we will be using a subset of the publicly available SpaceNet dataset. Vector labels, as 'road centerlines', are available for download along with the imagery, hosted on AWS S3 [1].

The area of interest is Paris, with 425 km of 'road centerline' length (as shown in Figure 1). Both inputs, the imagery and the vector layer, are used to create the image chips and the labels (as 'classified tiles') needed for model training.

Figure 1: SpaceNet dataset - AOI 3 - Paris

The downloaded data has 4 types of imagery: Multispectral, Pan, Pan-sharpened Multispectral, and Pan-sharpened RGB. The Multi-Task Road Extractor model supports 8-bit RGB imagery and has experimental support for 16-bit RGB imagery (multispectral imagery will be supported in a subsequent release). In this sample, Pan-sharpened RGB is used, after converting it to 8-bit imagery.

Pre-processing steps:

- Downloaded vector labels, in .geojson format, are converted to a feature class/shapefile. (Refer to ArcGIS Pro's JSON To Features GP tool.)

- The converted vector data is checked and repaired if any invalid geometry is found. (Refer to ArcGIS Pro's Repair Geometry GP tool.)

- 'Stretch function' is used to convert 16-bit imagery to 8-bit imagery. (Refer to ArcGIS Pro's Stretch raster function.)

- 'Projected coordinate system' is applied to imagery and road vector data, for ease in the interpretation of results and setting the values of tool parameters.

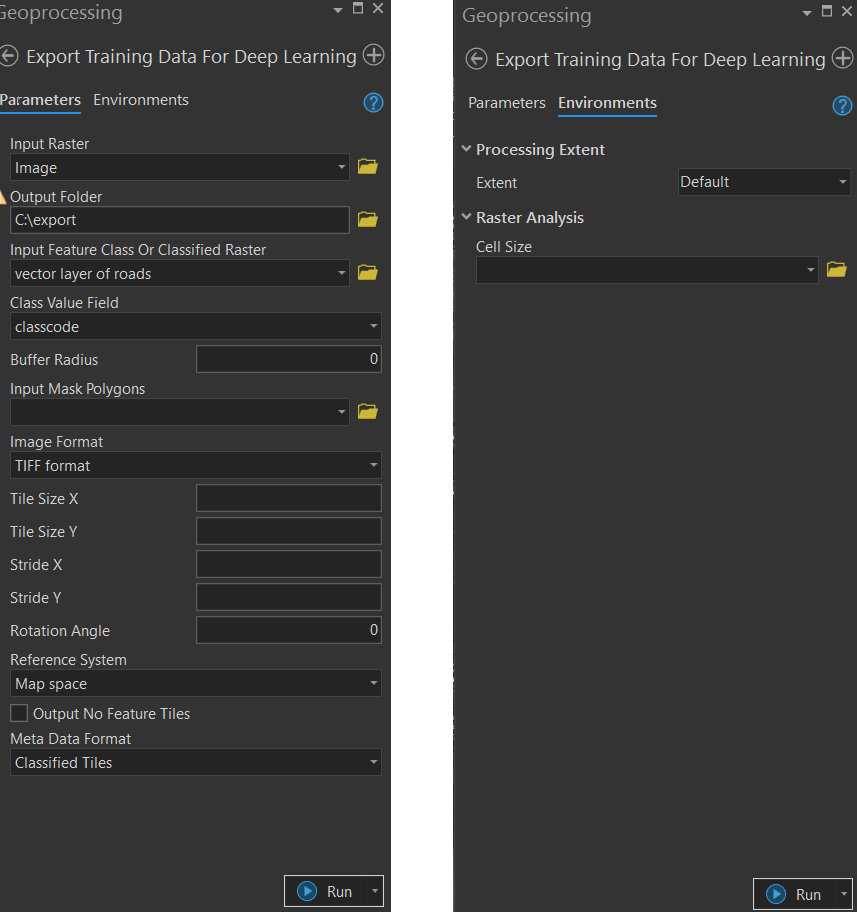

Now the data is ready for the Export Training Data For Deep Learning GP tool (as shown in Figure 2), which exports the data needed for model training. This tool is available in ArcGIS Pro as well as ArcGIS Enterprise.

Here, we exported the data in 'Classified Tiles' format using a Cell Size of '30 cm'. Tile Size X and Tile Size Y are set to '512', while Stride X and Stride Y are set to '128'. If road centerlines are used directly as input, an appropriate buffer size can be set based on the area of interest and the types of roads in that region. Alternatively, ArcGIS Pro's Create Buffers GP tool can be used to convert road centerlines to road polygons, and the buffer value can be decided iteratively by checking the results of that tool.
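To get a feel for these export settings, the ground footprint of a tile and the overlap between neighbouring tiles follow directly from the numbers above. The sketch below is plain arithmetic, not part of the Export Training Data For Deep Learning tool; the function names are hypothetical.

```python
def tile_footprint_m(tile_size_px: int, cell_size_m: float) -> float:
    """Ground length of one tile edge, in metres."""
    return tile_size_px * cell_size_m


def overlap_fraction(tile_size_px: int, stride_px: int) -> float:
    """Fraction of a tile edge shared with the next tile along one axis."""
    return (tile_size_px - stride_px) / tile_size_px


footprint = tile_footprint_m(512, 0.30)   # 512 px at 30 cm per pixel
overlap = overlap_fraction(512, 128)      # stride of 128 px

print(f"Tile edge on the ground: {footprint:.1f} m")
print(f"Overlap between adjacent tiles: {overlap:.0%}")
```

With these values each tile covers about 153.6 m on a side and overlaps its neighbour by 75% along each axis, so a road pixel away from the image edge appears in several chips.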

Figure 2: Export Training Data For Deep Learning GP tool

This tool creates all the files needed in the next step in the Output Folder directory.

Data preparation

Imports:

from arcgis.learn import prepare_data, MultiTaskRoadExtractor

Preparing the exported data:

Some of the frequently used parameters that can be passed in prepare_data() are described below:

path: the path of the folder containing training data. (Output generated by the "Export Training data for deep learning GP tool")

chip_size: Images are cropped to the specified chip_size.

batch_size: Number of images the model trains on in each step inside an epoch. It depends directly on the memory of your graphics card.

val_split_pct: Percentage of training data to keep as validation.

resize_to: Resize the cropped image to the mentioned size.
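As a rough illustration of how val_split_pct and batch_size interact, the sketch below estimates how many chips go to training and validation and how many steps one epoch takes. The chip count of 1,000 is a made-up figure, val_split_pct is assumed to be a fraction between 0 and 1, and the rounding is an assumption; prepare_data() may split the data differently.

```python
import math


def split_and_steps(n_chips: int, val_split_pct: float, batch_size: int):
    """Return (train_chips, val_chips, steps_per_epoch) for a simple split."""
    val_chips = round(n_chips * val_split_pct)   # assumed rounding rule
    train_chips = n_chips - val_chips
    steps_per_epoch = math.ceil(train_chips / batch_size)
    return train_chips, val_chips, steps_per_epoch


# Hypothetical dataset of 1,000 chips, 10% held out, 4 chips per step.
train_chips, val_chips, steps = split_and_steps(1000, 0.1, 4)
print(train_chips, val_chips, steps)
```

A smaller batch_size fits on a graphics card with less memory but increases the number of steps per epoch.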

Note: Data meant for 'Try it Live' is a very small subset of the actual data that was used for this sample notebook, so the training time, accuracy, visualization, etc. will differ from what is depicted below.

import os, zipfile
from pathlib import Path
from arcgis.gis import GIS

gis = GIS('home')

# Fetch the training data item and display it
training_data = gis.content.get('b7bbf2f5f4184960890afeabbdb51a32')
training_data

# Download the zipped training data and extract it next to the archive
filepath = training_data.download(file_name=training_data.name)

with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

# The extracted folder has the same name as the archive, minus the extension
output_path = Path(os.path.splitext(filepath)[0])

data = prepare_data(output_path, chip_size=512, batch_size=4)

data.classes

Visualization of prepared data

show_batch() can be used to show the prepared data, with the input imagery shown and the labels overlaid on it.

alpha is used to control the transparency of the labels.

data.show_batch(alpha=1)

Training the model

First, the Multi-Task Road Extractor model object is created, utilizing the prepared data. Some model-specific advanced parameters can be set at this stage.

All of these parameters are optional, as smart 'default values' are already set, which work well in most cases.

The advanced parameters are described below:

- gaussian_thresh: sets the gaussian threshold, which allows setting the required road width.

- orient_bin_size: sets the bin size for orientation angles.

- orient_theta: sets the width of the orientation mask.

- mtl_model: defines which of two architectures is used to train the Multi-Task Road Extractor. Values are "linknet" and "hourglass".

Backbones work only with the 'linknet' architecture. ('resnet18', 'resnet34', 'resnet50', 'resnet101', 'resnet152' are the supported backbones.)
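The constraint between mtl_model and the backbone can be captured in a small pre-check before constructing the model. This is a hypothetical helper, not part of arcgis.learn; it assumes the backbone is passed through a keyword named backbone and only encodes the rules stated above.

```python
SUPPORTED_ARCHITECTURES = {"linknet", "hourglass"}
SUPPORTED_BACKBONES = {"resnet18", "resnet34", "resnet50", "resnet101", "resnet152"}


def road_extractor_kwargs(mtl_model="hourglass", backbone=None):
    """Validate the architecture/backbone pair and return keyword arguments."""
    if mtl_model not in SUPPORTED_ARCHITECTURES:
        raise ValueError(f"mtl_model must be one of {sorted(SUPPORTED_ARCHITECTURES)}")
    kwargs = {"mtl_model": mtl_model}
    if backbone is not None:
        if mtl_model != "linknet":
            raise ValueError("backbones are only supported with mtl_model='linknet'")
        if backbone not in SUPPORTED_BACKBONES:
            raise ValueError(f"backbone must be one of {sorted(SUPPORTED_BACKBONES)}")
        kwargs["backbone"] = backbone   # assumed keyword name
    return kwargs


print(road_extractor_kwargs("linknet", "resnet34"))
```

The returned dictionary could then be unpacked into the constructor, for example MultiTaskRoadExtractor(data, **kwargs), which catches an invalid combination before any training starts.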

model = MultiTaskRoadExtractor(data, mtl_model="hourglass")

Next, the lr_find() function is used to find the optimal learning rate. The learning rate controls the rate at which existing information is overwritten by newly acquired information throughout the training process. If no value is specified, the optimal learning rate will be extracted from the learning curve during the training process.

model.lr_find()

0.0005754399373371565
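To make the role of the learning rate concrete, here is a minimal sketch of a single gradient-descent update on a one-parameter quadratic loss. It is a textbook illustration, not how lr_find() or fit() are implemented; the loss function and starting value are made up.

```python
def sgd_step(weight: float, gradient: float, learning_rate: float) -> float:
    """One plain gradient-descent update: move against the gradient."""
    return weight - learning_rate * gradient


def loss_gradient(weight: float) -> float:
    """Gradient of the toy loss (weight - 3)^2."""
    return 2.0 * (weight - 3.0)


# Compare a small and a large learning rate from the same starting weight.
for lr in (0.01, 0.5):
    w = 0.0
    w_next = sgd_step(w, loss_gradient(w), lr)
    print(f"learning rate {lr}: weight moves from {w} to {w_next}")
```

A larger learning rate lets each step overwrite more of the current weight, while a smaller one changes it more gradually.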

fit() is used to train the model. A new 'optimum learning rate' can be computed automatically, or the previously computed optimum learning rate can be passed. (Any other user-defined learning rate can also be passed.)

If early_stopping is True, model training stops when the model is no longer improving, regardless of the epochs parameter value specified. An 'epoch' means the dataset is passed forward and backward through the neural network one time.
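One common way to decide that a model is 'no longer improving' is a patience rule on the validation loss. The sketch below is a generic illustration of that idea; the patience and min_delta values and the loss list are made up, and the exact criterion used by fit() is not confirmed here.

```python
def early_stopping_epoch(valid_losses, patience=2, min_delta=0.01):
    """Return the epoch index at which training would stop, or None.

    Training stops once the validation loss has failed to beat the best
    value by more than min_delta for more than `patience` epochs in a row.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(valid_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement > patience:
                return epoch
    return None


# Hypothetical validation losses, one per epoch.
losses = [0.50, 0.40, 0.30, 0.295, 0.31, 0.30, 0.29]
print(early_stopping_epoch(losses))
```

With a rule like this, the epochs argument acts as an upper bound rather than a fixed number of passes over the data.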

miou and dice are the performance metrics, shown after completion of each epoch.

model.fit(50, 0.0005754399373371565, early_stopping=True)

| epoch | train_loss | valid_loss | accuracy | miou | dice | time |
|---|---|---|---|---|---|---|
| 0 | 0.548448 | 0.539585 | 0.955007 | 0.813954 | 0.742421 | 1:36:12 |
| 1 | 0.388270 | 0.398783 | 0.964153 | 0.844706 | 0.781793 | 1:35:36 |
| 2 | 0.266606 | 0.292986 | 0.975121 | 0.891181 | 0.854522 | 1:35:52 |
| 3 | 0.221755 | 0.238013 | 0.981758 | 0.920282 | 0.893703 | 1:35:08 |
| 4 | 0.183346 | 0.205614 | 0.985037 | 0.933706 | 0.909582 | 1:35:14 |
| 5 | 0.157644 | 0.177976 | 0.987664 | 0.945289 | 0.925815 | 1:36:11 |
| 6 | 0.142403 | 0.179048 | 0.986943 | 0.941960 | 0.917824 | 1:36:39 |
| 7 | 0.125207 | 0.175523 | 0.987328 | 0.943237 | 0.917360 | 1:35:14 |
| 8 | 0.117216 | 0.149206 | 0.989932 | 0.954738 | 0.934074 | 1:36:27 |
| 9 | 0.118672 | 0.152716 | 0.988726 | 0.949733 | 0.931475 | 1:36:10 |
| 10 | 0.117524 | 0.141266 | 0.989973 | 0.955045 | 0.939116 | 1:35:23 |
| 11 | 0.102923 | 0.180505 | 0.985566 | 0.934490 | 0.889606 | 1:35:42 |
| 12 | 0.098052 | 0.133017 | 0.990930 | 0.959037 | 0.939222 | 1:36:56 |
| 13 | 0.084868 | 0.128443 | 0.991264 | 0.960469 | 0.938061 | 1:35:47 |
| 14 | 0.085274 | 0.135533 | 0.990642 | 0.957780 | 0.939627 | 1:36:54 |
| 15 | 0.079469 | 0.118889 | 0.992281 | 0.964931 | 0.948084 | 1:37:21 |
| 16 | 0.080457 | 0.117053 | 0.992530 | 0.966209 | 0.949776 | 1:36:09 |
| 17 | 0.079043 | 0.126219 | 0.991405 | 0.960909 | 0.945418 | 1:36:08 |
| 18 | 0.076621 | 0.118567 | 0.992838 | 0.967450 | 0.954610 | 1:36:14 |
| 19 | 0.082820 | 0.131838 | 0.989965 | 0.954687 | 0.933912 | 1:36:32 |
| 20 | 0.072295 | 0.114415 | 0.992959 | 0.968146 | 0.955399 | 1:36:26 |

Epoch 21: early stopping
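For reference, the miou and dice columns measure overlap between predicted and ground-truth masks. The sketch below computes both on a tiny binary mask using the standard definitions; how arcgis.learn averages these over classes and batches is an assumption here, and the masks are made up.

```python
def iou(pred, truth, cls):
    """Intersection over union for one class on flat label lists."""
    inter = sum(p == cls and t == cls for p, t in zip(pred, truth))
    union = sum(p == cls or t == cls for p, t in zip(pred, truth))
    return inter / union if union else 1.0


def mean_iou(pred, truth, classes=(0, 1)):
    """Unweighted mean of the per-class IoU values."""
    return sum(iou(pred, truth, c) for c in classes) / len(classes)


def dice(pred, truth, cls=1):
    """Dice coefficient: 2 * intersection / (size of pred + size of truth)."""
    inter = sum(p == cls and t == cls for p, t in zip(pred, truth))
    total = sum(p == cls for p in pred) + sum(t == cls for t in truth)
    return 2 * inter / total if total else 1.0


# Flattened 2x4 masks: 1 = road, 0 = background (hypothetical values).
truth = [0, 1, 1, 0, 0, 1, 1, 0]
pred = [0, 1, 1, 1, 0, 0, 1, 0]

print(f"mIoU: {mean_iou(pred, truth):.3f}")
print(f"Dice (road): {dice(pred, truth):.3f}")
```

Both metrics range from 0 to 1. Plain pixel accuracy can look high simply because most pixels are background, which is why overlap-based metrics are reported alongside it.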

Visualization of results

show_results() is used to visualize the model's predictions alongside the ground truth for the same scenes.

Validation data is used for this.

- The 1st column is the 'ground truth image' overlaid with its corresponding 'ground truth labels'.

- The 2nd column is the 'ground truth image' overlaid with its corresponding 'predicted labels'.

model.show_results(rows=4)

Saving the trained model

The last step related to training is saving the model using save(). Apart from the model files, performance metrics, a graph of validation and training losses, sample results, etc. are also saved.

model.save('road_model_for_spacenet_data')

WindowsPath('models/road_model_for_spacenet_data')

Inference using the trained model, in ArcGIS Pro

The model saved in the previous step can be used to extract a classified raster using the Classify Pixels Using Deep Learning tool (as shown in Figure 3).

Further, the classified raster can be converted into a vector road layer in ArcGIS Pro. The regularisation-related GP tools can be used to remove unwanted artifacts in the output. As the model was trained with a Cell Size of '30 cm', the Cell Size is kept at '30 cm' at this step too.

Figure 3: Classify Pixels Using Deep Learning tool

Conclusion

This notebook has summarized the end-to-end workflow for training a deep learning model for road classification. This type of model can predict roads occluded by small and medium-length shadows; however, when roads have larger occlusions from clouds or shadows, it is unable to create connected road networks.

References

- The SpaceNet dataset used in this sample notebook is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License.