- 🔬 Data Science

- 🥠 Deep Learning and Instance Segmentation

Building footprints are often used for base map preparation, humanitarian aid, disaster management, and transportation planning.

There are several ways of generating building footprints. These include manual digitization, in which drawing tools are used to trace the outline of each building. However, this is a labor-intensive and time-consuming process.

This sample shows how ArcGIS API for Python can be used to train a deep learning model to extract building footprints using satellite images. The trained model can be deployed on ArcGIS Pro or ArcGIS Enterprise to extract building footprints.

This workflow has three steps:

- Export Training Data

- Train a Model

- Deploy Model and Extract Footprints

Export training data for deep learning

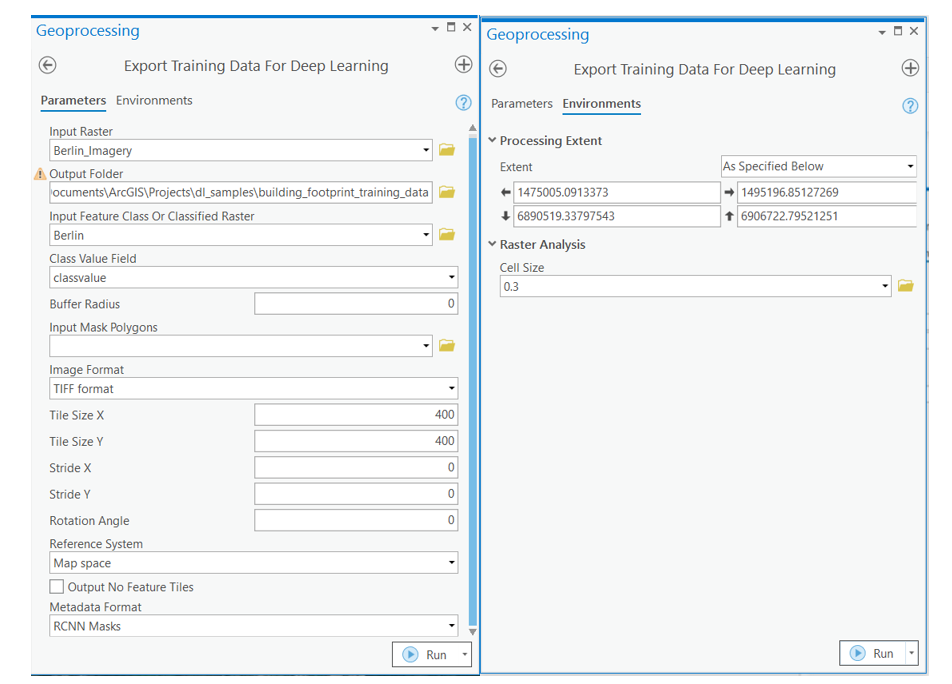

Training data can be exported using the Export Training Data For Deep Learning tool available in ArcGIS Pro as well as ArcGIS Enterprise. For this example we prepared training data in 'RCNN Masks' format using a chip_size of 400px and cell_size of 30cm in ArcGIS Pro.
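As a quick sanity check on these export settings, the ground area covered by one chip follows directly from the chip size and the cell size. The sketch below is plain arithmetic and not part of the ArcGIS tooling; the function name `chip_ground_extent` is hypothetical, and it assumes square cells of 30 cm (0.3 m).

```python
def chip_ground_extent(chip_size_px: int, cell_size_m: float) -> float:
    """Return the side length, in metres, of the ground area covered by one square chip."""
    return chip_size_px * cell_size_m


# Settings taken from the export described above: 400 px chips, 30 cm cells.
side_m = chip_ground_extent(400, 0.30)
area_m2 = side_m ** 2
print(f"Each chip covers about {side_m:.0f} m x {side_m:.0f} m ({area_m2:.0f} m^2)")
```

A chip of this size is large enough to contain several complete buildings, which matters for instance segmentation because each mask should ideally cover a whole footprint.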

from arcgis.gis import GIS

gis = GIS('home')

portal = GIS('https://pythonapi.playground.esri.com/portal')

The items below are high resolution satellite imagery and a feature layer of building footprints for Berlin which will be used for exporting training data.

berlin_imagery = portal.content.get('e971098d744342058e71f813948bfc00')

berlin_imagery

rcnn_labelled_data = gis.content.get('7d3f633a325f4dcf962c82284098ce9d')

rcnn_labelled_data

You can use the Export Training Data for Deep Learning tool to export training samples for training the model. For this sample, choose RCNN Masks as the export format.

arcpy.ia.ExportTrainingDataForDeepLearning("Berlin_Imagery", r"D:\data\maskrcnn_training_data_maskrcnn_400px_30cm", "berlin_building_footprints", "TIFF", 400, 400, 0, 0, "ONLY_TILES_WITH_FEATURES", "RCNN_Masks", 0, "classvalue", 0, None, 0, "MAP_SPACE", "PROCESS_AS_MOSAICKED_IMAGE", "NO_BLACKEN", "FIXED_SIZE")

This will create all the files needed for the next step in the specified 'Output Folder'. These files serve as our training data.

Model training

This step is done in a Jupyter notebook. Documentation on installing and setting up the environment is available here.

Necessary Imports

import os

from pathlib import Path

from arcgis.learn import MaskRCNN, prepare_data

from arcgis.gis import GIS

The prepare_data function takes the path to the training data and creates a fastai databunch with the specified transformation, batch size, split percentage, etc.
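To make the batch size and split percentage concrete, here is a minimal sketch of how a set of chips might be divided into training and validation subsets and then grouped into batches. It is an illustration in plain Python, not the internals of `prepare_data`; the helper `split_chips`, the 10% validation share, and the chip file names are all assumptions made for this example.

```python
import math
import random


def split_chips(chips, val_pct=0.1, seed=42):
    """Shuffle the chips and hold out a fraction for validation (hypothetical helper)."""
    shuffled = list(chips)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_pct)
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical chip names standing in for exported image chips.
chips = [f"chip_{i:04d}.tif" for i in range(200)]
train, val = split_chips(chips, val_pct=0.1)

# With a batch size of 4, each epoch iterates over this many training batches.
batch_size = 4
batches_per_epoch = math.ceil(len(train) / batch_size)
print(len(train), len(val), batches_per_epoch)
```

A smaller batch size lowers GPU memory use at the cost of more batches per epoch, which is often the deciding factor for 400 px chips.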

training_data = gis.content.get('637825446a3641c2b602ee854776ed47')

training_datafilepath = training_data.download(file_name=training_data.name)import zipfile

with zipfile.ZipFile(filepath, 'r') as zip_ref:

zip_ref.extractall(Path(filepath).parent)data_path = Path(os.path.join(os.path.splitext(filepath)[0]))data = prepare_data(data_path,

batch_size=4,

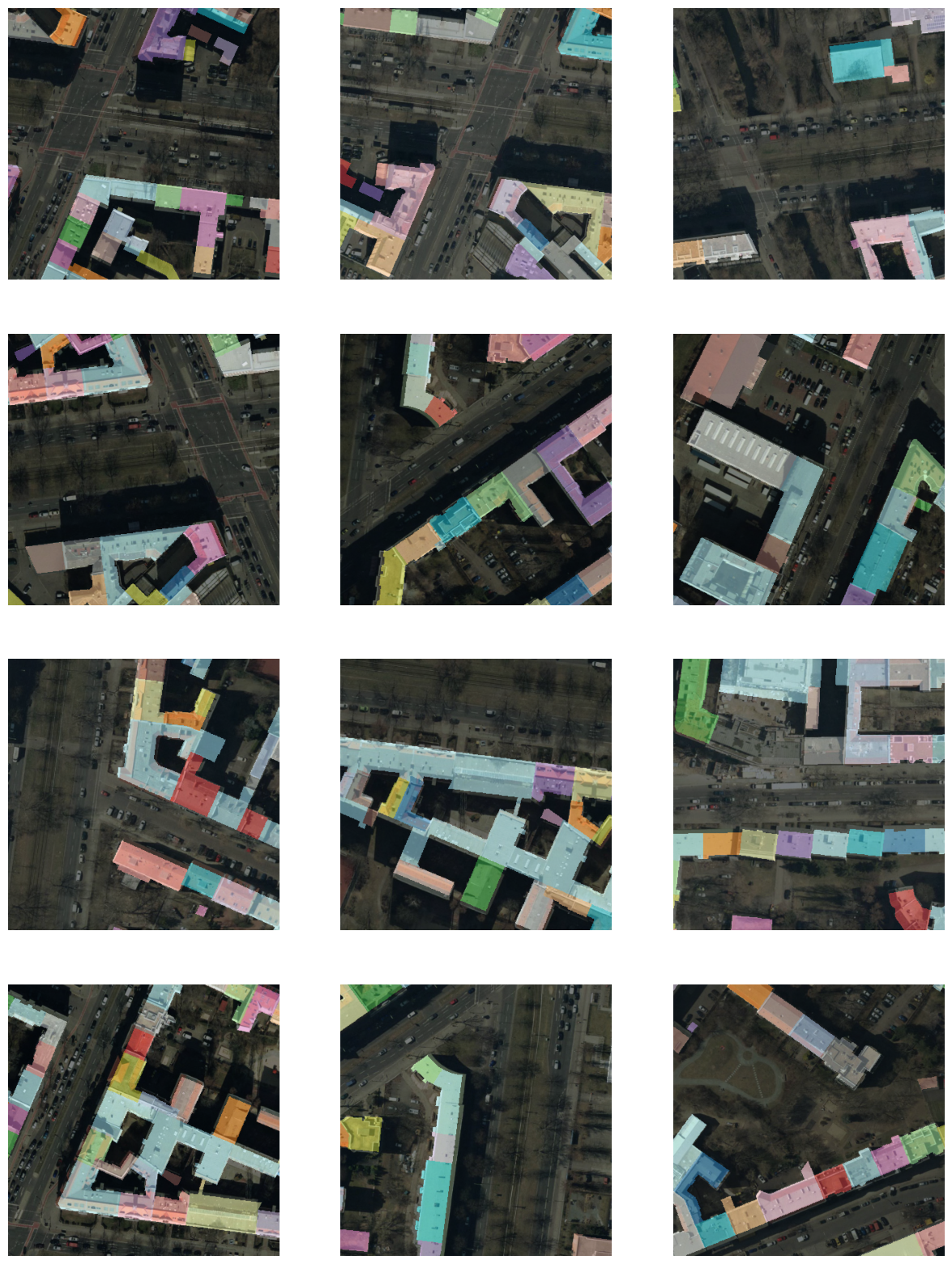

chip_size=400)Visualize training data

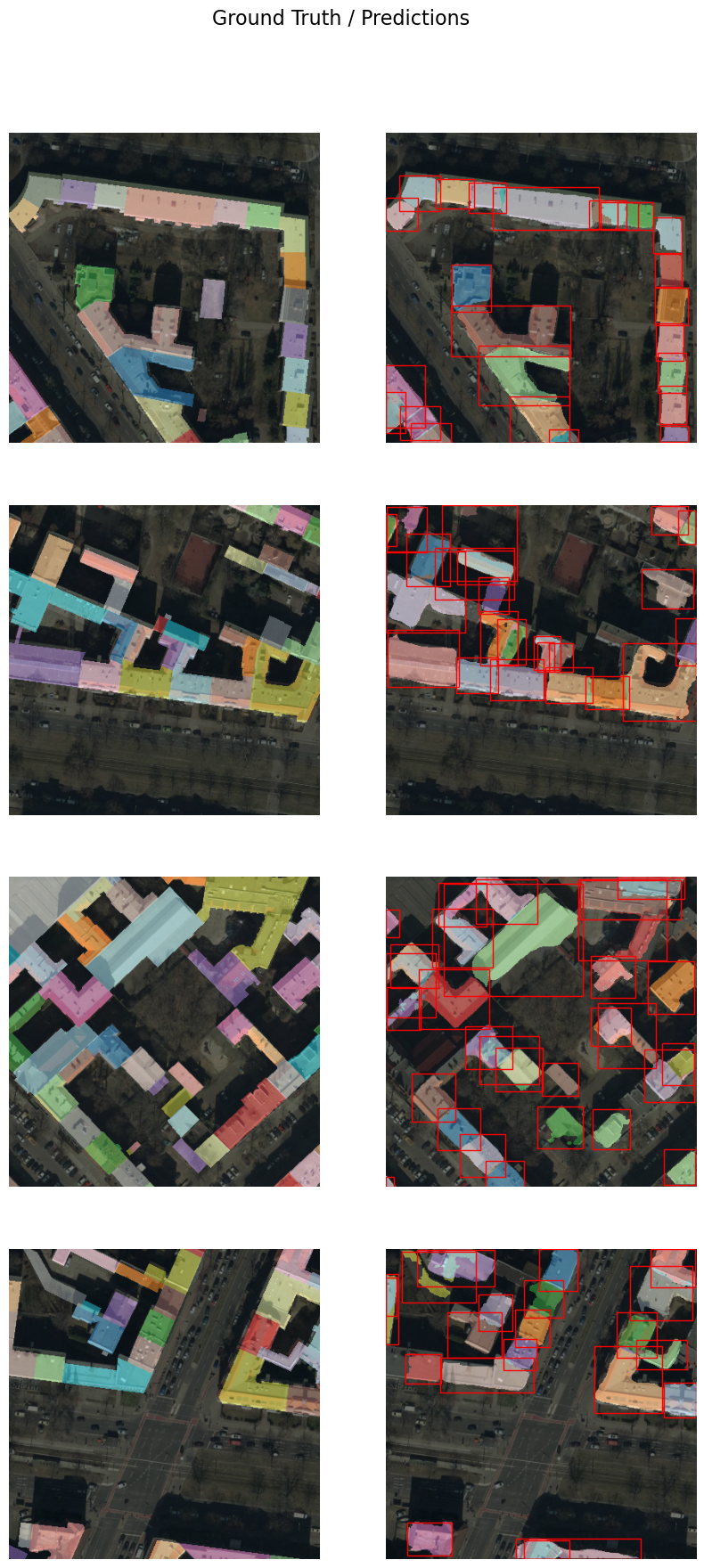

To get a sense of what the training data looks like, use the show_batch() method to randomly pick a few training chips and visualize them. The chips are overlaid with masks representing the building footprints in each image chip.

data.show_batch(rows=4)

Load model architecture

arcgis.learn provides the MaskRCNN model for instance segmentation tasks. It is built on a pretrained convnet, such as ResNet, that acts as the 'backbone'. More details about MaskRCNN can be found here.

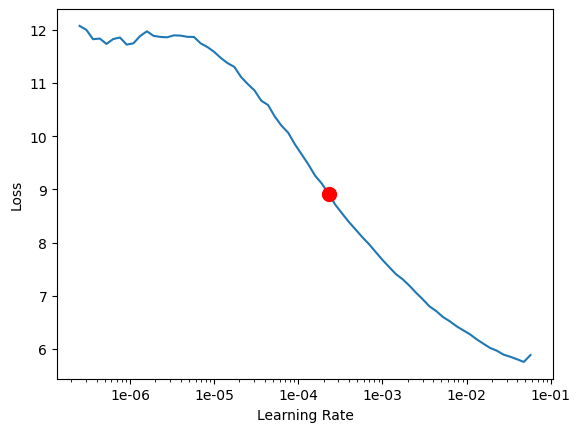

model = MaskRCNN(data)

Learning rate is one of the most important hyperparameters in model training. We will use the lr_find() method to find an optimum learning rate at which we can train a robust model.

lr = model.lr_find()

lr

0.00022908676527677726
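For intuition about what a learning rate finder does, the sketch below runs a toy learning rate range test on a one-parameter quadratic: the rate is raised exponentially at every step, the loss is recorded, and the rate that produced the steepest drop in loss is suggested. This is a generic illustration of the idea, assuming plain gradient descent on f(w) = (w - 3)^2; the source does not state which heuristic `lr_find()` uses, and the names `lr_range_test` and `suggest_lr` are hypothetical.

```python
def lr_range_test(start_lr=1e-4, end_lr=10.0, steps=100):
    """Run a toy learning rate range test on f(w) = (w - 3)^2."""
    w = 0.0
    ratio = (end_lr / start_lr) ** (1 / (steps - 1))
    lrs, losses = [], []
    lr = start_lr
    for _ in range(steps):
        loss = (w - 3.0) ** 2
        lrs.append(lr)
        losses.append(loss)
        if loss > 4 * min(losses):  # stop once the loss has clearly grown
            break
        grad = 2.0 * (w - 3.0)
        w -= lr * grad  # one gradient descent step at the current rate
        lr *= ratio     # raise the rate exponentially for the next step
    return lrs, losses


def suggest_lr(lrs, losses):
    """Return the rate whose step produced the largest drop in loss."""
    drops = [losses[i + 1] - losses[i] for i in range(len(losses) - 1)]
    steepest = min(range(len(drops)), key=drops.__getitem__)
    return lrs[steepest]


lrs, losses = lr_range_test()
suggested = suggest_lr(lrs, losses)
print(f"Suggested learning rate for the toy problem: {suggested:.4f}")
```

On this quadratic, any rate above 1.0 makes the loss grow, so the suggested rate always lies below that point. Real finders work on noisy mini-batch losses and usually smooth the curve before picking a value.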

Train the model

We are using the suggested learning rate above to train the model for 10 epochs.

model.fit(10, lr=lr)

| epoch | train_loss | valid_loss | average_precision | time |
|---|---|---|---|---|
| 0 | 2.454551 | 2.558831 | 0.343144 | 05:27 |
| 1 | 2.293052 | 2.523995 | 0.411870 | 05:26 |
| 2 | 2.210047 | 2.396595 | 0.496738 | 05:26 |
| 3 | 2.092025 | 2.199128 | 0.545330 | 05:27 |
| 4 | 1.965939 | 2.174633 | 0.589573 | 05:27 |
| 5 | 1.905704 | 2.078115 | 0.624616 | 05:29 |
| 6 | 1.860759 | 1.990601 | 0.639107 | 05:28 |
| 7 | 1.831560 | 1.940256 | 0.665038 | 05:26 |
| 8 | 1.733887 | 1.936229 | 0.678185 | 05:26 |
| 9 | 1.714532 | 1.923090 | 0.678643 | 05:28 |

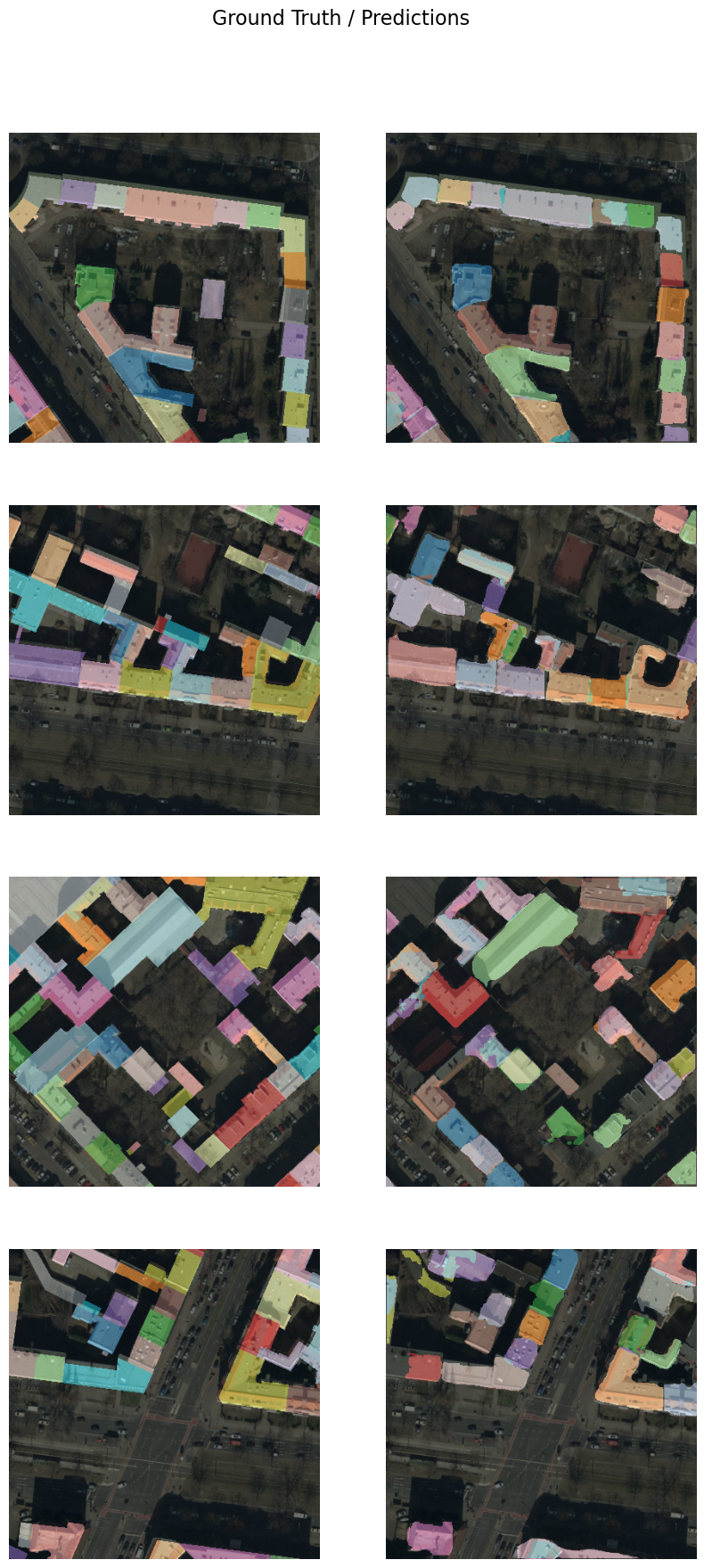

Visualize detected building footprints

The model.show_results() method can be used to display the detected building footprints. Each detection is visualized as a mask by default.

model.show_results()

We can set the mode parameter to bbox_mask for visualizing both mask and bounding boxes.

model.show_results(mode='bbox_mask')
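The relationship between a mask and its bounding box can be shown with a small sketch: the box is simply the tightest rectangle that encloses all pixels of the mask. The code below is a plain-Python illustration on a tiny hand-made mask, not how `show_results()` is implemented; `mask_to_bbox` is a hypothetical helper.

```python
def mask_to_bbox(mask):
    """Return (row_min, col_min, row_max, col_max) of the nonzero pixels, or None if empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]


# A tiny binary mask standing in for one detected building.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
bbox = mask_to_bbox(mask)
print(bbox)
```

The box discards the shape information that the mask carries, which is why masks are the more useful output for footprint extraction.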

Save model

As we can see, after 10 epochs the model already produces reasonable results. Further improvements can be achieved through more sophisticated hyperparameter tuning. Let's save the model so that it can be used for inference or for further training later. By default, it will be saved into the data_path that you specified in the prepare_data call at the beginning of this notebook.

model.save('Building_footprint_10epochs', publish=True)

Model inference

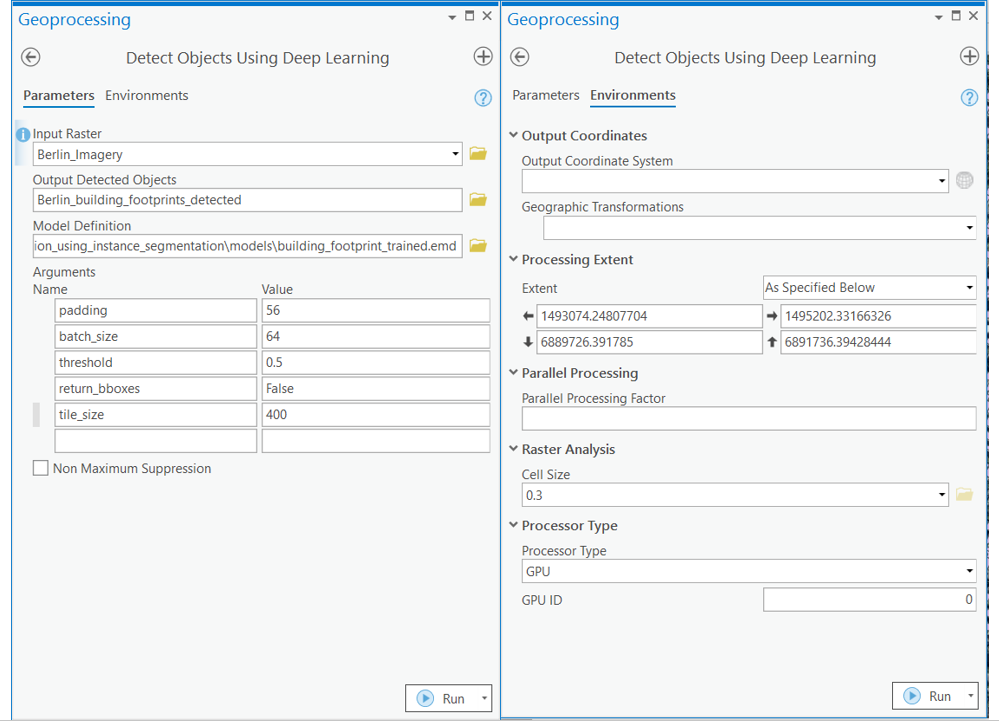

The saved model can be used to extract building footprint masks using the 'Detect Objects Using Deep Learning' tool available in ArcGIS Pro or ArcGIS Enterprise. For this sample we will use high-resolution satellite imagery to detect footprints.

arcpy.ia.DetectObjectsUsingDeepLearning("Berlin_Imagery", r"\\ArcGIS\Projects\maskrcnn_inferencing\maskrcnn_inferencing.gdb\maskrcnn_detections", r"\\models\building_footprint_10epochs\building_footprint_10epochs.emd", "padding 100;batch_size 4;threshold 0.9;return_bboxes False", "NO_NMS", "Confidence", "Class", 0, "PROCESS_AS_MOSAICKED_IMAGE")
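The model arguments in the call above are passed as a single string of `name value` pairs separated by semicolons. The sketch below parses that string and applies the threshold to a few detections, assuming that `threshold` is a minimum confidence score. The parser `parse_model_arguments` and the detection scores are hypothetical and exist only for illustration; the tool handles this internally.

```python
def parse_model_arguments(arguments: str) -> dict:
    """Split 'name value;name value' pairs into a dictionary of strings."""
    parsed = {}
    for pair in arguments.split(";"):
        pair = pair.strip()
        if not pair:
            continue
        name, value = pair.split(" ", 1)
        parsed[name] = value.strip()
    return parsed


args = parse_model_arguments("padding 100;batch_size 4;threshold 0.9;return_bboxes False")
threshold = float(args["threshold"])

# Hypothetical detections with made-up confidence scores, for illustration only.
detections = [("building_a", 0.97), ("building_b", 0.62), ("building_c", 0.91)]
kept = [name for name, confidence in detections if confidence >= threshold]
print(args)
print(kept)
```

Under that assumption, a threshold of 0.9 favors precision: fewer false footprints, at the risk of missing buildings the model is less sure about.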

The output of the model is a layer of detected building footprints. However, the detected building footprints need to be post-processed using the Regularize Building Footprints tool. This tool normalizes the footprint of building polygons by eliminating undesirable artifacts in their geometry. The post-processed building footprints are shown below:

A subset of detected building footprints

To view the above results in a webmap click here.

This sample showcases how instance segmentation models like MaskRCNN can be used to automatically extract building footprints in areas where buildings are touching each other. This approach overcomes the limitations of semantic segmentation models, which work well only when buildings are separated from each other by some distance.
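The difference can be illustrated with a toy grid. In a semantic mask every building pixel has the same value, so two buildings that share a wall merge into one connected region, while instance labels keep them apart. The sketch below is a self-contained illustration with a hand-made label grid; the helper `count_components` and the grid values are assumptions for this example and are unrelated to the trained model.

```python
from collections import deque


def count_components(mask):
    """Count 4-connected groups of nonzero pixels in a binary mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    count = 0
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Hypothetical instance labels: buildings 1 and 2 share a wall, building 3 stands apart.
instances = [
    [1, 1, 2, 2, 0, 0],
    [1, 1, 2, 2, 0, 3],
    [0, 0, 0, 0, 0, 3],
]
semantic = [[1 if value else 0 for value in row] for row in instances]

n_semantic = count_components(semantic)
n_instances = len({value for row in instances for value in row if value})
print(n_semantic, n_instances)
```

The semantic mask yields fewer regions than there are buildings because the touching pair is counted once, whereas the instance labels recover each building separately.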