Accelerating AI with GPUs

In this guide, we walk you through how arcgis.learn models with a PyTorch backend support training across multiple GPUs.

You can verify how many GPUs are available to PyTorch by running the following commands:

```python
import torch

# Number of CUDA devices PyTorch can see
print('Available devices:', torch.cuda.device_count())
```

PyTorch provides capabilities to utilize multiple GPUs in two ways:

- Data Parallelism

- Model Parallelism

Depending on the model, arcgis.learn uses one of these two approaches to train on multiple GPUs.

Each approach has its own use case, and both let you add multi-GPU training by wrapping your existing model code rather than rewriting it.

Data Parallelism: Data parallelism splits a mini-batch of samples into several smaller mini-batches and runs the computation for each of them in parallel across multiple GPUs.
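Why is splitting a mini-batch safe? The following plain-Python sketch (no GPU or deep learning library needed) shows the arithmetic: for equally sized chunks, averaging the gradients computed on each chunk gives the same result as computing the gradient on the whole mini-batch. The one-parameter linear model, the MSE loss, the sample numbers, and the helper names `mse_grad`, `split_batch`, and `data_parallel_grad` are all hypothetical, chosen only for illustration.

```python
# Plain-Python sketch: a hypothetical one-parameter linear model y = w * x
# trained with mean squared error (MSE). No GPU or framework is involved.

def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w over one mini-batch."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def split_batch(seq, n_devices):
    """Split a mini-batch into n_devices equal, contiguous chunks (batch dimension)."""
    if len(seq) % n_devices:
        raise ValueError("batch size must be divisible by the number of devices")
    size = len(seq) // n_devices
    return [seq[i * size:(i + 1) * size] for i in range(n_devices)]


def data_parallel_grad(w, xs, ys, n_devices):
    """Each simulated device computes the gradient on its own chunk;
    the per-device gradients are then averaged."""
    grads = [
        mse_grad(w, x_chunk, y_chunk)
        for x_chunk, y_chunk in zip(split_batch(xs, n_devices), split_batch(ys, n_devices))
    ]
    return sum(grads) / n_devices


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.1, 5.9, 8.2]
w = 0.5

full_batch = mse_grad(w, xs, ys)
two_devices = data_parallel_grad(w, xs, ys, n_devices=2)
print(full_batch, two_devices)  # equal up to floating-point rounding
```

The equality only holds when every chunk has the same size; with uneven chunks, a plain average of per-chunk means would weight the samples unequally.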

In PyTorch, data parallelism is implemented by torch.nn.DataParallel. You can wrap a Module in DataParallel, and it will be parallelized over multiple GPUs in the batch dimension. For more details, see the PyTorch documentation for torch.nn.DataParallel.
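For supported models, arcgis.learn provides this support itself, so you do not need to write the wrapper. For illustration only, here is a minimal sketch of wrapping a module with torch.nn.DataParallel in plain PyTorch. The small `nn.Sequential` model, its layer sizes, and the random input batch are placeholders, not arcgis.learn code. On a machine with fewer than two GPUs the wrap is skipped and the model runs unchanged.

```python
import torch
import torch.nn as nn

# Placeholder model; any nn.Module can be wrapped the same way.
model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 2))

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
if torch.cuda.device_count() > 1:
    # Replicate the module on every visible GPU; each forward call scatters
    # the input along dim 0 (the batch dimension) and gathers the outputs.
    model = nn.DataParallel(model)
model = model.to(device)

batch = torch.randn(64, 16, device=device)  # one mini-batch of 64 samples
output = model(batch)
print(output.shape)  # torch.Size([64, 2])
```

The output has the same shape with or without the wrapper, because DataParallel gathers the per-GPU results back into one tensor on the first device.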

Several arcgis.learn models already support data parallelism to speed up training. This makes it easy to use multiple GPUs when training a model on a single machine. The following models have DataParallel support:

- FeatureClassifier
- MaskRCNN
- MultiTaskRoadExtractor
- ConnectNet
- PointCNN
- SingleShotDetector
- UnetClassifier

To restrict training to a subset of GPUs, set the CUDA_VISIBLE_DEVICES environment variable in the first cell of your notebook, before torch or arcgis.learn is imported:

```python
import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0,1'  # use only the first 2 GPUs
```
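If you switch GPU subsets often, a small helper can build the variable from a list of indices and warn when it is probably too late to take effect. This is a sketch; `use_gpus` is a hypothetical name, not part of arcgis.learn or PyTorch, and the `"torch" in sys.modules` check is only a heuristic for "CUDA may already be initialized".

```python
import os
import sys
import warnings


def use_gpus(gpu_ids):
    """Hypothetical helper: expose only the given physical GPUs to this process.

    CUDA reads CUDA_VISIBLE_DEVICES when it initializes, so call this before
    importing torch or arcgis.learn; changing it afterwards may have no effect.
    """
    if "torch" in sys.modules:
        warnings.warn("torch is already imported; CUDA_VISIBLE_DEVICES may be ignored")
    value = ",".join(str(int(gpu_id)) for gpu_id in gpu_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


# Same effect as os.environ['CUDA_VISIBLE_DEVICES'] = '0,1'
use_gpus([0, 1])
```

Note that visible GPUs are renumbered from zero: with `use_gpus([2, 3])`, physical GPU 2 appears to PyTorch as `cuda:0`, and `torch.cuda.device_count()` returns 2.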

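Model parallelism: Model parallelism splits a model between multiple devices or nodes (such as GPU-equipped instances) to create an efficient training pipeline that maximizes GPU utilization. In arcgis.learn, this second approach is implemented using torch.nn.parallel.DistributedDataParallel (DDP). Note that DDP replicates the whole model on every device, so strictly speaking it is a multi-process form of data parallelism.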

DistributedDataParallel parallelizes the application of the given module by splitting the input across the specified devices, chunking it in the batch dimension. The module is replicated on each machine and each device, and each replica handles a portion of the input. During the backward pass, gradients from each node are averaged. To read more, see the PyTorch DDP tutorial listed in the references below.
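To make the steps above concrete, here is a minimal single-file sketch of what a DDP training script does: join a process group, wrap a full model replica in DistributedDataParallel, and let `backward()` average gradients across processes. It is a generic PyTorch illustration, not the contents of the arcgis.learn training script; the placeholder model, random data, and port 29500 are assumptions. It reads `RANK`, `WORLD_SIZE`, and `LOCAL_RANK` from environment variables, which torchrun and recent versions of torch.distributed.launch set for each process (older launchers pass the local rank as a `--local_rank` argument instead). Run directly, it falls back to a single process on CPU with the Gloo backend.

```python
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def main():
    # Launchers such as torch.distributed.launch set these for every process.
    # The defaults are placeholders that let the sketch run standalone as a
    # single process (world size 1).
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")
    rank = int(os.environ.get("RANK", 0))
    world_size = int(os.environ.get("WORLD_SIZE", 1))
    local_rank = int(os.environ.get("LOCAL_RANK", 0))

    use_cuda = torch.cuda.is_available()
    # NCCL is the usual backend for GPUs; fall back to Gloo (CPU, or no NCCL).
    backend = "nccl" if use_cuda and dist.is_nccl_available() else "gloo"
    dist.init_process_group(backend, rank=rank, world_size=world_size)

    device = torch.device(f"cuda:{local_rank}" if use_cuda else "cpu")
    if use_cuda:
        torch.cuda.set_device(device)

    # Every process holds a full replica of the (placeholder) model.
    model = nn.Linear(16, 2).to(device)
    ddp_model = DDP(model, device_ids=[local_rank] if use_cuda else None)
    optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.01)

    # Each process trains on its own slice of the data; a real script would use
    # torch.utils.data.DistributedSampler. Random tensors stand in here.
    inputs = torch.randn(8, 16, device=device)
    targets = torch.randn(8, 2, device=device)

    optimizer.zero_grad()
    loss = nn.functional.mse_loss(ddp_model(inputs), targets)
    loss.backward()  # DDP averages gradients across all processes here
    optimizer.step()

    dist.destroy_process_group()
    return loss.item()


# Each process started by the launcher runs this file top to bottom.
loss_value = main()
print(f"rank {os.environ.get('RANK', 0)} finished, loss={loss_value:.4f}")
```

Because every process ends `backward()` with the same averaged gradients, the replicas stay identical after each `optimizer.step()` without any explicit synchronization code.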

Currently, the following models available in arcgis.learn support DistributedDataParallel:

- UnetClassifier
- DeepLab
- PSPNetClassifier
- MaskRCNN
- FasterRCNN
- HEDEdgeDetector
- BDCNEdgeDetector
- ModelExtension

The following code downloads a sample training script and training data that you can use to test multi-GPU support, provided your machine has multiple GPUs.

```python
import os
import zipfile
from pathlib import Path

from arcgis.gis import GIS

# Connect anonymously to ArcGIS Online and fetch the sample script item
gis = GIS()
script_item = gis.content.get('afd1c9a88a6f4f04896b4172c0f3a78c')
print(script_item.name)  # train_model.zip

# Download the zip archive and extract it next to the downloaded file
filepath = script_item.download(file_name=script_item.name)
with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

# Path to the extracted training script
script_path = Path(os.path.splitext(filepath)[0] + '.py')
print(script_path)  # e.g. C:\Users\Admin\AppData\Local\Temp\train_model.py
```

To train on multiple GPUs, use the script downloaded above or write your own for your training data, then run the following command in a command prompt:

```
python -m torch.distributed.launch --nproc_per_node=2 train_model.py
```

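Here, --nproc_per_node is the number of processes to launch on each machine. Set it to the number of GPUs you want to use on that machine, since each process typically drives one GPU.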
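torch.distributed.launch starts `--nproc_per_node` copies of your script on each machine and gives each copy a local rank from 0 to `nproc_per_node - 1`, which a script usually uses as its GPU index. If you write your own training script, it needs to read that value. The sketch below is a hypothetical helper (`get_local_rank` is not from arcgis.learn or from the downloaded train_model.py); it accepts the `--local_rank` argument, the `--local-rank` spelling used by newer PyTorch releases, or the `LOCAL_RANK` environment variable, defaulting to 0.

```python
import argparse
import os


def get_local_rank(argv=None):
    """Return this process's local rank (its GPU index on this machine).

    torch.distributed.launch passes --local_rank (spelled --local-rank in
    newer PyTorch releases) to each process it starts, and newer launchers
    may also export LOCAL_RANK; accept any of these, defaulting to 0.
    """
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--local_rank", "--local-rank",
        type=int,
        default=int(os.environ.get("LOCAL_RANK", 0)),
    )
    # Ignore any other arguments the training script may define.
    args, _unknown = parser.parse_known_args(argv)
    return args.local_rank


local_rank = get_local_rank()
# A real training script would now pin itself to that GPU, for example:
# torch.cuda.set_device(local_rank)
print("local rank:", local_rank)
```

Using `parse_known_args` rather than `parse_args` keeps the helper from rejecting the script's own options, such as a hypothetical `--epochs`.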

For the full set of launcher arguments used for DistributedDataParallel (DDP) training, refer to the torch.distributed.launch source listed in the references.

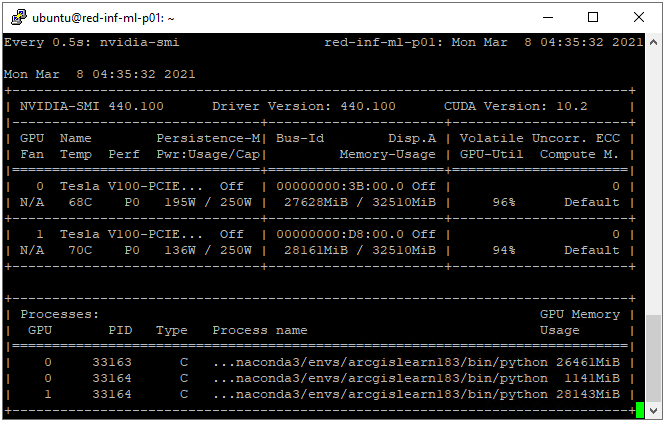

To verify that your GPUs are utilized for training, run nvidia-smi as shown below:
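If you prefer to check utilization from Python, for example from a second notebook while training runs, nvidia-smi's query mode prints machine-readable CSV. The sketch below wraps it; `parse_gpu_stats` and `query_gpus` are hypothetical helper names, while the `--query-gpu` fields and `--format=csv,noheader,nounits` option are standard nvidia-smi features. Fields a GPU does not report (shown as `[N/A]`) become `None`.

```python
import subprocess

# Standard nvidia-smi --query-gpu fields; adjust to taste.
QUERY_FIELDS = "index,name,utilization.gpu,memory.used,memory.total"


def _to_int(value):
    """Convert a numeric field, returning None for values like '[N/A]'."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_gpu_stats(csv_text):
    """Parse output of `nvidia-smi --query-gpu=... --format=csv,noheader,nounits`."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, name, util, mem_used, mem_total = (f.strip() for f in line.split(","))
        stats.append({
            "index": int(index),
            "name": name,
            "utilization_pct": _to_int(util),
            "memory_used_mib": _to_int(mem_used),
            "memory_total_mib": _to_int(mem_total),
        })
    return stats


def query_gpus():
    """Run nvidia-smi once (NVIDIA driver required) and return per-GPU stats."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_stats(result.stdout)
```

While training is running, each GPU taking part should report non-zero utilization and memory use in `query_gpus()`. From a command prompt, `nvidia-smi -l 1` gives a similar view that refreshes every second.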

References:

- https://www.esri.com/arcgis-blog/products/arcgis-pro/imagery/deep-learning-with-arcgis-pro-tips-tricks/

- https://pytorch.org/tutorials/intermediate/ddp_tutorial.html

- https://fastai1.fast.ai/distributed.html

- https://github.com/pytorch/pytorch/blob/master/torch/distributed/launch.py

- https://towardsdatascience.com/how-to-scale-training-on-multiple-gpus-dae1041f49d2