ST_Generalize takes a linestring or polygon geometry column and a tolerance, and returns a geometry column. It generalizes the input linestring or polygon geometry using the Douglas-Peucker algorithm with the specified tolerance, so the result contains only a subset of the input geometry's vertices. Point and multipoint geometry types are not supported as input.
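To make the vertex-selection step concrete, here is a minimal pure-Python sketch of the textbook Douglas-Peucker algorithm. It is not the GeoAnalytics Engine implementation. The helper names (`douglas_peucker`, `perpendicular_distance`, `circle_ring`, `ring_area`) are hypothetical, and the sketch assumes that a 10-unit buffer is a ring of 64 evenly spaced vertices starting at angle 0, which this page does not document. Under that assumption, generalizing the three buffers from the examples with tolerances 0.5, 1.0, and 5.0 keeps 16, 8, and 4 vertices, and the rounded areas match those in the Scala example output.

```python
import math
from typing import List, Tuple

Coord = Tuple[float, float]


def perpendicular_distance(p: Coord, a: Coord, b: Coord) -> float:
    """Distance from p to the line through a and b (or to a itself if a == b)."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        # Closed ring: the first and last vertex coincide
        return math.hypot(p[0] - a[0], p[1] - a[1])
    return abs(dx * (p[1] - a[1]) - dy * (p[0] - a[0])) / length


def douglas_peucker(points: List[Coord], tolerance: float) -> List[Coord]:
    """Keep the endpoints; recursively keep the farthest vertex while it exceeds tolerance."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    index, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], a, b)
        if d > dmax:
            index, dmax = i, d
    if dmax > tolerance:
        left = douglas_peucker(points[: index + 1], tolerance)
        right = douglas_peucker(points[index:], tolerance)
        return left[:-1] + right  # the split vertex appears in both halves; keep it once
    return [a, b]


def circle_ring(cx: float, cy: float, r: float, n: int = 64) -> List[Coord]:
    """Hypothetical stand-in for a buffer: closed ring of n evenly spaced vertices."""
    ring = [(cx + r * math.cos(2 * math.pi * k / n), cy + r * math.sin(2 * math.pi * k / n))
            for k in range(n)]
    return ring + [ring[0]]


def ring_area(ring: List[Coord]) -> float:
    """Shoelace formula for a closed ring (first vertex repeated at the end)."""
    s = sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(ring, ring[1:]))
    return abs(s) / 2


# Same centers, buffer radius, and tolerances as the examples on this page
for cx, tol in [(-25, 0.5), (0, 1.0), (25, 5.0)]:
    generalized = douglas_peucker(circle_ring(cx, 0, 10), tol)
    print(tol, len(generalized) - 1, round(ring_area(generalized), 3))
```

Each printed line shows the tolerance, the number of distinct vertices kept, and the rounded area; a larger tolerance lets the recursion stop earlier, so fewer vertices survive.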

The tolerance can be specified with or without a unit. When specified with a unit, the tolerance can be defined using ST_CreateDistance or with a tuple containing a number and a unit string (e.g., (10, "kilometers")). When specified without a unit, the tolerance can be a single value or a numeric column, and it is assumed to be in the same units as the input geometry.

| Function | Syntax |
|---|---|
| Python | generalize(geometry, tolerance) |
| SQL | ST_Generalize(geometry, tolerance) |
| Scala | generalize(geometry, tolerance) |

For more details, go to the GeoAnalytics Engine API reference for generalize.

Python and SQL Examples

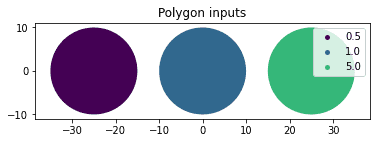

```python
from geoanalytics.sql import functions as ST, Point

# Each row holds a point and the tolerance used to generalize its buffer
data = [
    (Point(-25, 0), 0.5),
    (Point(0, 0), 1.0),
    (Point(25, 0), 5.0)
]

df = spark.createDataFrame(data, ["point", "tolerance"])

# Buffer each point by 10 units to create the polygon inputs
df_buffer = df.withColumn("buffer", ST.buffer("point", 10))

axes = df_buffer.st.plot(geometry="buffer", cmap_values="tolerance", is_categorical=True,
                         legend=True, aspect="equal")
axes.set_title("Polygon inputs")
```

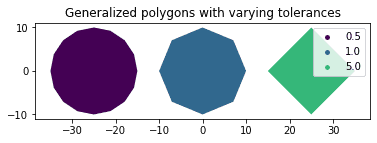

```python
# Generalize each buffer using the tolerance from the same row
result = df_buffer.select(ST.generalize("buffer", "tolerance"), "tolerance")

axes = result.st.plot(cmap_values="tolerance", is_categorical=True, legend=True, aspect="equal")
axes.set_title("Generalized polygons with varying tolerances");
```
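Why a larger tolerance removes more vertices can be worked out by hand under a simplifying assumption: that each 10-unit buffer is a ring of evenly spaced vertices on a circle of radius $r = 10$, with a vertex at angle 0 and a vertex count divisible by 16 (this page does not document how buffers are densified). After the first split at the vertex opposite the start (distance $2r = 20$), Douglas-Peucker keeps splitting an arc of angle $\theta$ while the farthest vertex from its chord, the sagitta $s(\theta)$, exceeds the tolerance. A ring that ends up as $n$ equal arcs is a regular $n$-gon with area $A_n$:

$$
s(\theta) = r\left(1 - \cos\frac{\theta}{2}\right), \qquad A_n = \frac{n}{2}\, r^2 \sin\frac{2\pi}{n}
$$

$$
s(\pi) = 10, \quad s\!\left(\tfrac{\pi}{2}\right) \approx 2.929, \quad s\!\left(\tfrac{\pi}{4}\right) \approx 0.761, \quad s\!\left(\tfrac{\pi}{8}\right) \approx 0.192
$$

With tolerance 5.0, splitting stops at quarter arcs ($n = 4$, $A_4 = 200$); with 1.0 it stops at eighth arcs ($n = 8$, $A_8 \approx 282.843$); with 0.5 it stops at sixteenth arcs ($n = 16$, $A_{16} \approx 306.147$). These values agree with the areas in the Scala example output, which is consistent with this model of the buffers but does not prove it.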

Scala examples

```scala
import com.esri.geoanalytics.geometry._
import com.esri.geoanalytics.sql.{functions => ST}
import org.apache.spark.sql.{functions => F}
import spark.implicits._ // enables the $"column" syntax

// Each row holds a point and the tolerance used to generalize its buffer
case class PointRow(point: Point, tolerance: Double)

val data = Seq(PointRow(Point(-25, 0), 0.5),
               PointRow(Point(0, 0), 1.0),
               PointRow(Point(25, 0), 5.0))

val df = spark.createDataFrame(data)
  .withColumn("buffer", ST.buffer($"point", 10)) // 10-unit polygon buffers
  .select(ST.generalize($"buffer", $"tolerance").alias("generalize")) // per-row tolerance
  .withColumn("generalized_polygon_area", F.round(ST.area($"generalize"), 3)) // area to 3 decimals

df.show()
```

Result:

```
+--------------------+------------------------+
|          generalize|generalized_polygon_area|
+--------------------+------------------------+
|{"rings":[[[-15,0...|                 306.147|
|{"rings":[[[10,0]...|                 282.843|
|{"rings":[[[35,0]...|                   200.0|
+--------------------+------------------------+
```

Version table

| Release | Notes |
|---|---|
| 1.0.0 | Python and SQL functions introduced |
| 1.5.0 | Scala function introduced |
| 1.6.0 | tolerance parameter accepts values specified with units |