Introduction

Automatic animal identification can improve biology missions that require identifying species and counting individuals, such as animal monitoring and management, examining biodiversity, and population estimation.

This notebook will showcase a workflow to classify animal species in camera trap images. The notebook has two main sections:

- Training a deep learning model that can classify animal species

- Using the trained model to classify animal species against each camera location

We have used a subset of a community-licensed open-source dataset of camera trap images, provided through the LILA BC (Labeled Information Library of Alexandria: Biology and Conservation) repository, to train our deep learning model; the dataset is described in the "Get the data for analysis" section. For inferencing, we have taken 5 fictional camera locations in Kruger National Park, South Africa, and attached some images to each of those points. This feature layer simulates a scenario in which multiple cameras at different locations have captured images that need to be classified by animal species. The workflow that enables this is explained in the "Model inference" section.

Note: This notebook is supported in ArcGIS Enterprise 10.9 and later.

Necessary imports

import os

import json

import zipfile

import pandas as pd

from pathlib import Path

from fastai.vision import ImageDataBunch, get_transforms, imagenet_stats

from arcgis.gis import GIS

from arcgis.learn import prepare_data, FeatureClassifier, classify_objects, Model

Connect to your GIS

# connect to web GIS

gis = GIS("Your_enterprise_profile") # ArcGIS Enterprise 10.9 or later

Get the data for analysis

In this notebook, we have used the "WCS Camera Traps" dataset, made publicly available by the Wildlife Conservation Society under the LILA BC repository. This dataset contains approximately 1.4M camera trap images representing different species from 12 countries, making it one of the most diverse camera trap data sets publicly available. The dataset can be further explored and downloaded from this link. This data set is released under the Community Data License Agreement (permissive variant).

Data selection

This dataset has 1.4M images covering 675 different species, of which we have chosen 11 species whose conservation status is "Endangered", "Near Threatened", or "Vulnerable". These species include Jaguars, African elephants, Lions, Thomson's gazelles, East African oryxes, Gerenuks, Asian elephants, Tigers, Ocellated turkeys, and Great curassows.

The json file (wcs_camera_traps.json) that comes with the data contains metadata we require.
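The notebook's code reads two lists from that file, `images` and `annotations`, and joins them on the image id. As a rough, dependency-free sketch of that join and of the selection that follows it: the toy records, the function name `select_rows`, and the species id 999 below are invented for illustration, and only the key names (`id`, `image_id`, `file_name`, `category_id`) come from the notebook's code.

```python
import random

# Toy stand-in for wcs_camera_traps.json; these records are invented.
metadata = {
    "images": [
        {"id": "a", "file_name": "animals/0001/0001.jpg"},
        {"id": "b", "file_name": "animals/0001/0002.jpg"},
        {"id": "c", "file_name": "animals/0002/0001.jpg"},
        {"id": "d", "file_name": "animals/0002/0002.jpg"},
        {"id": "e", "file_name": "animals/0003/0001.jpg"},
    ],
    "annotations": [
        {"image_id": "a", "category_id": 0},    # 0 = no animal in the image
        {"image_id": "b", "category_id": 0},
        {"image_id": "c", "category_id": 7},
        {"image_id": "d", "category_id": 999},  # hypothetical species we do not retain
        {"image_id": "e", "category_id": 374},
    ],
}
retained_ids = {7, 374}  # a subset of the notebook's species ids


def select_rows(metadata, retained_ids, empty_frac=0.5, seed=42):
    """Join images to annotations, then keep retained species plus a sample of empty images."""
    file_by_id = {img["id"]: img["file_name"] for img in metadata["images"]}
    rows = [
        (file_by_id[ann["image_id"]], ann["category_id"])
        for ann in metadata["annotations"]
        if ann["image_id"] in file_by_id
    ]
    empty = [row for row in rows if row[1] == 0]
    species = [row for row in rows if row[1] in retained_ids]
    # Keep only a fraction of the empty images to reduce class imbalance.
    rng = random.Random(seed)
    kept_empty = rng.sample(empty, int(len(empty) * empty_frac))
    return kept_empty + species


rows = select_rows(metadata, retained_ids)
for file_name, category_id in rows:
    print(file_name, category_id)
```

This is a simplification: it iterates over annotations, whereas the notebook performs a left merge starting from the images table.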

The cell below converts the downloaded data into the format required for training: a dataframe of file names and category ids.

# # list of corrupted filenames in the downloaded data
# corrupted_files = ['animals/0402/0905.jpg', 'animals/0407/1026.jpg', 'animals/0464/0880.jpg',
#                    'animals/0464/0881.jpg', 'animals/0464/0882.jpg', 'animals/0464/0884.jpg',
#                    'animals/0464/0888.jpg', 'animals/0464/0889.jpg', 'animals/0645/0531.jpg',
#                    'animals/0645/0532.jpg', 'animals/0656/0208.jpg', 'animals/0009/0215.jpg']

# # list with the ids of the 11 selected species
# retained_specie_list = [7,24,90,100,119,127,128,149,154,372,374]

# with open('path_to: wcs_camera_traps.json') as f:
#     metadata = json.load(f)

# # load the annotations and images into dataframes
# annotation_df = pd.DataFrame(metadata['annotations'])
# images_df = pd.DataFrame(metadata['images'])
# img_ann_df = pd.merge(images_df,
#                       annotation_df,
#                       left_on='id',
#                       right_on='image_id',
#                       how='left').drop('image_id', axis=1)

# train_df = img_ann_df[['file_name','category_id']] # select the required columns from the merged dataframe
# train_df = train_df[~train_df['file_name'].isin(corrupted_files)] # remove corrupted files from the dataframe
# train_df = train_df[~train_df['file_name'].str.contains("avi")] # drop entries whose file name contains "avi"

# # A 'category_id' of 0 indicates an image that does not contain an animal.
# # To reduce the class imbalance, we will only retain
# # ~50% of the empty images in our training dataset.
# new_train_df = train_df[train_df['category_id']==0].sample(frac=0.5,
#                                                            random_state=42)
# new_train_df = pd.concat([new_train_df,
#                           train_df[train_df['category_id'].isin(retained_specie_list)]])

Alternatively, we have provided a subset of training data containing a few samples from the 11 species mentioned above.

agol_gis = GIS("home")

training_data = agol_gis.content.get('677f0d853c85430784169ce7a4a54037')
training_data

filepath = training_data.download(file_name=training_data.name)

with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

output_path = Path(os.path.join(os.path.splitext(filepath)[0]))

new_train_df = pd.read_csv(str(output_path)+'/retained_data_subset.csv')

new_train_df

| | file_name | category_id |
|---|---|---|
| 0 | animals/0015/0738.jpg | 0 |
| 1 | animals/0057/1444.jpg | 0 |
| 2 | animals/0407/0329.jpg | 0 |
| 3 | animals/0321/1855.jpg | 0 |
| 4 | animals/0224/1005.jpg | 0 |
| ... | ... | ... |
| 1796 | animals/0389/1309.jpg | 374 |
| 1797 | animals/0389/1310.jpg | 374 |
| 1798 | animals/0389/1311.jpg | 374 |
| 1799 | animals/0389/1312.jpg | 374 |
| 1800 | animals/0389/1313.jpg | 374 |

1801 rows × 2 columns

Prepare data

Here, we are not using the prepare_data() function provided by arcgis.learn to prepare the data for analysis. Instead, we will use the ImageDataBunch.from_df method provided by fast.ai to read the necessary information from a dataframe and convert it into a 'DataBunch' object, which will then be used for training. We will use the standard set of transforms for data augmentation and use 20% of our training dataset as a validation dataset. It is important to note that we are also normalizing our inputs.
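To make the normalization step concrete: normalizing with ImageNet statistics subtracts a per-channel mean and divides by a per-channel standard deviation. The following is a minimal sketch on a single pixel, not fast.ai's implementation; the helper names are hypothetical, and the constants are the standard ImageNet RGB statistics that fast.ai exposes as `imagenet_stats`.

```python
# Standard ImageNet per-channel statistics (RGB), for inputs scaled to [0, 1].
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb):
    """Normalize one RGB pixel whose channels are already scaled to [0, 1]."""
    return tuple(
        (value - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


def denormalize_pixel(rgb):
    """Invert normalize_pixel, e.g. before displaying an image."""
    return tuple(
        value * std + mean
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


mean_pixel = (0.485, 0.456, 0.406)   # a pixel equal to the ImageNet mean
print(normalize_pixel(mean_pixel))   # every channel maps to 0.0
print(normalize_pixel((1.0, 1.0, 1.0)))  # a white pixel, for comparison
```

Because the pretrained backbone was trained on inputs normalized this way, applying the same statistics keeps our inputs in the range the network expects.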

Path_df = Path(output_path) # path to the downloaded data

data = ImageDataBunch.from_df(path=Path_df,
                              folder='',
                              df=new_train_df,
                              fn_col=0,
                              label_col=1,
                              valid_pct=0.2,
                              seed=42,
                              bs=16,
                              size=224,
                              num_workers=2).normalize(imagenet_stats)

Visualize a few samples from your training data

When working with large sets of JPEG images, some files may be truncated, meaning that only part of the image data is present. If such files are in the dataset, they can break the training process. To ensure that the training flow does not break, we set PIL's ImageFile.LOAD_TRUNCATED_IMAGES flag to True, so that PIL loads whatever portion of the image is available instead of raising an error.

from PIL import ImageFile

ImageFile.LOAD_TRUNCATED_IMAGES = True

data.show_batch(5)
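If you would rather know which files are affected than load them silently, a simple heuristic is to check the JPEG markers: a JPEG stream starts with the bytes FF D8 and normally ends with FF D9. The sketch below is not part of the original workflow; `looks_truncated` is a hypothetical helper, the demo files contain fake bytes rather than real images, and a valid file that carries trailing bytes after the end marker would be flagged incorrectly.

```python
import os
import tempfile
from pathlib import Path

JPEG_SOI = b"\xff\xd8"  # start-of-image marker
JPEG_EOI = b"\xff\xd9"  # end-of-image marker


def looks_truncated(path):
    """Return True if the file starts like a JPEG but does not end with the EOI marker."""
    data = Path(path).read_bytes()
    return data.startswith(JPEG_SOI) and not data.endswith(JPEG_EOI)


# Demo on two fake files written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    complete = os.path.join(tmp, "complete.jpg")
    cut_off = os.path.join(tmp, "cut_off.jpg")
    Path(complete).write_bytes(JPEG_SOI + b"fake image data" + JPEG_EOI)
    Path(cut_off).write_bytes(JPEG_SOI + b"fake image da")
    results = {
        "complete.jpg": looks_truncated(complete),
        "cut_off.jpg": looks_truncated(cut_off),
    }

print(results)
```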

Train the model

Load model architecture

The arcgis.learn module provides the FeatureClassifier model for determining the class of each feature. For more in-depth information about its workings and usage, see this link. For our training, we will use a model with the 34-layer 'ResNet' architecture.

model = FeatureClassifier(data)

Find an optimal learning rate

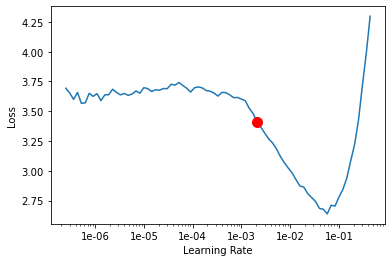

Learning rate is one of the most important hyperparameters in model training. Here, we explore a range of learning rates to guide our choice. arcgis.learn leverages fast.ai's learning rate finder for this: the lr_find() method suggests a learning rate that lets the model train quickly while training remains stable.

lr = model.lr_find()

lr

0.0020892961308540407

Based on the learning rate plot above, we can see that the learning rate suggested by lr_find() for our training data is 0.002. We can now use this learning rate to train our model. However, in the latest release of arcgis.learn, we can now train models without even specifying a learning rate. This new functionality will use the learning rate finder to determine and implement an optimal learning rate.
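For intuition, a learning rate finder typically trains for a few iterations while increasing the learning rate geometrically, records the loss at each step, and then picks a rate from the region where the loss falls fastest. The sketch below illustrates that idea only; it is not how arcgis.learn or fast.ai select the value. The function names are hypothetical, the default range (1e-7 to 10 over 100 steps) is an assumption, and the loss curve is invented.

```python
import math


def lr_schedule(start_lr=1e-7, end_lr=10.0, num_it=100):
    """Geometrically spaced learning rates from start_lr to end_lr."""
    ratio = end_lr / start_lr
    return [start_lr * ratio ** (i / (num_it - 1)) for i in range(num_it)]


def steepest_descent_lr(lrs, losses):
    """Pick the learning rate at which the loss falls fastest per unit of log10(lr)."""
    best_index, best_slope = None, 0.0
    for i in range(1, len(lrs)):
        step = math.log10(lrs[i]) - math.log10(lrs[i - 1])
        slope = (losses[i] - losses[i - 1]) / step
        if slope < best_slope:
            best_index, best_slope = i, slope
    return lrs[best_index] if best_index is not None else None


# Nine learning rates, one per decade from 1e-7 to 10.
lrs = lr_schedule(num_it=9)
# Invented loss curve: flat, then falling, then diverging at high rates.
losses = [2.0, 2.0, 1.9, 1.6, 1.0, 0.8, 0.9, 2.5, 6.0]

suggested = steepest_descent_lr(lrs, losses)
print(f"suggested learning rate: {suggested:.0e}")
```

In this toy curve the steepest drop occurs between 1e-4 and 1e-3, so the sketch suggests a rate of about 1e-3.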

Fit the model

To train the model, we use the fit() method. To start, we will use 5 epochs to train our model. The number of epochs specified will define how many times the model is exposed to the entire training set.

Note: The results displayed below are obtained after training on the complete dataset belonging to the 11 species specified above. However, the dummy training data provided may not produce similar results.

model.fit(5, lr=lr)

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 1.585832 | 0.393099 | 0.869444 | 03:12 |
| 1 | 0.687256 | 0.292758 | 0.911111 | 03:06 |
| 2 | 0.380574 | 0.211873 | 0.938889 | 03:04 |
| 3 | 0.201335 | 0.199844 | 0.947222 | 03:10 |
| 4 | 0.162608 | 0.182794 | 0.947222 | 03:02 |

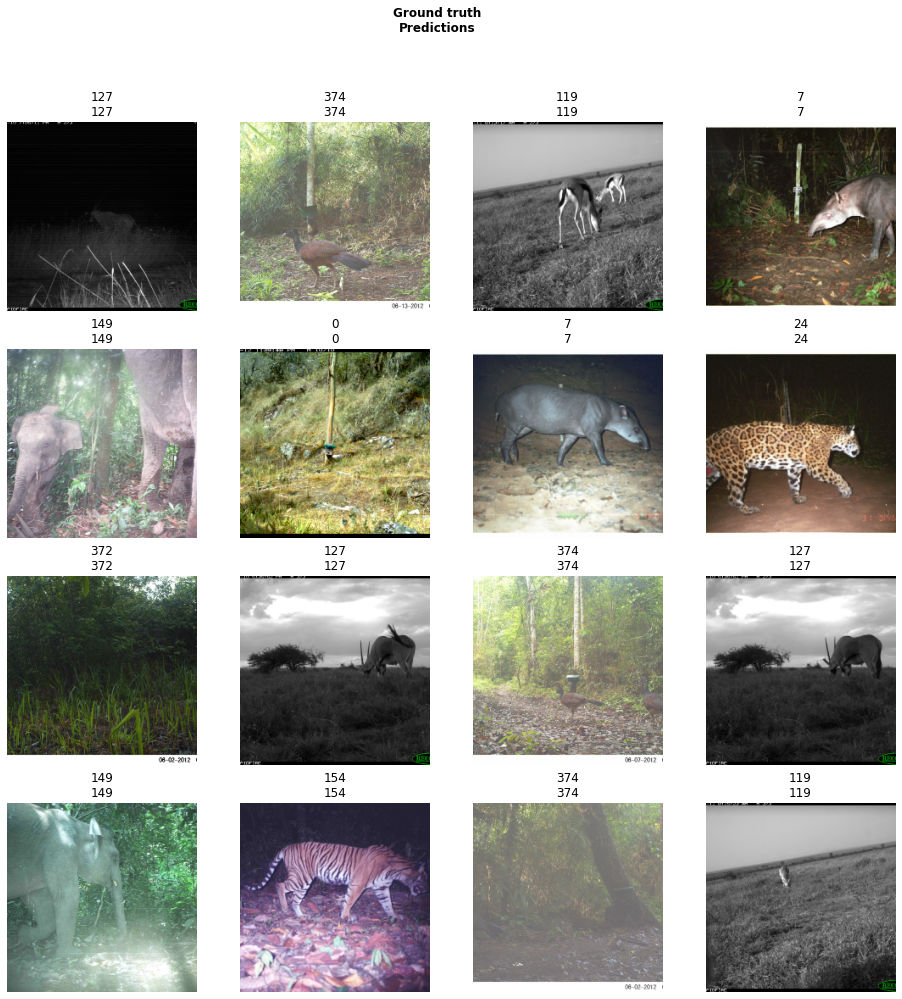

Visualize results in validation set

Notice that after training our model for 5 epochs, we are already seeing ~95% accuracy on our validation dataset. We can further validate this by selecting a few random samples and displaying the ground truth and respective model predictions side by side. This allows us to validate the results of the model in the notebook itself. Once satisfied, we can save the model and use it further in our workflow.

model.show_results(rows=8, thresh=0.2)

Here, a subset of ground truths from the validation data is visualized alongside the predictions from the model. As we can see, our model is performing well, and the predictions are comparable to the ground truth.
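A visual check can be complemented by a per-class breakdown, since a high overall accuracy can hide a class that the model rarely gets right. The following sketch assumes you have collected ground-truth and predicted category ids as two plain lists; the lists below are invented, and `per_class_accuracy` is a hypothetical helper rather than an arcgis.learn function.

```python
from collections import Counter


def per_class_accuracy(truths, predictions):
    """Return {label: fraction of that label's samples predicted correctly}."""
    totals = Counter(truths)
    correct = Counter(t for t, p in zip(truths, predictions) if t == p)
    return {label: correct[label] / totals[label] for label in totals}


# Invented example: category ids for six validation images.
truths = [0, 0, 7, 7, 7, 374]
predictions = [0, 7, 7, 7, 0, 374]

overall = sum(t == p for t, p in zip(truths, predictions)) / len(truths)
by_class = per_class_accuracy(truths, predictions)

print(f"overall accuracy: {overall:.3f}")
for label, accuracy in sorted(by_class.items()):
    print(f"category {label}: {accuracy:.3f}")
```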

Save the model

We will use the save() method to save the trained model. By default, it will be saved to the 'models' sub-folder within our training data folder.

model.save('Wildlife_identification_model', publish=True, overwrite=True)

Model inference

This section demonstrates the application of our trained animal species classification model on the images stored as feature attachments.

Data preparation

To start, we have a feature class named "CameraTraps" that contains 5 features that are hosted on our enterprise account. We will consider these features to be camera locations. We also have multiple locally stored images for each of these camera locations that we want to use against our model.

# Accessing the feature layer on the enterprise.
search_result = gis.content.search("title: CameraTraps owner:portaladmin",
                                   "Feature Layer")
cameratraps = search_result[0]
cameratraps

# Cloning the item to local enterprise account
gis.content.clone_items([cameratraps])

[<Item title:"CameraTraps" type:Feature Layer Collection owner:portaladmin>]

search_result = gis.content.search("title: CameraTraps owner:portaladmin",
                                   "Feature Layer")
cameratraps_clone = search_result[0]
cameratraps_clone

cr_lyr = cameratraps_clone.layers[0]

In order to attach images to each of these camera locations, we need to enable attachments on the feature layer.

cr_lyr.manager.update_definition({"hasAttachments": True})

{'success': True}

cr_lyr.query().sdf

| | globalid | objectid | SHAPE |
|---|---|---|---|
| 0 | {099AC399-BBA2-4D27-BB86-3039DBEE1547} | 1 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 1 | {878DE63D-1922-4AD5-9E92-D645119B1083} | 2 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 2 | {1339C84F-DBBC-40C1-BE30-021AAA443DAA} | 3 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 3 | {4878288B-51BA-4E86-B583-89B12DC5524F} | 4 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 4 | {C073C447-EF6F-42C3-914E-7906D3B9E587} | 5 | {"x": 3519430.7939999998, "y": -2867734.800999... |

Now, we can specify the path on the local drive where the images for each camera location are stored. One important point to note here is that the data folder must contain one sub-folder per camera location, named after the 'objectid' of that location's point, and each sub-folder must contain all of the photos captured by that camera.

In our case, as we have 5 camera locations, we had a data folder containing 5 different folders with the names 1, 2, 3, 4, and 5 as the 'objectid' of the points (see the spatial dataframe table above). Each of these folders had multiple images captured by the cameras. Below is a diagram of the folder structure mentioned:
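The layout described above can also be checked programmatically before any features are edited. The sketch below is a hypothetical helper, not part of the original notebook: it maps every numerically named sub-folder to its image files and rejects folders whose names cannot be an 'objectid'. The demo builds a small temporary directory with empty placeholder files instead of real photos.

```python
import os
import tempfile


def images_by_objectid(data_dir, extensions=(".jpg", ".jpeg", ".png")):
    """Map each sub-folder (named after an objectid) to its sorted image file names."""
    layout = {}
    for name in sorted(os.listdir(data_dir)):
        folder = os.path.join(data_dir, name)
        if not os.path.isdir(folder):
            continue  # ignore stray files next to the camera folders
        if not name.isdigit():
            raise ValueError(f"Folder {name!r} is not named after an objectid")
        layout[int(name)] = sorted(
            f for f in os.listdir(folder) if f.lower().endswith(extensions)
        )
    return layout


# Demo: two camera folders with empty placeholder files.
with tempfile.TemporaryDirectory() as data_dir:
    for oid, files in {1: ["0978.jpg", "1085.jpg"], 2: ["1094.jpg"]}.items():
        os.makedirs(os.path.join(data_dir, str(oid)))
        for file_name in files:
            open(os.path.join(data_dir, str(oid), file_name), "wb").close()
    layout = images_by_objectid(data_dir)

print(layout)  # {1: ['0978.jpg', '1085.jpg'], 2: ['1094.jpg']}
```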

# path to images captured by each of those camera locations.

path = r'./Camera Traps/data'

As there could be multiple images captured at each point, we need to create a separate feature for each image, and then attach the image to the feature added.

for folder in os.listdir(path):
    count = 1
    oid = folder
    for img in os.listdir(os.path.join(path, folder)):
        if count > 1:
            cr_lyr.edit_features(adds=[cr_lyr.query(where='objectId='+folder).features[0]])
            oid = cr_lyr.query().features[-1].attributes.get('objectid')
        print("Adding "+str(img)+" captured by camera number "+str(folder)+" to feature id number "+str(oid))
        cr_lyr.attachments.add(oid, os.path.join(path, folder, img))
        count += 1

Adding 0978.jpg captured by camera number 1 to feature id number 1
Adding 1085.jpg captured by camera number 1 to feature id number 6
Adding 1086.jpg captured by camera number 1 to feature id number 7
Adding 1154.jpg captured by camera number 1 to feature id number 8
Adding 1155.jpg captured by camera number 1 to feature id number 9
Adding 1094.jpg captured by camera number 2 to feature id number 2
Adding 1095.jpg captured by camera number 2 to feature id number 10
Adding 1385.jpg captured by camera number 2 to feature id number 11
Adding 1386.jpg captured by camera number 2 to feature id number 12
Adding 1387.jpg captured by camera number 2 to feature id number 13
Adding 1105.jpg captured by camera number 3 to feature id number 3
Adding 1106.jpg captured by camera number 3 to feature id number 14
Adding 1332.jpg captured by camera number 3 to feature id number 15
Adding 1336.jpg captured by camera number 3 to feature id number 16
Adding 1338.jpg captured by camera number 3 to feature id number 17
Adding 1339.jpg captured by camera number 3 to feature id number 18
Adding 1340.jpg captured by camera number 3 to feature id number 19
Adding 0339.jpg captured by camera number 4 to feature id number 4
Adding 0342.jpg captured by camera number 4 to feature id number 20
Adding 0343.jpg captured by camera number 4 to feature id number 21
Adding 1040.jpg captured by camera number 4 to feature id number 22
Adding 1041.jpg captured by camera number 4 to feature id number 23
Adding 1043.jpg captured by camera number 4 to feature id number 24
Adding 1078.jpg captured by camera number 5 to feature id number 5
Adding 1079.jpg captured by camera number 5 to feature id number 25
Adding 1080.jpg captured by camera number 5 to feature id number 26
Adding 1088.jpg captured by camera number 5 to feature id number 27
Adding 1089.jpg captured by camera number 5 to feature id number 28
Adding 1090.jpg captured by camera number 5 to feature id number 29
Adding 1102.jpg captured by camera number 5 to feature id number 30
Adding 1403.jpg captured by camera number 5 to feature id number 31

Note that there are separate entries in the feature class for each image captured.

cr_lyr.query().sdf

| | globalid | objectid | SHAPE |
|---|---|---|---|
| 0 | {099AC399-BBA2-4D27-BB86-3039DBEE1547} | 1 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 1 | {878DE63D-1922-4AD5-9E92-D645119B1083} | 2 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 2 | {1339C84F-DBBC-40C1-BE30-021AAA443DAA} | 3 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 3 | {4878288B-51BA-4E86-B583-89B12DC5524F} | 4 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 4 | {C073C447-EF6F-42C3-914E-7906D3B9E587} | 5 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 5 | {D39CC217-68BE-4FF9-9937-542E83054830} | 6 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 6 | {8BBF1714-FAB3-48DE-9701-13DF0A884A5F} | 7 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 7 | {83CEAE78-2EB8-4592-9D49-E9635DAE41F6} | 8 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 8 | {D4C7AFF6-A209-4E3F-AB49-4EDA9BFE491D} | 9 | {"x": 3544922.6347999983, "y": -2774718.7709, ... |
| 9 | {945B4D1A-4F7D-4A73-A912-5B0FFAF8BC92} | 10 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 10 | {42F2011B-709D-406D-AA18-8E4D4470C5C2} | 11 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 11 | {0B12B508-CE71-4960-8124-59FB2CD2A7B3} | 12 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 12 | {9ADD7F23-1D2A-4A17-932D-320DBAD31322} | 13 | {"x": 3522933.4897000007, "y": -2791843.061000... |
| 13 | {0555984E-23DD-4BB3-9E55-FDFDD9DCA0F9} | 14 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 14 | {EFB885B5-AFEE-48AE-B649-AD3DF9374EA4} | 15 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 15 | {4E664324-597C-4785-9970-7D8C61EBF824} | 16 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 16 | {B06190FA-73C6-49A1-B0D0-C5727F15EFD7} | 17 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 17 | {D42BB9DB-6E67-4622-A017-D9ECBD7F7EB7} | 18 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 18 | {28387C2F-312D-496F-A85E-1D995EEAF155} | 19 | {"x": 3550955.0551999994, "y": -2813053.829399... |
| 19 | {680C76AE-FE3D-464B-AC9B-B076C0C77F53} | 20 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 20 | {3664B7E4-046E-4866-B470-3645EE0F006B} | 21 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 21 | {E87589AE-AFBC-4A03-ACD4-05FCC1A9A1F3} | 22 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 22 | {C8A53B0A-6ECD-4B7C-BAD1-E3F830D340C5} | 23 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 23 | {2BE5E38D-3D65-42A0-B8A1-2EF5EB0EB0DA} | 24 | {"x": 3541614.533399999, "y": -2845745.6558, "... |
| 24 | {20D5496F-5426-492F-B610-669E7C785897} | 25 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 25 | {510B7CA3-0815-41AE-99AA-44523B231AFF} | 26 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 26 | {3F4F969C-89E8-491B-BC59-101736E9141F} | 27 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 27 | {2A400FB6-DA4F-430E-9716-F3F213E8796F} | 28 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 28 | {0967A49C-79E0-4CC8-86F6-1C3EC8082286} | 29 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 29 | {5776EBB7-443A-40ED-8E00-C18134BA98DD} | 30 | {"x": 3519430.7939999998, "y": -2867734.800999... |
| 30 | {61474AB7-E560-4896-BDD3-B803F8563165} | 31 | {"x": 3519430.7939999998, "y": -2867734.800999... |
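The object ids in this table follow from the loop's bookkeeping: the first image of each camera is attached to the camera's existing feature, and every further image gets a newly added copy of that feature. The following offline sketch simulates that numbering without touching a feature layer. It assumes that the server hands out sequential object ids, which matches the printed log but is not guaranteed in general; `assign_feature_ids` and the placeholder image names are hypothetical.

```python
def assign_feature_ids(images_per_camera):
    """Simulate which feature id each image is attached to.

    images_per_camera maps a camera's objectid to the list of its image names.
    """
    next_id = max(images_per_camera) + 1  # new ids continue after the existing features
    assignments = []
    for camera in sorted(images_per_camera):
        for position, image in enumerate(images_per_camera[camera]):
            if position == 0:
                feature_id = camera   # first image reuses the original feature
            else:
                feature_id = next_id  # later images get a freshly added feature
                next_id += 1
            assignments.append((image, camera, feature_id))
    return assignments


# Number of images per camera, as in the printed log; names are placeholders.
counts = {1: 5, 2: 5, 3: 7, 4: 6, 5: 8}
images = {cam: [f"image_{n}.jpg" for n in range(k)] for cam, k in counts.items()}

assignments = assign_feature_ids(images)
camera_2_ids = [fid for _, cam, fid in assignments if cam == 2]
print(len(assignments), "features in total")
print("camera 2 feature ids:", camera_2_ids)
```

With these counts the simulation yields 31 features, and camera 2 maps to ids 2, 10, 11, 12, and 13, in agreement with the log and the table.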

# To check attachment at feature id - 1
cr_lyr.attachments.get_list(1)

[{'data_size': 598813,
  'exifInfo': None,
  'size': 598813,
  'content_type': 'image/jpeg',
  'keywords': '',
  'name': '0978.jpg',
  'globalid': '{4CE93666-F7E2-4364-A54D-6DF70094B37E}',
  'id': 1,
  'attachmentid': 1,
  'att_name': '0978.jpg',
  'contentType': 'image/jpeg'}]

Inferencing

Next, we will access the published model from the gis server. This model is trained on the entire dataset containing samples belonging to 11 species.

fc_model_package = gis.content.search("title: Wildlife_identification_model owner:portaladmin",
                                      item_type='Deep Learning Package')[0]
fc_model_package

fc_model = Model(fc_model_package)

fc_model.install()

'[resources]models\\raster\\95dab3c1ad224a1ea3176e4f1af24f28\\Wildlife_identification_model.emd'

fc_model.query_info()

{'modelInfo': '{"Framework":"arcgis.learn.models._inferencing","ModelType":"ObjectClassification","ParameterInfo":[{"name":"rasters","dataType":"rasters","value":"","required":"1","displayName":"Rasters","description":"The collection of overlapping rasters to objects to be classified"},{"name":"model","dataType":"string","required":"1","displayName":"Input Model Definition (EMD) File","description":"Input model definition (EMD) JSON file"},{"name":"device","dataType":"numeric","required":"0","displayName":"Device ID","description":"Device ID"},{"name":"batch_size","dataType":"numeric","required":"0","value":"4","displayName":"Batch Size","description":"Batch Size"}]}'}

Now that our data is prepared and the model is installed, we can use the classify_objects tool provided by the ArcGIS API for Python to classify each of the image attachments using the model we trained earlier.
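Before running the tool, it can help to see which parameters the installed model accepts, since the keys passed in `model_arguments` should match those names. The `modelInfo` value returned by `query_info()` is itself a JSON string, so it needs one more decoding step. A small sketch, using an abbreviated copy of the output above (display names and descriptions omitted for brevity):

```python
import json

# Abbreviated copy of the query_info() output; displayName/description fields dropped.
info = {
    "modelInfo": '{"Framework":"arcgis.learn.models._inferencing",'
                 '"ModelType":"ObjectClassification",'
                 '"ParameterInfo":['
                 '{"name":"rasters","dataType":"rasters","value":"","required":"1"},'
                 '{"name":"model","dataType":"string","required":"1"},'
                 '{"name":"device","dataType":"numeric","required":"0"},'
                 '{"name":"batch_size","dataType":"numeric","required":"0","value":"4"}]}'
}

# The dictionary value is a JSON string, so decode it before reading fields.
model_info = json.loads(info["modelInfo"])

# Optional parameters and their default values (None when no default is listed).
optional = {
    param["name"]: param.get("value")
    for param in model_info["ParameterInfo"]
    if param["required"] == "0"
}

print(model_info["ModelType"])
print(optional)
```

Here the optional parameters are `device` and `batch_size`, and the call below overrides only `batch_size`.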

inferenced_item = classify_objects(input_raster=cr_lyr,
                                   model=fc_model,
                                   model_arguments={'batch_size': 4},
                                   output_name="inferenced_cameratraps",
                                   class_value_field='ClassLabel',
                                   context={'processorType':'GPU'},
                                   gis=gis)
inferenced_item

Conclusion

In this notebook, we saw how to train a deep learning model to classify camera trap images. We used fast.ai's 'ImageDataBunch.from_df' function to prepare our data from the dataframe we created, and the FeatureClassifier model provided by arcgis.learn to train a feature classifier. We also worked with feature layer attachments to attach images to features and classify the attachment images linked to them.

This notebook is a clear example showcasing how easily the functions provided by arcgis.learn can be used interchangeably with the functions provided by other vision APIs.