Introduction

Building footprints are a required layer in many mapping exercises, for example basemap preparation, humanitarian aid, disaster management, and transportation planning. Traditionally, GIS analysts delineate building footprints by digitizing aerial and high-resolution satellite imagery.

This sample shows how ArcGIS API for Python can be used to train a deep learning model to extract building footprints from drone data. The models trained can be used with ArcGIS Pro or ArcGIS Enterprise and even support distributed processing for quick results.

For this sample we will use data that originates from USAA and covers the region affected by the Woolsey fires. The imagery used here is an orthomosaic of data acquired just after the fires using an optical sensor on a drone, with a spatial resolution of 30 cm.

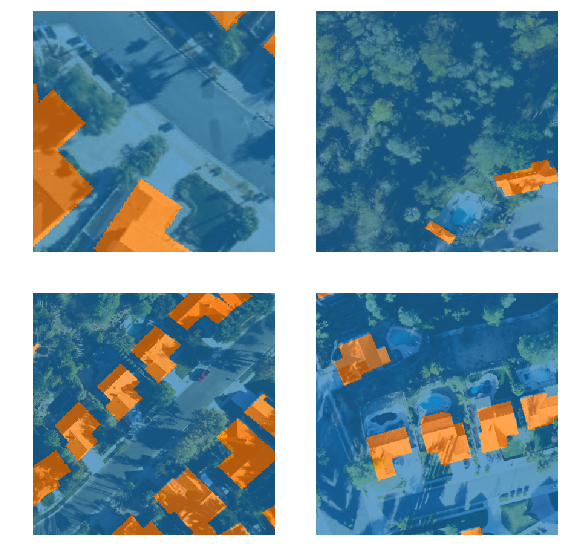

A subset of the drone imagery used, overlaid with training data (building footprint layer).

This workflow has three steps:

- Export Training Data

- Train a Model

- Deploy Model and Extract Footprints

Export Training Data

Training data can be exported by using the 'Export Training Data For Deep Learning' tool available in ArcGIS Pro as well as ArcGIS Enterprise. For this example we prepared training data in 'Classified Tiles' format using a 'chip_size' of 400px and 'cell_size' of 30cm in ArcGIS Pro.

Rasterize Building Footprints

Rasterize the building footprints using the 'Polygon to Raster' tool.

Rasterize Building Footprints

arcpy.conversion.PolygonToRaster("Building_Footprints", "OBJECTID", r"C:\sample\sample.gdb\Building_Footprints_PolygonToRaster", "CELL_CENTER", "NONE", 0.3)
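To illustrate what the "CELL_CENTER" assignment in the call above means, here is a minimal pure-Python sketch: a cell receives the polygon's value only when the cell's centre falls inside the polygon. The building coordinates, grid origin and helper names are hypothetical, and this is not how the tool is implemented internally.

```python
def point_in_polygon(x, y, ring):
    """Ray-casting test: True if (x, y) lies inside the closed ring."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does a horizontal ray starting at (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(ring, x_min, y_max, n_cols, n_rows, cell_size, value=1):
    """Cells whose centre falls inside the ring get `value`, others None (NoData)."""
    grid = []
    for row in range(n_rows):
        y = y_max - (row + 0.5) * cell_size  # centre of this row
        line = []
        for col in range(n_cols):
            x = x_min + (col + 0.5) * cell_size  # centre of this column
            line.append(value if point_in_polygon(x, y, ring) else None)
        grid.append(line)
    return grid


# Hypothetical 1.2 m x 0.6 m building, coordinates in metres
building = [(0.3, 0.3), (1.5, 0.3), (1.5, 0.9), (0.3, 0.9)]
raster = rasterize(building, x_min=0.0, y_max=1.2, n_cols=6, n_rows=4, cell_size=0.3)
building_cells = sum(cell == 1 for row in raster for cell in row)
```

Cells outside the footprint stay as NoData (None here), which is why the next step reclassifies them to 0.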

Reclassify the Rasterized Building Footprints

Convert all NoData values to '0' and all other values to '1' using the 'Reclassify' tool. After selecting the input raster, click 'Classify' and set only one class. For the class covering the valid data range, set the 'New' value to '1'; for 'NoData', set the 'New' value to '0'.

Reclassify Raster

arcpy.ddd.Reclassify("Building_Footprints_PolygonToRaster", "Value", "1 1;NODATA 0", r"C:\sample\sample.gdb\Building_Footprints_PolygonToRaster_reclass", "DATA")
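The reclassification rule itself is simple enough to sketch in plain Python. In this illustration None stands in for NoData and the cell values are made up; it only mirrors the rule described above, not the tool's implementation.

```python
def reclassify_to_binary(grid, nodata=None):
    """Map NoData cells to 0 (background) and every valid cell to 1 (building)."""
    return [[0 if cell == nodata else 1 for cell in row] for row in grid]


# Hypothetical rasterized footprints: numbers are feature IDs, None is NoData
rasterized = [
    [None, 17, 17, None],
    [None, 17, 17, 42],
    [None, None, None, 42],
]
binary = reclassify_to_binary(rasterized)

# Only 0 and 1 remain, so the result fits an 8-bit unsigned pixel type
fits_uint8 = all(0 <= v <= 255 for row in binary for v in row)
```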

Convert to a Thematic Raster with Pixel Type '8bit unsigned'

Export the reclassified raster from the previous step to an '8bit unsigned' raster.

Export Raster

arcpy.management.CopyRaster(r"C:\sample\sample.gdb\Building_Footprints_PolygonToRaster_reclass", r"C:\sample\sample.gdb\Building_Footprints_PolygonToRaster_reclass_8bitu", '', None, '', "NONE", "NONE", "8_BIT_UNSIGNED", "NONE", "NONE", "GRID", "NONE", "CURRENT_SLICE", "NO_TRANSPOSE")

Open the 'Catalog' pane, navigate to the raster you just exported, right-click it and select 'Properties'. In the 'Raster Properties' pane, change the source type to 'Thematic'.

Set the Source Type of Raster to Thematic.

A subset of our thematic raster generated by the previous steps.

Export training data

Export training data using the 'Export Training Data For Deep Learning' tool; detailed documentation is available here.

- Set the drone imagery as 'Input Raster'.

- Set the location where you want to export the training data. It can be an existing folder; otherwise the tool will create it for you.

- Set the classified raster as 'Input Feature Class Or Classified Raster'.

- In 'Input Mask Polygons' we can set a mask layer so that the tool exports training data only for areas that contain buildings. We created one by generating a 200 m by 200 m grid over the extent of the building footprint layer and dissolving only those polygons that contained buildings into a single multipolygon feature.

- 'Tile Size X' and 'Tile Size Y' can be set to 400, the chip size used in this example.

- Select 'Classified Tiles' as the 'Meta Data Format' because we are training a U-Net model.

- In the 'Environments' tab, set an optimum 'Cell Size': small enough for the model to learn the texture of building roofs, and large enough for multiple buildings to fall within one tile so that the model can also learn the surrounding context. For this example we used a cell size of 0.3, which corresponds to 30 cm in a projected coordinate system.

arcpy.ia.ExportTrainingDataForDeepLearning("Imagery", r"C:\sample\Data\Training Data 400px 30cm", "Building_Footprints_8bitu.tif", "TIFF", 400, 400, 0, 0, "ONLY_TILES_WITH_FEATURES", "Classified_Tiles", 0, None, 0, "grid_200m_Dissolve", 0, "MAP_SPACE", "NO_BLACKEN", "Fixed_Size")

This will create all the necessary files needed for the next step in the 'Output Folder', and we will now call it our training data.
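To make the cell-size trade-off concrete, the following sketch works out how much ground one 400 px tile covers at a 0.3 m cell size and how many pixels a building spans. The 12 m house width is a hypothetical figure chosen only for illustration.

```python
def ground_extent_m(size_px, cell_size_m):
    """Ground distance covered by size_px pixels at the given cell size."""
    return size_px * cell_size_m


def pixels_for(length_m, cell_size_m):
    """Approximate number of pixels spanning length_m on the ground."""
    return round(length_m / cell_size_m)


tile_side_m = ground_extent_m(400, 0.3)   # side length of one training tile
house_px = pixels_for(12.0, 0.3)          # hypothetical 12 m wide house
house_share = house_px / 400              # fraction of the tile width it spans
```

With these numbers a tile covers about 120 m on each side, so a typical house occupies roughly a tenth of the tile width, leaving room for neighbouring buildings and their surroundings.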

Train the Model

This step is done in a Jupyter notebook; documentation on installing and setting up the environment is available here.

Required Imports

import os

from pathlib import Path

import arcgis

from arcgis.gis import GIS

from arcgis.learn import prepare_data

Prepare Data

We specify the path to our training data and a few hyperparameters.

path: path of the folder containing the training data.

chip_size: the same value that was specified while exporting the training data.

batch_size: number of images the model trains on in each step inside an epoch. It depends directly on the memory of your graphics card; 8 worked for us on an 11 GB GPU.

gis = GIS('home')

training_data = gis.content.get('2f9058a37129460fac8a661c5d462a5f')

training_data

filepath = training_data.download(file_name=training_data.name)

import zipfile

with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

data_path = Path(os.path.join(os.path.splitext(filepath)[0]))

# Prepare Data
data = prepare_data(path=data_path,
                    chip_size=400,
                    batch_size=8)
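As a rough guide to what batch_size implies for training, this sketch counts the training steps in one epoch. The dataset size of 1,000 chips and the 20% validation share are assumptions passed in as arguments, and the helper name is hypothetical; it is not part of arcgis.learn.

```python
import math


def steps_per_epoch(n_chips, batch_size, val_fraction):
    """Training steps in one epoch after holding out a validation share."""
    n_val = int(n_chips * val_fraction)
    n_train = n_chips - n_val
    # Assumes the last, possibly smaller, batch is still used
    return math.ceil(n_train / batch_size)


# Hypothetical dataset size; batch_size matches the value used above
steps = steps_per_epoch(n_chips=1000, batch_size=8, val_fraction=0.2)
total_steps = steps * 30  # 30 epochs, as in the fit step of this sample
```

Halving the batch size doubles the number of steps per epoch, which is the usual trade-off when GPU memory is limited.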

Visualise a few samples from your Training data

The code below shows a few samples of our data with the same symbology as in ArcGIS Pro.

rows: number of rows of samples to display.

alpha: controls the opacity of the labels (classified imagery) over the drone imagery.

data.show_batch(rows=2, alpha=0.7)

Load an UnetClassifier model

The code below creates a UnetClassifier model, which is based on 'U-net', a state-of-the-art deep learning architecture. This type of model is used where pixel-wise segmentation, or in GIS terminology imagery classification, is needed. By default the model is loaded on a pretrained ResNet backbone.

# Create Unet Model

model = arcgis.learn.models.UnetClassifier(data)

Find an Optimal Learning Rate

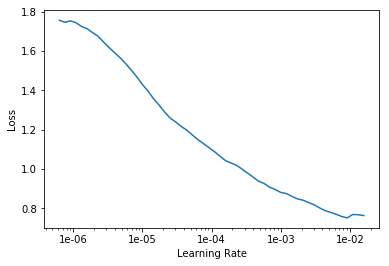

Optimization in deep learning is all about tuning hyperparameters. In this step we will find an optimum learning rate for our model on the training data. The learning rate is a very important parameter: while training, the model sees the training data several times and adjusts itself (the weights of the network). Too high a learning rate will lead the model to converge to a suboptimal solution, and too low a learning rate can slow down convergence. We can use the lr_find() method to find an optimum learning rate at which we can train a robust model fast enough.

# Find Learning Rate

model.lr_find()

The above method passes small batches of data to the model at a range of learning rates and records the loss for each learning rate after training. The result is this graph, where the loss is plotted on the y-axis against the respective learning rate on the x-axis. Looking at the graph, we can see that the loss decreases continuously after 1e-06 (0.000001) and keeps falling until it bumps back up at 1e-02. We can pick any value near the center of this steepest decline. Here we pick 1e-03 (0.001) as the highest learning rate and set the lowest learning rate to a tenth of it in the next step.
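Reading the graph can also be done numerically. The sketch below looks for the steepest drop in loss per decade of learning rate and then builds the learning-rate range used in this sample. The sweep values are hypothetical and are not the numbers behind the graph; the helper is not part of arcgis.learn.

```python
import math


def steepest_descent_lr(lrs, losses):
    """Return the learning rate at the middle of the steepest loss drop.

    Slope is measured per decade of learning rate, because the
    learning-rate axis of such plots is logarithmic.
    """
    best_slope, best_lr = 0.0, None
    for i in range(len(lrs) - 1):
        decades = math.log10(lrs[i + 1]) - math.log10(lrs[i])
        slope = (losses[i + 1] - losses[i]) / decades
        if slope < best_slope:
            best_slope = slope
            best_lr = math.sqrt(lrs[i] * lrs[i + 1])  # geometric midpoint
    return best_lr


# Hypothetical sweep, NOT the values behind the graph in this sample
lrs = [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1]
losses = [0.70, 0.68, 0.60, 0.40, 0.35, 0.90]
suggested = steepest_descent_lr(lrs, losses)

# The sample's own choice: 1e-03 as the upper bound, a tenth of it as the lower
max_lr = 1e-3
lr_range = slice(max_lr / 10, max_lr)
```

For this made-up sweep the steepest drop lies between 1e-04 and 1e-03, so the suggested value falls inside that interval.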

Fit the model

We will fit the model for 30 epochs. One epoch means the model sees the complete training set once.

# Training

model.fit(30, lr=slice(0.0001, 0.001))

| epoch | train_loss | valid_loss | accuracy |
|---|---|---|---|
| 1 | 0.380910 | 0.240573 | 0.903260 |
| 2 | 0.280911 | 0.186109 | 0.924779 |
| 3 | 0.239853 | 0.174129 | 0.928293 |
| 4 | 0.217517 | 0.210512 | 0.917524 |
| 5 | 0.230968 | 0.214885 | 0.916372 |
| 6 | 0.230243 | 0.150260 | 0.944047 |
| 7 | 0.209158 | 0.163510 | 0.937708 |
| 8 | 0.237564 | 0.214373 | 0.926745 |
| 9 | 0.252157 | 0.176768 | 0.924209 |
| 10 | 0.235220 | 0.190232 | 0.933598 |
| 11 | 0.222909 | 0.193961 | 0.930031 |
| 12 | 0.198069 | 0.161947 | 0.944350 |
| 13 | 0.186451 | 0.140387 | 0.948500 |
| 14 | 0.179039 | 0.124703 | 0.951387 |
| 15 | 0.175097 | 0.117605 | 0.953894 |
| 16 | 0.161916 | 0.130282 | 0.949465 |
| 17 | 0.157149 | 0.125330 | 0.952347 |
| 18 | 0.161107 | 0.147009 | 0.952743 |
| 19 | 0.157675 | 0.121990 | 0.953016 |
| 20 | 0.151883 | 0.109569 | 0.958434 |
| 21 | 0.143869 | 0.109937 | 0.957520 |
| 22 | 0.140947 | 0.107090 | 0.959074 |
| 23 | 0.133209 | 0.109938 | 0.958108 |
| 24 | 0.136570 | 0.107677 | 0.958610 |
| 25 | 0.132041 | 0.104161 | 0.959987 |
| 26 | 0.130080 | 0.103443 | 0.960242 |
| 27 | 0.141798 | 0.103523 | 0.960438 |
| 28 | 0.130960 | 0.103431 | 0.959819 |
| 29 | 0.125838 | 0.102333 | 0.960349 |
| 30 | 0.121595 | 0.102537 | 0.960120 |

The full training data was split into a training set and a validation set at the Prepare Data step. By default the validation set is 0.2, or 20%, of the full training data, and the remaining 80% goes into the training set. Here we can see the loss on both the training and validation sets, which helps us judge how well the model generalizes to data it has never seen and helps prevent overfitting. We can also see that the model classifies pixels as building or non-building with an accuracy of 96%.
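The accuracy column is a per-pixel measure. As a minimal sketch of what that means, the code below compares a predicted mask with a ground-truth mask pixel by pixel, and also computes intersection over union (IoU) for the building class as a complementary check. The two small masks are made up, and these helpers are illustrations, not the metric code used by arcgis.learn.

```python
def pixel_accuracy(pred, truth):
    """Share of pixels whose predicted class equals the ground truth."""
    total = correct = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            total += 1
            correct += int(p == t)
    return correct / total


def class_iou(pred, truth, cls=1):
    """Intersection over union for one class (1 = building here)."""
    inter = union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            inter += int(p == cls and t == cls)
            union += int(p == cls or t == cls)
    return inter / union if union else 1.0


# Hypothetical 4 x 4 masks: 1 = building, 0 = background
truth = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
pred = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0], [0, 0, 0, 1]]

acc = pixel_accuracy(pred, truth)
iou = class_iou(pred, truth)
```

Because background pixels usually dominate a tile, pixel accuracy can look high even when building outlines are imperfect, so it is worth inspecting the predictions visually as well.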

Save the model

We will now save the model we just trained as a 'Deep Learning Package' ('.dlpk' format). The Deep Learning Package is the standard format used to deploy deep learning models on the ArcGIS platform. UnetClassifier models can be deployed with the 'Classify Pixels Using Deep Learning' tool, available in ArcGIS Pro as well as ArcGIS Enterprise. For this sample we will use this model in ArcGIS Pro to extract building footprints.

We will use the save() method to save the model and by default it will be saved to a folder 'models' inside our training data folder itself.

# Save model to file

model.save('30e')

Load an Intermediate model to train it further

If we need to train an already saved model further, we can load it again using the code below and then go back to the Fit the model step.

# Load Model from previous saved files

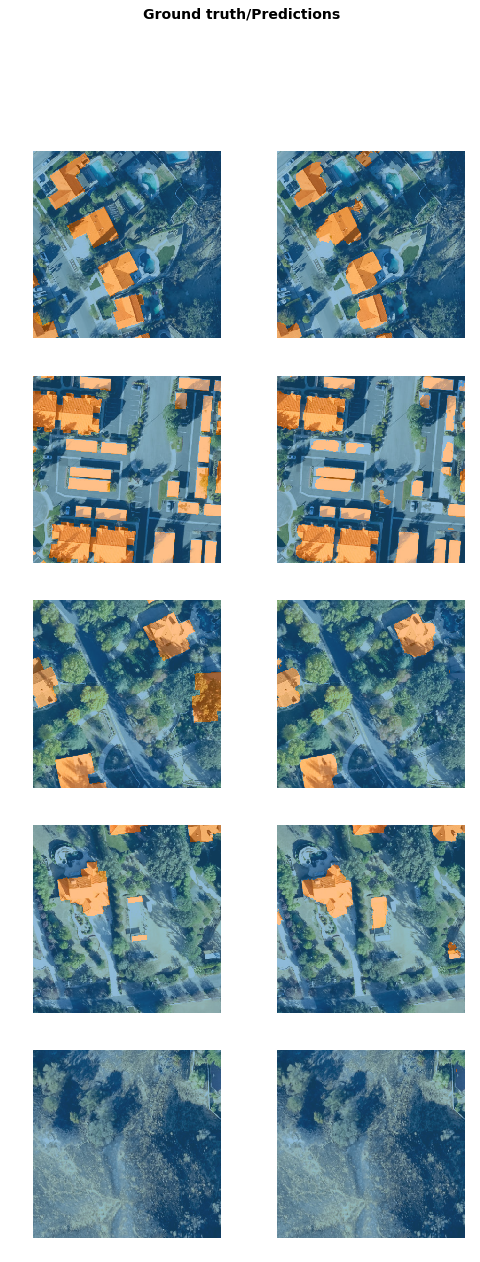

# model.load('30e')

Preview Results

The code below picks a few random samples and shows the ground truth and the respective model predictions side by side. This lets us preview the results of our model in the notebook itself. Once satisfied, we can save the model and use it further in our workflow.

# Preview Results

model.show_results()

Deploy Model and Extract Footprints

The deep learning package saved in the previous step can be used to produce a classified raster with the 'Classify Pixels Using Deep Learning' tool. The classified raster is then regularized and finally converted to a vector polygon layer. The regularization step uses advanced ArcGIS geoprocessing tools to remove unwanted artifacts in the output.

Generate a 'Classified Raster' using 'Classify Pixels Using Deep Learning' tool

In this step we will generate a classified raster using the 'Classify Pixels Using Deep Learning' tool, available in both ArcGIS Pro and ArcGIS Enterprise.

- Input Raster: the raster layer from which we want to extract building footprints.

- Model Definition: located inside the saved model in the 'models' folder, in '.emd' format.

- padding: the 'Input Raster' is tiled, and the deep learning model classifies each tile separately before producing the final 'Output Classified Raster'. This may lead to unwanted artifacts along the edges of each tile, where the model has little context to predict accurately. Padding, as the name suggests, supplies some extra information along the tile edges, which helps the model predict better.

- Cell Size: should be close to the cell size at which we trained the model, which we specified at the Export training data step.

- Processor Type: controls whether the system's 'GPU' or 'CPU' is used to classify pixels. By default the 'GPU' is used if available.

- Parallel Processing Factor: allows us to scale this tool on both 'CPU' and 'GPU'. It specifies how many CPU cores (for CPU-based operation) or how many GPUs (for GPU-based operation) the operation is spread across.

out_classified_raster = arcpy.ia.ClassifyPixelsUsingDeepLearning("Imagery", r"C:\sample\Data\Training Data 400px 30cm\models\30e\30e.emd", "padding 100;batch_size 8")

out_classified_raster.save(r"C:\sample\sample.gdb\Imagery_ClassifyPixelsUsingD")
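The padding idea described above can be pictured along one axis of the raster. In this sketch each tile is read with extra context on both sides, but only its central part is kept in the output. It assumes a 400 px tile with 100 px of padding and a hypothetical 1,000 px wide raster; it shows one common way to tile with overlap and is not the tool's actual implementation.

```python
def padded_windows(length, tile, padding):
    """Return (read_start, read_stop, keep_start, keep_stop) per tile along one axis.

    The model sees pixels read_start..read_stop; only keep_start..keep_stop
    is written to the output, so edge predictions are discarded.
    """
    core = tile - 2 * padding
    if core <= 0:
        raise ValueError("padding must be less than half the tile size")
    windows = []
    for keep_start in range(0, length, core):
        keep_stop = min(keep_start + core, length)
        read_start = keep_start - padding  # may fall outside the raster
        read_stop = read_start + tile      # and would then need edge filling
        windows.append((read_start, read_stop, keep_start, keep_stop))
    return windows


# Hypothetical raster width; tile and padding follow the values in this sample
windows = padded_windows(length=1000, tile=400, padding=100)
```

The kept ranges tile the axis without gaps, while neighbouring read ranges overlap by twice the padding, so every output pixel is predicted with context on both sides.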

The output of this tool is a classified raster containing both background and building footprints.

A subset of predictions by our model

Streamline Postprocessing Workflow Using Model Builder

Because the postprocessing workflow below involves quite a few tools, and the parameters set in these tools need to be experimented with to get optimum results, we use Model Builder to streamline it and to let us change the parameters iteratively.

Postprocessing workflow in Model Builder.

Tools used and important parameters:

- Majority Filter: reduces noise in the classified raster. We use a majority of 'four' neighbouring cells to smooth the raster.

- Raster to Polygon: vectorizes the raster to polygons based on cell values. We keep the 'Simplify Polygons' and 'Create Multipart Features' options unchecked.

- Eliminate: removes selected records by merging them into neighbouring features. Before using this tool, we selected features with an area below 10 sq m in order to remove small unwanted noise.

- Buffer: to slightly increase the coverage of buildings, we buffer them by '5cm'. Before buffering, we select only the building features, which removes background features from the output of this tool.

- Regularize Building Footprint: produces the final finished results by shaping them to look like actual buildings. The 'Method' for regularization is 'Right Angles and Diagonals', and the parameters 'Tolerance', 'Densification', 'Precision' and 'Diagonal Penalty' are '1.5', '1', '0.25' and '2' respectively.
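To give a feel for the first step in this chain, here is a simplified pure-Python sketch of a four-neighbour majority filter: a cell is replaced when at least three of its four orthogonal neighbours share one value. This rule and the small grid are assumptions made for illustration; the Majority Filter tool applies additional conditions and is not reproduced exactly.

```python
from collections import Counter


def majority_filter_4(grid):
    """Replace a cell when at least 3 of its 4 orthogonal neighbours agree."""
    n_rows, n_cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]  # read from grid, write to a copy
    for r in range(n_rows):
        for c in range(n_cols):
            neighbours = [
                grid[rr][cc]
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= rr < n_rows and 0 <= cc < n_cols
            ]
            value, count = Counter(neighbours).most_common(1)[0]
            if count >= 3:
                out[r][c] = value
    return out


# Hypothetical classified raster: one stray building pixel and one hole
noisy = [
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [1, 1, 0, 1, 1],
]
smoothed = majority_filter_4(noisy)
```

The isolated building pixel is removed and the hole in the building is filled, while the boundary between the two regions stays where it was.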

Attached below is the toolbox:

from arcgis.gis import GIS

gis = GIS(username='api_data_owner')

gis.content.get('4bbb65755af24ff895581b39dc1d73e9')

Final Output

The final output is a feature class.

A subset of extracted building footprints after postprocessing.

A few subsets of results overlaid on the drone imagery

Limitations

This type of model works well when buildings are separated from each other by some distance. Where buildings touch each other, instance segmentation models should be tried, as they are likely to give better results.
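The limitation can be seen by counting connected regions in a binary mask: a pixel classifier only says "building" or "not building", so two buildings that touch end up as one region and would be vectorized as a single polygon. The masks below are made up, and the helper is a plain flood fill written for this illustration.

```python
def count_regions(mask):
    """Count 4-connected regions of building pixels (value 1) in a mask."""
    n_rows, n_cols = len(mask), len(mask[0])
    seen = set()
    count = 0
    for r in range(n_rows):
        for c in range(n_cols):
            if mask[r][c] != 1 or (r, c) in seen:
                continue
            count += 1  # found a new region; flood-fill it
            stack = [(r, c)]
            seen.add((r, c))
            while stack:
                cr, cc = stack.pop()
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (0 <= nr < n_rows and 0 <= nc < n_cols
                            and mask[nr][nc] == 1 and (nr, nc) not in seen):
                        seen.add((nr, nc))
                        stack.append((nr, nc))
    return count


# Two hypothetical buildings with a gap between them, then with no gap
separated = [[1, 1, 0, 1, 1], [1, 1, 0, 1, 1]]
touching = [[1, 1, 1, 1], [1, 1, 1, 1]]

n_separated = count_regions(separated)
n_touching = count_regions(touching)
```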