
Introduction

Deep learning methods tend to perform well when large amounts of training data are available, compared with traditional machine learning methods. This dependence on large training sets is also one of their drawbacks. These methods have been used in the geospatial domain to detect objects [1,2] and to classify land use [3], with favourable results, but labelling input satellite data has always been laborious. In this notebook, we therefore use a supervised classification approach to generate the required volume of data. After cleaning, this data is used to meet the larger training data requirement of a deep learning model.

To demonstrate this, we detect settlements in Saharanpur district in Uttar Pradesh, India. Settlements are important to urban planners, and monitoring them over time can lay the foundation for designing urban policies for any government.

Necessary imports

%matplotlib inline

import pandas as pd

from datetime import datetime

import matplotlib.pyplot as plt

import arcgis

from arcgis.gis import GIS

from arcgis.geocoding import geocode

from arcgis.learn import prepare_data, UnetClassifier

from arcgis.raster.functions import clip, apply, extract_band, colormap, mask, stretch

from arcgis.raster.analytics import train_classifier, classify, list_datastore_content

Connect to your GIS

gis = GIS(url='https://pythonapi.playground.esri.com/portal', username='arcgis_python', password='amazing_arcgis_123')

Get the data for analysis

Search for the Multispectral Landsat imagery layer in ArcGIS Online. Setting outside_org to True lets us search content shared by users outside our organization.

landsat_item = gis.content.search('title:Multispectral Landsat', 'Imagery Layer', outside_org=True)[0]

landsat = landsat_item.layers[0]

landsat_item

Search for the India District Boundaries 2018 layer in ArcGIS Online. In this item, the district boundaries for India are at layer index 2.

boundaries = gis.content.search('India District Boundaries 2018', 'Feature Layer', outside_org=True)[2]

boundaries

district_boundaries = boundaries.layers[2]

district_boundaries

<FeatureLayer url:"https://pythonapi.playground.esri.com/portal/sharing/servers/12fc8ff238e7420ea43906a9a33a9aab/rest/services/IND_Boundaries_2018/FeatureServer/2">

m = gis.map('Saharanpur, India')

m.add_layer(district_boundaries)

m.legend = True

As this notebook detects settlements in Saharanpur district, we geocode Saharanpur and use its extent for the Landsat layer.

area = geocode("Saharanpur, India", out_sr=landsat.properties.spatialReference)[0]

landsat.extent = area['extent']

To restrict the analysis to Saharanpur district, we query the district boundary layer with the condition ID = 09132. The code below also brings the imagery and the feature layer to the same extent.

saharanpur = district_boundaries.query(where='ID=09132')  # query for the Saharanpur district boundary

saharanpur_geom = saharanpur.features[0].geometry # Extracting geometry of Saharanpur district boundary

saharanpur_geom['spatialReference'] = {'wkid':4326} # Set the Spatial Reference

saharanpur.features[0].extent = area['extent']  # Set the extent

Get the training points for training the classifier. There are 212 points in total, labelled with 5 classes: Urban, Forest, Agricultural, Water and Wasteland. The points were marked in ArcGIS Pro and published to the GIS server.

data = gis.content.search('classificationPointsSaharanpur', 'Feature Layer')[0]

data

Filter satellite imagery based on cloud cover and time duration

To produce good results, it is important to select cloud-free imagery from the image collection for a specified time duration. In this example, we select all images of the Saharanpur region captured between 1 October 2018 and 31 December 2018 with cloud cover less than or equal to 5%.

selected = landsat.filter_by(where="(Category = 1) AND (cloudcover <=0.05)",

time=[datetime(2018, 10, 1),

datetime(2018, 12, 31)],

geometry=arcgis.geometry.filters.intersects(area))

df = selected.query(out_fields="AcquisitionDate, GroupName, CloudCover, DayOfYear",

order_by_fields="AcquisitionDate").sdf

df['AcquisitionDate'] = pd.to_datetime(df['AcquisitionDate'], unit='ms')

df

| | OBJECTID | AcquisitionDate | GroupName | CloudCover | DayOfYear | Shape_Length | Shape_Area | SHAPE |

|---|---|---|---|---|---|---|---|---|

| 0 | 2360748 | 2018-10-02 05:18:12 | LC81460392018275LGN00_MTL | 0.0411 | 275 | 863211.750925 | 4.652535e+10 | {'rings': [[[8842440.179299999, 3627905.796800... |

| 1 | 2360749 | 2018-10-02 05:18:36 | LC81460402018275LGN00_MTL | 0.0007 | 275 | 851821.808928 | 4.530870e+10 | {'rings': [[[8799651.220800001, 3442773.486500... |

| 2 | 2365229 | 2018-10-09 05:24:26 | LC81470392018282LGN00_MTL | 0.0235 | 282 | 864249.005456 | 4.664003e+10 | {'rings': [[[8670522.289099999, 3627673.343800... |

| 3 | 2365230 | 2018-10-09 05:24:50 | LC81470402018282LGN00_MTL | 0.0104 | 282 | 852038.709661 | 4.533114e+10 | {'rings': [[[8627077.993299998, 3442759.749600... |

| 4 | 2363765 | 2018-10-25 05:24:31 | LC81470392018298LGN00_MTL | 0.0034 | 298 | 864003.480054 | 4.661440e+10 | {'rings': [[[8668674.6948, 3627854.217100002],... |

| 5 | 2363766 | 2018-10-25 05:24:55 | LC81470402018298LGN00_MTL | 0.0084 | 298 | 852052.926372 | 4.533265e+10 | {'rings': [[[8625255.243900001, 3442931.826300... |

| 6 | 2374779 | 2018-10-27 05:12:34 | LC81450402018300LGN00_MTL | 0.0003 | 300 | 851500.296316 | 4.527314e+10 | {'rings': [[[8969606.0125, 3442791.7321000025]... |

| 7 | 2382362 | 2018-11-10 05:24:34 | LC81470392018314LGN00_MTL | 0.0009 | 314 | 863994.276519 | 4.661226e+10 | {'rings': [[[8669263.2619, 3627728.654100001],... |

| 8 | 2383968 | 2018-11-12 05:12:36 | LC81450402018316LGN00_MTL | 0.0001 | 316 | 851603.254073 | 4.528346e+10 | {'rings': [[[8969738.007399999, 3442925.248800... |

| 9 | 2391317 | 2018-11-26 05:24:34 | LC81470392018330LGN00_MTL | 0.0031 | 330 | 863991.415360 | 4.661195e+10 | {'rings': [[[8669961.7306, 3627710.4842000008]... |

| 10 | 2391319 | 2018-11-26 05:24:58 | LC81470402018330LGN00_MTL | 0.0106 | 330 | 851938.390626 | 4.532084e+10 | {'rings': [[[8626659.587900002, 3442921.529799... |

| 11 | 2392531 | 2018-12-05 05:18:45 | LC81460402018339LGN00_MTL | 0.0005 | 339 | 851973.199621 | 4.532425e+10 | {'rings': [[[8799698.1228, 3442772.1602], [874... |

| 12 | 2397733 | 2018-12-14 05:12:32 | LC81450402018348LGN00_MTL | 0.0100 | 348 | 851383.857661 | 4.526085e+10 | {'rings': [[[8972363.073399998, 3442845.388999... |

| 13 | 2401123 | 2018-12-21 05:18:44 | LC81460402018355LGN00_MTL | 0.0222 | 355 | 851916.741276 | 4.531834e+10 | {'rings': [[[8800469.5092, 3442744.7710999995]... |

| 14 | 2401177 | 2018-12-28 05:24:31 | LC81470392018362LGN00_MTL | 0.0019 | 362 | 863720.224906 | 4.658332e+10 | {'rings': [[[8671754.256099999, 3627529.248599... |

| 15 | 2403690 | 2018-12-30 05:12:33 | LC81450402018364LGN00_MTL | 0.0086 | 364 | 851598.768218 | 4.528268e+10 | {'rings': [[[8972212.594099998, 3442761.256499... |
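The query above is evaluated by the imagery service. As a rough local illustration of the same selection logic, the sketch below keeps scenes acquired inside a date window with cloud cover of at most 5%, and converts epoch-millisecond timestamps the way pd.to_datetime(..., unit='ms') does. The function names and the record layout are hypothetical; the sample values in the usage mirror rows of the table above.

```python
from datetime import datetime, timezone

def to_utc(epoch_ms):
    """Convert epoch milliseconds to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc)

def select_scenes(records, start, end, max_cloud=0.05):
    """Keep (object_id, epoch_ms, cloud_cover) records acquired between start and
    end (inclusive) with cloud_cover <= max_cloud, sorted by acquisition date."""
    converted = [(oid, to_utc(ms), cloud) for oid, ms, cloud in records]
    kept = [r for r in converted if start <= r[1] <= end and r[2] <= max_cloud]
    return sorted(kept, key=lambda r: r[1])
```

Because to_utc returns timezone-aware datetimes, start and end must also be timezone-aware, for example datetime(2018, 10, 1, tzinfo=timezone.utc).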

We can now select the image dated 2 October 2018 from the collection using its OBJECTID, 2360748.

saharanpur_image = landsat.filter_by('OBJECTID=2360748')  # 2018-10-02

m = gis.map('Saharanpur, India')

m.add_layer(apply(saharanpur_image, 'Natural Color with DRA'))

m.add_layer(saharanpur)

m.legend = True

m

Our area of interest is larger than the single scene we selected and cannot be enclosed by it, so we mosaic two images to cover the AOI. We mosaic the rasters with OBJECTIDs 2360748 and 2365229 using mosaic_by with method="LockRaster".
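Conceptually, a LockRaster mosaic restricts the output to the listed rasters and resolves the overlaps between them. The sketch below assumes the scenes are already on a common pixel grid and that overlaps are resolved with a "first" rule, where the earlier raster in the lock list wins. It illustrates the idea only and is not how the imagery service implements mosaicking. None stands for NoData, and the helper name is hypothetical.

```python
from typing import Dict, List, Optional, Sequence

Grid = List[List[Optional[int]]]

def mosaic_first(scenes: Dict[int, Grid], lock_order: Sequence[int]) -> Grid:
    """Mosaic same-sized scenes keyed by OBJECTID: each output pixel takes the first
    non-NoData value found when visiting the scenes in lock_order."""
    template = scenes[lock_order[0]]
    rows, cols = len(template), len(template[0])
    out: Grid = [[None] * cols for _ in range(rows)]
    for object_id in lock_order:           # only locked rasters participate
        scene = scenes[object_id]
        for r in range(rows):
            for c in range(cols):
                if out[r][c] is None and scene[r][c] is not None:
                    out[r][c] = scene[r][c]
    return out
```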

landsat.mosaic_by(method="LockRaster",lock_rasters=[2360748,2365229])

mosaic = apply(landsat, 'Natural Color with DRA')

mosaic

We clip the mosaicked raster to the boundary of Saharanpur district, our area of interest, using the clip function.
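To make the clip step concrete, here is a minimal pure-Python sketch that keeps a pixel only if its centre falls inside the boundary polygon, using the even-odd (ray casting) rule. The cell-centre rule, the upper-left origin convention and the helper names are assumptions for illustration. The clip raster function itself runs on the server.

```python
def point_in_polygon(x, y, ring):
    """Even-odd (ray casting) test: is (x, y) inside the closed ring [(x0, y0), ...]?"""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def clip_grid(grid, origin_x, origin_y, cell, ring):
    """Set cells whose centres fall outside ring to None (NoData).
    (origin_x, origin_y) is the upper-left corner; rows increase downward."""
    out = []
    for r, row in enumerate(grid):
        cy = origin_y - (r + 0.5) * cell
        out.append([v if point_in_polygon(origin_x + (c + 0.5) * cell, cy, ring) else None
                    for c, v in enumerate(row)])
    return out
```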

saharanpur_clip = clip(landsat, saharanpur_geom)

saharanpur_clip.extent = area['extent']

arcgis.env.analysis_extent = area['extent']

saharanpur_clip

Visualize the mosaic and training points on map

m = gis.map('Saharanpur, India')

m.add_layer(mosaic)

m.add_layer(saharanpur)

m.add_layer(data)

m.legend = True

m

Classification

With the Landsat layer and the training samples, we are now ready to train a support vector machine (SVM) model using the train_classifier function.
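For intuition about what an SVM optimises, the sketch below trains a linear, two-class SVM by full-batch subgradient descent on the L2-regularised hinge loss. It is a stand-in, not the algorithm train_classifier runs: the server-side SVM handles 5 classes, and its kernel and solver are not described here. A one-vs-rest wrapper around this function would be one way to extend it to 5 classes. The two features per sample are hypothetical band values.

```python
from typing import List, Sequence

def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, lr: float = 0.5, epochs: int = 500) -> List[float]:
    """Minimise lam/2 * ||w||^2 + mean(max(0, 1 - y_i * w.x_i)) by full-batch
    subgradient descent. A constant 1 is appended to each sample so the last
    weight acts as a (regularised) bias term. Labels must be +1 or -1."""
    Xa = [list(x) + [1.0] for x in X]
    n, d = len(Xa), len(Xa[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [lam * wj for wj in w]          # gradient of the L2 term
        for xi, yi in zip(Xa, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            if margin < 1:                     # hinge loss is active for this sample
                for j in range(d):
                    grad[j] -= yi * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Classify one sample by the sign of its score."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1
```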

classifier_definition = train_classifier(input_raster=saharanpur_clip,

input_training_sample_json=data.layers[0].query().to_json,

classifier_parameters={"method":"svm",

"params":{"maxSampleClass":100}},

gis=gis)

Now that we have the model, we can apply it to the Landsat image of Saharanpur district to make predictions. The classifier assigns each pixel of the image to one of 5 classes: Urban, Forest, Agricultural, Water and Wasteland. We use the classify function to perform the classification.

classified_output = classify(input_raster=saharanpur_clip,

input_classifier_definition=classifier_definition)

Now let's visualize the classification result using the colormap defined below.

cmap = colormap(classified_output.layers[0],

colormap=[[0, 255, 0, 0],

[1, 15, 242, 45],

[2, 255, 240, 10],

[3, 0, 0, 255],

[4, 176, 176, 167]])

map2 = gis.map('Saharanpur, India')

map2.add_layer(cmap)

map2.legend = True

map2

Here, '0', '1', '2', '3' and '4' represent pixels of the 'Urban', 'Forest', 'Agricultural', 'Water' and 'Wasteland' classes, respectively.

Comparing the prediction with the Landsat image, we can see that the model performs reasonably well and clearly identifies the urban settlements.
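The colormap list used above is a lookup table from class value to RGB colour. Here is a small sketch of that mapping applied to a hypothetical grid of class values. Values without an entry become None, and the helper names are illustrative.

```python
COLORMAP = [[0, 255, 0, 0], [1, 15, 242, 45], [2, 255, 240, 10],
            [3, 0, 0, 255], [4, 176, 176, 167]]
CLASS_NAMES = {0: 'Urban', 1: 'Forest', 2: 'Agricultural', 3: 'Water', 4: 'Wasteland'}

def apply_colormap(class_grid, colormap):
    """Replace each class value with its (R, G, B) tuple; unmapped values become None."""
    lookup = {value: (r, g, b) for value, r, g, b in colormap}
    return [[lookup.get(v) for v in row] for row in class_grid]

def legend(colormap, names):
    """Map class names to their RGB colours, e.g. for a map legend."""
    return {names[value]: (r, g, b) for value, r, g, b in colormap}
```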

Mask settlements out of the classified raster

Each pixel of the classified raster is assigned to one of 5 classes, but we need only the pixels belonging to the Urban class. We first retrieve the classified raster item from the portal.

classified_output = gis.content.search('Classify_AS81ZD')[0]

classified_output

Since we only want settlement pixels, we mask out all other pixels of the classified raster by keeping only those with class value 0, which represents the Urban class.
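The mask call below keeps values that fall inside included_ranges. Assuming that list is read as inclusive [low, high] pairs, so that [0, 0] keeps only class 0, the operation amounts to the following sketch. mask_to_ranges is a hypothetical helper, and None stands for NoData.

```python
def mask_to_ranges(grid, included_ranges, no_data=None):
    """Keep pixels whose value lies in any inclusive [low, high] pair of the flat
    list included_ranges (e.g. [0, 0] or [0, 0, 3, 4]); others become no_data."""
    pairs = list(zip(included_ranges[0::2], included_ranges[1::2]))
    return [[v if any(low <= v <= high for low, high in pairs) else no_data
             for v in row]
            for row in grid]
```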

masked = colormap(mask(classified_output.layers[0],

no_data_values =[],

included_ranges =[0,0]),

colormap=[[0, 255, 0, 0]],

astype='u8')

masked.extent = area['extent']

masked

We can save the masked raster containing the settlements to an imagery layer. This uses distributed raster analytics: it performs the processing at the source pixel level and creates a new raster product.

masked = masked.save()

We can now convert the masked pixels to polygons using the to_features function.
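Converting a raster to polygons starts by grouping connected cells that share a value. Each group then becomes one polygon whose outline is traced and, with simplify=True, smoothed. The sketch below shows only the grouping step, using 4-connectivity and breadth-first search. Whether to_features uses 4- or 8-connectivity is an assumption here, and label_regions is a hypothetical helper.

```python
from collections import deque

def label_regions(grid, background=None):
    """Label 4-connected regions of equal, non-background values using
    breadth-first search. Returns (labels, count); background cells get label 0."""
    rows, cols = len(grid), len(grid[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == background or labels[r][c]:
                continue
            count += 1                      # start a new region
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                cr, cc = queue.popleft()
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not labels[nr][nc]
                            and grid[nr][nc] == grid[cr][cc]):
                        labels[nr][nc] = count
                        queue.append((nr, nc))
    return labels, count
```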

urban_item = masked.layers[0].to_features(output_name='masked_polygons' + str(datetime.now().microsecond) ,

output_type='Polygon',

field='Value',

simplify=True,

gis=gis)

urban_layer = urban_item.layers[0]

m2 = gis.map('Saharanpur, India')

m2.legend = True

m2.add_layer(urban_item)

m2

The settlement polygons contain many false positives, mainly areas near the river bed that are confused with settlements.

These false positives were removed by post-processing in ArcGIS Pro. The cleaned-up results of the supervised classification, which are the final detected settlements, are shown below.

settlement_poly = gis.content.search('settlement_polygons')[0]

settlement_poly

Deep Learning

The deep learning approach to classification needs more training data. We use the results obtained above after cleaning up the false positives from the SVM predictions, and we exported training chips for each of those polygons using ArcGIS Pro to train a deep learning model.

Export training data

Export training data using the 'Export Training Data For Deep Learning' tool; detailed documentation is available in the ArcGIS Pro tool reference.

- Set Landsat imagery as 'Input Raster'.

- Set the folder where you want to export the training data. It can be an existing folder, or the tool will create it for you.

- Set the settlement training polygons as input to the 'Input Feature Class Or Classified Raster' parameter.

- In 'Input Mask Polygons', we can set a mask layer that limits the export to areas that contain buildings. We created one by generating a 200 m by 200 m grid over the extent of the Urban Polygons layer and dissolving only the grid polygons that contained settlements into a single multipolygon feature.

- 'Tile Size X' & 'Tile Size Y' can be set to 224.

- Select 'Classified Tiles' as the 'Metadata Format', because we are training a U-Net model.

- In the 'Environments' tab, set an appropriate 'Cell Size'. As we are performing the analysis on Landsat 8 imagery, we used a cell size of 30, which means 30 m in a projected coordinate system.
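Two quick calculations help when choosing these settings. A 224 by 224 chip at a 30 m cell size covers 224 × 30 = 6,720 m, about 6.7 km, on each side. The mask-polygon step can be sketched as selecting the 200 m grid cells that contain settlements. To keep the sketch short, it uses settlement points instead of polygons. mask_grid_cells is a hypothetical helper, not an ArcGIS tool.

```python
import math

def chip_footprint_m(tile_px: int, cell_size_m: float) -> float:
    """Ground width (m) covered by one square chip of tile_px pixels."""
    return tile_px * cell_size_m

def mask_grid_cells(xmin, ymin, xmax, ymax, cell_m, settlement_points):
    """Build a cell_m x cell_m grid over the extent and return the sorted
    (col, row) indices of cells that contain at least one settlement point.
    Points are assumed to lie inside the extent."""
    ncols = math.ceil((xmax - xmin) / cell_m)
    nrows = math.ceil((ymax - ymin) / cell_m)
    occupied = set()
    for x, y in settlement_points:
        # Clamp points on the max edge into the last column/row
        col = min(int((x - xmin) // cell_m), ncols - 1)
        row = min(int((y - ymin) // cell_m), nrows - 1)
        occupied.add((col, row))
    return sorted(occupied)
```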

Prepare data

We specify the path to our training data and a few hyperparameters:

- path: Path of the folder containing the training data.

- batch_size: Number of images the model trains on in each step of an epoch. It depends directly on the memory of your graphics card; 8 worked for us on an 11 GB GPU.

- imagery_type: Mandatory for multispectral data, as it enables the model to process multispectral imagery. It can be "landsat8", "sentinel2", "naip", "ms" or "multispectral".

import zipfile

from pathlib import Path

gis = GIS('home')

training_data = gis.content.get('b9602a576cd54bc6a8e0279a290537af')

training_data

filepath = training_data.download(file_name=training_data.name)

# Extract the zipped training chips next to the downloaded file
with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)

# The extracted folder is assumed to share the zip file's name, minus the extension
data_path = Path(filepath).with_suffix('')

# Prepare Data

data = prepare_data(data_path,

batch_size=8,

imagery_type='landsat8')

Out of the 11 bands in a Landsat 8 image, we want the weights for the RGB bands to be initialized with ImageNet weights, and the weights for the other bands to be initialized with the red band weights. We do this by setting the type_init environment parameter to 'red_band'.
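The red_band initialisation can be pictured as follows. The sketch represents each band's slice of the first convolution's weights as a flat list, assumes the Landsat 8 RGB bands are B4 (red), B3 (green) and B2 (blue), and copies the pretrained red weights to every other band. The pretrained values and the helper name are hypothetical. Real layer weights are 4-D tensors, and the library may apply further scaling that is not shown.

```python
LANDSAT8_BANDS = [f'B{i}' for i in range(1, 12)]   # Landsat 8 bands B1..B11

def init_first_conv(pretrained_rgb, band_names, rgb_bands=('B4', 'B3', 'B2')):
    """Return per-band weights: the RGB bands reuse the pretrained R, G and B
    weights, and every other band gets a copy of the pretrained red weights."""
    red, green, blue = pretrained_rgb['R'], pretrained_rgb['G'], pretrained_rgb['B']
    source = dict(zip(rgb_bands, (red, green, blue)))
    # list(...) makes an independent copy for each band
    return {band: list(source.get(band, red)) for band in band_names}
```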

arcgis.env.type_init = 'red_band'

Visualize training data

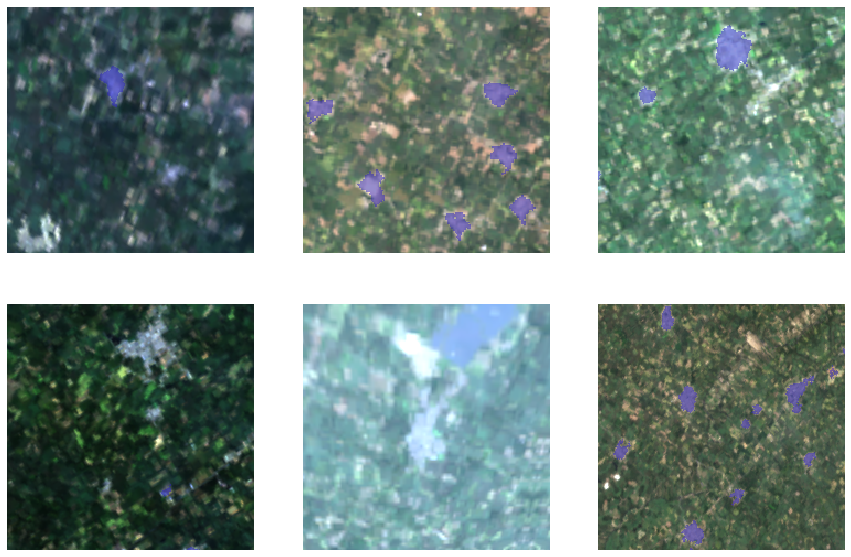

The code below shows a few samples of our data with the same symbology as in ArcGIS Pro.

- rows: Number of rows of samples to display.

- alpha: Controls the opacity of the labels (classified imagery) over the background imagery.

- statistics_type: The statistics used for dynamic range adjustment (DRA).

data.show_batch(rows=2, alpha=0.7, statistics_type='DRA')

Here, the light blue patches show the labelled settlements.
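The two display parameters can be illustrated on single pixels. The sketch shows a linear min-max stretch, used here as a simple stand-in for dynamic range adjustment (which computes its statistics from the displayed data), and alpha compositing of a label colour over the background. The helper names and values are hypothetical.

```python
def minmax_stretch(values, out_max=255):
    """Linearly map the minimum of values to 0 and the maximum to out_max."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # flat input: nothing to stretch
        return [0 for _ in values]
    return [round((v - lo) * out_max / (hi - lo)) for v in values]

def blend(background, label_color, alpha):
    """Composite label_color over background: alpha * label + (1 - alpha) * background."""
    return tuple(round(alpha * lab + (1 - alpha) * bg)
                 for lab, bg in zip(label_color, background))
```

With alpha=0.7, as in the show_batch call above, the label colour contributes 70% of each displayed pixel where a label is present.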

Load model architecture

We use U-Net [4], a well-recognized image segmentation algorithm, for our land cover classification. U-Net is designed like an autoencoder: it has an encoding (“contracting”) path paired with a decoding (“expanding”) path, which gives it its “U” shape. However, unlike an autoencoder, which reconstructs its input, U-Net predicts a pixel-wise segmentation map of the input image, and unlike an image classifier, it does not assign a single class to the input image as a whole. For each pixel in the original image, it asks: “To which class does this pixel belong?” U-Net passes the feature maps from each level of the contracting path over to the analogous level in the expanding path. These skip connections are similar to residual connections in a ResNet-type model, and they allow the classifier to consider features at various scales and complexities to make its decision.
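The contracting and expanding paths can be traced with plain shape arithmetic. The sketch below follows a simplified U-Net with "same"-padded convolutions, 4 pooling levels and a base width of 64 channels. The original paper uses unpadded convolutions, and the network UnetClassifier builds around its backbone will have different layer widths, so the numbers are illustrative only. The input size must be divisible by 2**depth.

```python
def unet_shapes(size=224, base=64, depth=4):
    """Trace (height, width, channels) through a simplified U-Net: each encoder
    level halves the spatial size and doubles the channels; each decoder level
    upsamples x2 and concatenates the skip connection from the matching level."""
    encoder = []
    h, ch = size, base
    for _ in range(depth):
        encoder.append((h, h, ch))      # feature map saved for the skip connection
        h, ch = h // 2, ch * 2          # 2x2 max-pool, then convs double channels
    bottleneck = (h, h, ch)
    decoder = []
    for skip_h, _, skip_ch in reversed(encoder):
        h, ch = h * 2, ch // 2          # up-convolution halves the channels
        assert h == skip_h              # sizes must match to concatenate
        decoder.append((h, h, ch + skip_ch))  # channels right after concatenation
        ch = skip_ch                    # convs reduce back to the skip's width
    return encoder, bottleneck, decoder
```

The last decoder level is back at the input resolution, which is what lets U-Net emit one class prediction per pixel.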

# Create Unet Model

model = UnetClassifier(data)

Train the model

By default, the U-Net model uses ImageNet weights, which are trained on 3-band imagery. Since we are using the multispectral support of the ArcGIS API for Python to train the model on 11-band Landsat 8 imagery, unfreezing makes the backbone trainable so that it can learn to extract features from multispectral data.

Before training, we unfreeze the model so that all of its layers, including the backbone, are updated during training.

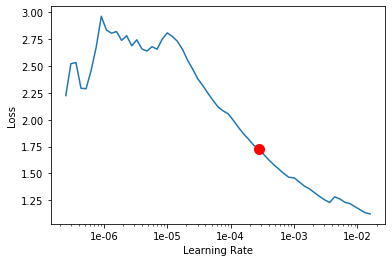

model.unfreeze()

The learning rate is one of the most important hyperparameters in model training. Here, we explore a range of learning rates to help us choose a good one.

# Find Learning Rate

model.lr_find()

0.0002754228703338166

Based on the learning rate plot above, lr_find() suggests a learning rate of about 0.000275 (0.0002754228703338166) for our training data. We train our model with a learning rate of the same order, 0.000229.
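A learning rate range test trains briefly while increasing the learning rate geometrically and records the loss at each step. One common heuristic then suggests the rate at which the loss falls fastest. The sketch below implements that heuristic on a hypothetical loss curve. It is not guaranteed to match the exact rule lr_find() uses, which may, for example, smooth the losses first.

```python
import math

def lr_schedule(start=1e-7, end=10.0, steps=100):
    """Geometrically spaced learning rates from start to end."""
    ratio = (end / start) ** (1 / (steps - 1))
    return [start * ratio ** i for i in range(steps)]

def steepest_descent_lr(lrs, losses):
    """Return the learning rate at which the loss falls fastest per unit log(lr)."""
    best_i, best_slope = 1, float('inf')
    for i in range(1, len(lrs)):
        slope = (losses[i] - losses[i - 1]) / (math.log(lrs[i]) - math.log(lrs[i - 1]))
        if slope < best_slope:
            best_i, best_slope = i, slope
    return lrs[best_i]
```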

We fit the model for 100 epochs. One epoch means the model sees the complete training set once.

# Training

model.fit(epochs=100, lr=0.00022908676527677726);

| epoch | train_loss | valid_loss | accuracy | time |

|---|---|---|---|---|

| 0 | 1.372514 | 0.501806 | 0.923853 | 00:17 |

| 1 | 0.785702 | 0.242798 | 0.924786 | 00:14 |

| 2 | 0.505967 | 0.173697 | 0.954149 | 00:14 |

| 3 | 0.365835 | 0.170369 | 0.960380 | 00:15 |

| 4 | 0.273482 | 0.156840 | 0.961279 | 00:15 |

| 5 | 0.217028 | 0.121626 | 0.959215 | 00:15 |

| 6 | 0.170534 | 0.096731 | 0.968844 | 00:15 |

| 7 | 0.140686 | 0.090505 | 0.972984 | 00:17 |

| 8 | 0.123734 | 0.089614 | 0.966222 | 00:17 |

| 9 | 0.109304 | 0.088085 | 0.966157 | 00:17 |

| 10 | 0.105920 | 0.073659 | 0.975945 | 00:17 |

| 11 | 0.093554 | 0.066235 | 0.974343 | 00:17 |

| 12 | 0.085037 | 0.065709 | 0.973596 | 00:17 |

| 13 | 0.089634 | 0.091828 | 0.973746 | 00:17 |

| 14 | 0.093221 | 0.094544 | 0.967697 | 00:17 |

| 15 | 0.099859 | 0.093586 | 0.965518 | 00:18 |

| 16 | 0.091674 | 0.056763 | 0.981256 | 00:16 |

| 17 | 0.087382 | 0.058476 | 0.977686 | 00:16 |

| 18 | 0.080962 | 0.051307 | 0.981285 | 00:16 |

| 19 | 0.076281 | 0.058740 | 0.976651 | 00:16 |

| 20 | 0.071987 | 0.051091 | 0.980849 | 00:17 |

| 21 | 0.076398 | 0.055713 | 0.978188 | 00:16 |

| 22 | 0.073460 | 0.054250 | 0.981253 | 00:16 |

| 23 | 0.073723 | 0.061181 | 0.976037 | 00:16 |

| 24 | 0.070814 | 0.050184 | 0.982208 | 00:16 |

| 25 | 0.068405 | 0.050781 | 0.982304 | 00:16 |

| 26 | 0.068676 | 0.052628 | 0.980515 | 00:17 |

| 27 | 0.068446 | 0.059368 | 0.979801 | 00:16 |

| 28 | 0.066732 | 0.052406 | 0.981607 | 00:16 |

| 29 | 0.069364 | 0.057514 | 0.981750 | 00:17 |

| 30 | 0.069280 | 0.094394 | 0.968493 | 00:16 |

| 31 | 0.071962 | 0.070343 | 0.973344 | 00:16 |

| 32 | 0.067915 | 0.053873 | 0.981427 | 00:16 |

| 33 | 0.068014 | 0.069022 | 0.978783 | 00:17 |

| 34 | 0.070144 | 0.053639 | 0.980159 | 00:17 |

| 35 | 0.068699 | 0.050212 | 0.981870 | 00:17 |

| 36 | 0.062960 | 0.048793 | 0.982042 | 00:16 |

| 37 | 0.059789 | 0.048675 | 0.981470 | 00:17 |

| 38 | 0.067027 | 0.054567 | 0.979936 | 00:17 |

| 39 | 0.067193 | 0.049769 | 0.982489 | 00:17 |

| 40 | 0.065255 | 0.053106 | 0.979787 | 00:17 |

| 41 | 0.064192 | 0.059477 | 0.977268 | 00:18 |

| 42 | 0.063501 | 0.054379 | 0.981351 | 00:18 |

| 43 | 0.061919 | 0.052928 | 0.980945 | 00:17 |

| 44 | 0.058338 | 0.049031 | 0.981407 | 00:17 |

| 45 | 0.058045 | 0.050386 | 0.983060 | 00:16 |

| 46 | 0.059159 | 0.050975 | 0.980992 | 00:16 |

| 47 | 0.057946 | 0.049633 | 0.981475 | 00:17 |

| 48 | 0.055635 | 0.046148 | 0.982935 | 00:17 |

| 49 | 0.054805 | 0.046591 | 0.982512 | 00:17 |

| 50 | 0.055399 | 0.048654 | 0.982924 | 00:18 |

| 51 | 0.061026 | 0.049713 | 0.982989 | 00:17 |

| 52 | 0.060342 | 0.060345 | 0.982419 | 00:17 |

| 53 | 0.057911 | 0.047378 | 0.982254 | 00:17 |

| 54 | 0.057208 | 0.045288 | 0.983346 | 00:17 |

| 55 | 0.056603 | 0.053126 | 0.980212 | 00:16 |

| 56 | 0.055458 | 0.047008 | 0.983352 | 00:16 |

| 57 | 0.055016 | 0.047503 | 0.983466 | 00:16 |

| 58 | 0.055864 | 0.046239 | 0.983545 | 00:16 |

| 59 | 0.053660 | 0.044220 | 0.983625 | 00:16 |

| 60 | 0.053831 | 0.050546 | 0.980321 | 00:16 |

| 61 | 0.053539 | 0.046488 | 0.983567 | 00:16 |

| 62 | 0.052327 | 0.044533 | 0.983854 | 00:16 |

| 63 | 0.052148 | 0.045945 | 0.982770 | 00:16 |

| 64 | 0.053229 | 0.045045 | 0.983302 | 00:17 |

| 65 | 0.054815 | 0.050580 | 0.981261 | 00:17 |

| 66 | 0.054848 | 0.049836 | 0.981004 | 00:17 |

| 67 | 0.053770 | 0.045560 | 0.983329 | 00:17 |

| 68 | 0.051000 | 0.044023 | 0.983567 | 00:17 |

| 69 | 0.050662 | 0.044535 | 0.983679 | 00:16 |

| 70 | 0.050817 | 0.043398 | 0.983903 | 00:17 |

| 71 | 0.050461 | 0.042922 | 0.984024 | 00:17 |

| 72 | 0.051408 | 0.044830 | 0.983668 | 00:17 |

| 73 | 0.049905 | 0.047697 | 0.982204 | 00:16 |

| 74 | 0.050102 | 0.045514 | 0.983538 | 00:17 |

| 75 | 0.049953 | 0.047218 | 0.982307 | 00:16 |

| 76 | 0.049459 | 0.045315 | 0.983307 | 00:16 |

| 77 | 0.048964 | 0.043759 | 0.983878 | 00:16 |

| 78 | 0.048636 | 0.044720 | 0.983425 | 00:16 |

| 79 | 0.047818 | 0.044903 | 0.983352 | 00:16 |

| 80 | 0.047709 | 0.045542 | 0.983003 | 00:16 |

| 81 | 0.048966 | 0.045046 | 0.983374 | 00:17 |

| 82 | 0.047563 | 0.046367 | 0.982537 | 00:17 |

| 83 | 0.048292 | 0.044036 | 0.983857 | 00:16 |

| 84 | 0.047185 | 0.044230 | 0.983849 | 00:16 |

| 85 | 0.045970 | 0.043959 | 0.983448 | 00:17 |

| 86 | 0.047250 | 0.043483 | 0.984002 | 00:17 |

| 87 | 0.047400 | 0.044048 | 0.983784 | 00:17 |

| 88 | 0.047004 | 0.043771 | 0.983819 | 00:17 |

| 89 | 0.048961 | 0.044420 | 0.983454 | 00:17 |

| 90 | 0.048994 | 0.044171 | 0.983506 | 00:17 |

| 91 | 0.048643 | 0.044221 | 0.983580 | 00:17 |

| 92 | 0.048048 | 0.044252 | 0.983661 | 00:16 |

| 93 | 0.046049 | 0.043951 | 0.983676 | 00:17 |

| 94 | 0.046517 | 0.043798 | 0.983755 | 00:17 |

| 95 | 0.045587 | 0.043757 | 0.983717 | 00:16 |

| 96 | 0.047089 | 0.043693 | 0.983742 | 00:17 |

| 97 | 0.045898 | 0.043710 | 0.983840 | 00:17 |

| 98 | 0.044707 | 0.043701 | 0.983827 | 00:16 |

| 99 | 0.044915 | 0.043823 | 0.983859 | 00:17 |

model.mIOU()

{'0': 0.9821453002296315, '1': 0.7511354937467614}

Both the training and validation losses decrease over training, and the validation loss stays close to the training loss, which indicates that the model generalizes to unseen data rather than overfitting. The model classifies pixels as settlement or non-settlement with an accuracy of about 0.98. As the background class dominates, the per-class IoU is more informative: about 0.98 for class '0' (background) and 0.75 for class '1' (settlements). Once we are satisfied with the results, we can save the model.
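Pixel accuracy and per-class IoU can tell different stories when one class dominates. The sketch below computes both from flat lists of hypothetical ground-truth and predicted labels (0 = background, 1 = settlement). Two wrong pixels out of 20 still give 90% accuracy, but only 0.6 IoU for the settlement class. The helper names are illustrative.

```python
def per_class_iou(truth, pred, classes):
    """IoU = intersection / union of the pixels labelled k in truth and in pred."""
    result = {}
    for k in classes:
        inter = sum(1 for t, p in zip(truth, pred) if t == k and p == k)
        union = sum(1 for t, p in zip(truth, pred) if t == k or p == k)
        result[k] = inter / union if union else float('nan')
    return result

def pixel_accuracy(truth, pred):
    """Fraction of pixels whose predicted label equals the ground truth."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)
```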

Save the model

We now save the model we just trained as a Deep Learning Package ('.dlpk'), the standard format used to deploy deep learning models on the ArcGIS platform. UnetClassifier models can be deployed with the 'Classify Pixels Using Deep Learning' tool, available in ArcGIS Pro as well as ArcGIS Enterprise. For this sample, we use the model in ArcGIS Pro to extract settlements.

We use the save() method to save the model. By default, it is saved to a 'models' folder inside the training data folder.

model.save('100e')

Load an intermediate model to train it further

If we need to further train an already saved model we can load it again using the code below and repeat the earlier mentioned steps of training.

# Load Model from previous saved files

# model.load('100e')

Preview results

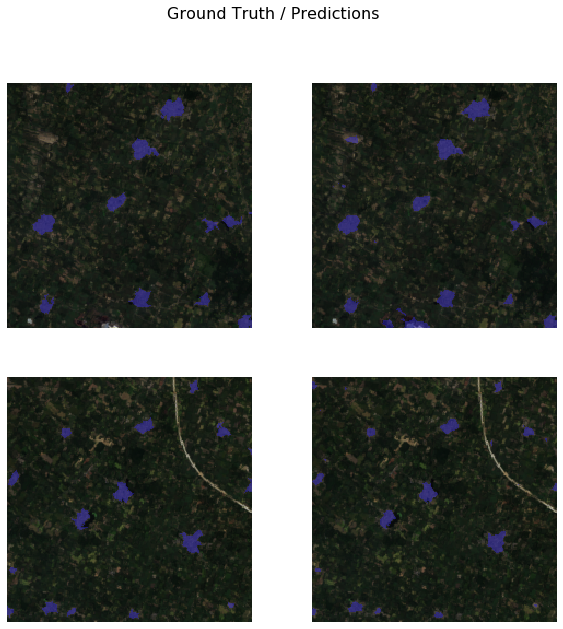

The code below picks a few random samples and shows the ground truth and the corresponding model predictions side by side. This allows us to validate the results of our model in the notebook itself. Once satisfied, we can save the model and use it further in our workflow.

model.show_results(rows=2)

Deploy model and extract settlements

The model saved in the previous step can be used to generate a classified raster with the 'Classify Pixels Using Deep Learning' tool. The classified raster is then regularised and finally converted to a vector polygon layer. The regularisation step uses ArcGIS geoprocessing tools to remove unwanted artifacts from the output.

Generate a classified raster

In this step, we will generate a classified raster using 'Classify Pixels Using Deep Learning' tool available in both ArcGIS Pro and ArcGIS Enterprise.

- Input Raster: The raster layer from which we want to extract settlements.

- Model Definition: The '.emd' file located inside the saved model in the 'models' folder.

- Padding: The 'Input Raster' is tiled, and the deep learning model classifies each tile separately before producing the final 'Output Classified Raster'. This can cause unwanted artifacts along tile edges, where the model has little context to predict accurately. Padding supplies extra pixels along the tile edges, which helps the model predict better.

- Cell Size: Should be close to the cell size at which we trained the model, which we specified in the Export training data step.

- Processor Type: Controls whether the system's GPU or CPU is used to classify pixels. By default, the GPU is used if available.

- Parallel Processing Factor: Scales the tool on either CPU or GPU. It specifies how many CPU cores (for CPU-based processing) or how many GPUs (for GPU-based processing) the operation is spread across.
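The padding idea can be sketched as a tiling loop: each tile is read with extra context pixels on every side, but only the central part is kept in the output. This is a generic illustration with hypothetical names, not the exact tiling scheme of 'Classify Pixels Using Deep Learning'.

```python
def tile_windows(width, height, tile, padding):
    """Yield (read_window, keep_window) pairs of (x0, y0, x1, y1) pixel boxes.
    Each read window extends the tile by `padding` pixels on every side (clamped
    to the image); only the keep window is written to the output, so predictions
    near tile seams are made with neighbouring context."""
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            x1, y1 = min(x0 + tile, width), min(y0 + tile, height)
            read = (max(x0 - padding, 0), max(y0 - padding, 0),
                    min(x1 + padding, width), min(y1 + padding, height))
            yield read, (x0, y0, x1, y1)
```

The keep windows tile the image without overlap, while the read windows overlap by up to twice the padding.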

The output of this tool is a classified raster containing both background and settlement pixels. Pixels of the background class are shown in green, and pixels of the settlement class in purple.

A subset of predictions by our model

Change the symbology of the raster by setting the second class of the classified pixels to 'No color'.

After this step, we will get a masked raster which will look like the screenshot below.

We can now run 'Raster to Polygon' tool in ArcGIS Pro to convert this masked raster to polygons.

Results obtained from Deep Learning approach are ready to be analyzed.

Deep Learning Results

Conclusion

This notebook shows how traditional algorithms such as support vector machines can be used to generate training data of sufficient quality for deep learning models. After cleaning, this data can serve as the training data a deep learning model needs to produce accurate results over a much larger extent.

References

[3] https://developers.arcgis.com/python/sample-notebooks/land-cover-classification-using-unet/

[4] Olaf Ronneberger, Philipp Fischer, Thomas Brox: U-Net: Convolutional Networks for Biomedical Image Segmentation, 2015; arXiv:1505.04597.