A comma-separated values (CSV) file (.csv) is a type of delimited text file that uses commas or other characters to separate fields. CSV data can contain spatial data as numbers (e.g., longitude and latitude), as text (e.g., Well-Known Text (WKT), GeoJSON, or EsriJSON), or as encoded binary values.

After you load one or more CSV files as a Spark DataFrame, you can create a geometry column and define its spatial reference using GeoAnalytics for Microsoft Fabric SQL functions. For example, if you had points stored as WKT strings, you could call ST_PointFromText to create a point column from the string column and set its spatial reference. For more information, see Geometry.

After creating the geometry column and defining its spatial reference, you can perform spatial analysis and visualization using the SQL functions and tools available in GeoAnalytics for Microsoft Fabric. You can also write a Spark DataFrame to CSV files for storage or for exchange with other systems.

Reading CSV Files in Spark

The following Python examples show two equivalent ways to load CSV files into a Spark DataFrame.

```python
# Option 1: use the csv() shortcut on the DataFrameReader
df = spark.read.option("header", "true").csv("path/to/your/file.csv")

# Option 2: name the format explicitly and call load()
df = spark.read.format("csv").option("header", "true").load("path/to/your/file.csv")
```

Note that option("header", "true") tells Spark to use the first row as column headers.

Options for reading CSV files

Here are some common options for reading CSV files in Spark:

| DataFrameReader option | Example | Description |
|---|---|---|
| header | .option("header", True) | Interpret the first row of the CSV files as a header. |
| inferSchema | .option("inferSchema", True) | Infer the column schema when reading CSV files. Without this option or an explicit schema, Spark reads all columns as strings. |
| delimiter | .option("delimiter", ";") | Read CSV files with the specified delimiter. The default delimiter is ",". |

For a complete list of options that can be used with the CSV data source in Spark, see the Spark documentation.

Specifying a Schema

To define the schema explicitly, you can use StructType and StructField in both PySpark and Scala.

```python
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

# Declare each column's name, type, and nullability instead of letting Spark infer them.
schema = StructType([
    StructField("name", StringType(), True),
    StructField("age", IntegerType(), True),
    StructField("city", StringType(), True)
])

df = spark.read.option("header", "true") \
    .schema(schema) \
    .csv("path/to/your/file.csv")
```

Reading multiple CSV Files

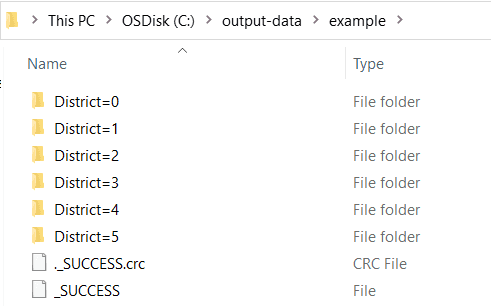

Spark can infer partition columns from directory names that follow the column=value format. For instance, if your CSV files are organized in subdirectories named District=0, District=2, and so on, Spark recognizes District as a partition column when reading the data. When a query filters on District, Spark can then read only the matching subdirectories instead of scanning every file.

When a directory of CSV data has subdirectories that are not named in column=value format, Spark doesn't read those subdirectories in bulk from the root path alone. Instead, add a glob pattern to the end of the root path.

Note that all subdirectories must contain CSV files in order to be read in bulk.

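```python
# The glob matches the CSV files inside each immediate subdirectory of the root path
df = spark.read.option("header", "true").csv("path/to/your/csv/files/*/*.csv")
```

Writing DataFrames to CSV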

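```python
# Option 1: use the csv() shortcut on the DataFrameWriter
df.write.option("header", "true").csv("path/to/output/directory")

# Option 2: name the format explicitly and call save()
df.write.format("csv").option("header", "true").save("path/to/output/directory")
```

Spark writes a directory containing one part file per DataFrame partition, not a single CSV file.

Here are some common options for writing CSV files in Spark: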

| DataFrameWriter option | Example | Description |
|---|---|---|
| partitionBy | .partitionBy("date") | Partition the output by the given column name. This example partitions the output CSV files by values in the date column. |
| mode | .mode("overwrite") | Overwrite existing data in the specified path. Other available modes are append, error (the default), and ignore. |
| header | .option("header", True) | Export the Spark DataFrame with a header row. |

Usage notes

- The CSV data source doesn't support loading or saving point, line, polygon, or geometry columns.
- The spatial reference of a geometry column always needs to be set when importing geometry data from CSV files.

- Consider explicitly saving the spatial reference in the CSV, either as a separate column or as part of the geometry column's name. To read more about best practices for working with spatial references in DataFrames, see the documentation on Coordinate Systems and Transformations.

- Be careful when saving to one CSV file using .coalesce(1) with large datasets, because a single task then writes all of the data. Consider partitioning large data by an attribute column instead, which makes subdirectories easy to read and filter and improves performance.