ST_SquareBins takes a geometry column and a numeric bin size and returns an array column. The result array contains square bins that cover the spatial extent of each record in the input column. The specified bin size determines the height of each bin and is in the same units as the input geometry. You can optionally specify a numeric value for padding, which conceptually applies a buffer of the specified distance to the input geometry before creating the square bins.

| Language | Syntax |
|---|---|
| Python | `square_bins` |
| SQL | `ST_SquareBins` |
| Scala | `squareBins` |

For more details, go to the GeoAnalytics for Microsoft Fabric API reference for square_bins.
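To make the roles of the bin size and padding more concrete, the following plain-Python sketch enumerates the grid cells that overlap a padded bounding box. It is an illustration only, not the library's implementation: `covering_square_bins` is a hypothetical helper, the bounding box stands in for the "spatial extent", and the grid is assumed to be anchored at the origin. The real function may return fewer bins for shapes that do not fill their bounding box.

```python
import math

def covering_square_bins(coords, bin_size, padding=0.0):
    """Hypothetical helper: list (col, row, (xmin, ymin, xmax, ymax)) for each
    square grid cell that overlaps the padded bounding box of `coords`."""
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    # Expand the bounding box by the padding distance on every side.
    xmin, xmax = min(xs) - padding, max(xs) + padding
    ymin, ymax = min(ys) - padding, max(ys) + padding
    # Assumption: cells are aligned to a grid anchored at (0, 0).
    # A cell that only touches the extent at its lower edge is still included.
    col0, col1 = math.floor(xmin / bin_size), math.floor(xmax / bin_size)
    row0, row1 = math.floor(ymin / bin_size), math.floor(ymax / bin_size)
    return [
        (c, r, (c * bin_size, r * bin_size, (c + 1) * bin_size, (r + 1) * bin_size))
        for c in range(col0, col1 + 1)
        for r in range(row0, row1 + 1)
    ]

# A 10 x 10 square with bin size 5 and padding 2:
# the padded extent spans 0 to 14 on both axes, so it overlaps a 3 x 3 block of cells.
square = [(2, 2), (2, 12), (12, 12), (12, 2)]
bins = covering_square_bins(square, 5, 2)
print(len(bins))  # 9
```

Without padding, the same square spans 2 to 12 and still overlaps columns and rows 0 through 2, so padding changes the result only when it pushes the extent across a cell boundary.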

Python and SQL Examples

```python
from geoanalytics_fabric.sql import functions as ST, Polygon
from pyspark.sql import functions as F

# Three sample polygons: one square and two triangles
data = [
    (Polygon([[[2,2],[2,12],[12,12],[12,2]]]),),
    (Polygon([[[20,0],[30,20],[40,0]]]),),
    (Polygon([[[20,30],[25,35],[30,30]]]),)
]

df = spark.createDataFrame(data, ["polygon"])
```

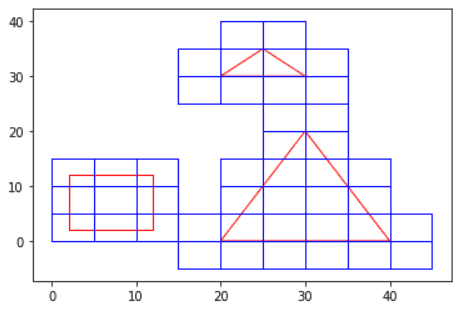

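```python
# Create square bins with a bin size of 5 and a padding of 2
bins = df.select(ST.square_bins("polygon", 5, 2).alias("bins"))

# Explode each bin array into rows and convert every bin to its geometry
bin_geometries = bins.select(F.explode("bins").alias("bin")).select(ST.bin_geometry("bin"))

# Plot the input polygons in red and the bins in blue on the same axes
ax = df.st.plot("polygon", facecolor="none", edgecolor="red")
bin_geometries.st.plot(ax=ax, facecolor="none", edgecolor="blue")
```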

Scala Example

```scala
import com.esri.geoanalytics.geometry._
import com.esri.geoanalytics.sql.{functions => ST}
// Needed for the $"..." column syntax if your notebook has not already imported it
import spark.implicits._

case class PolygonRow(polygon: Polygon)

// Three sample polygons: one square and two triangles
val data = Seq(PolygonRow(Polygon(Point(2, 2), Point(2, 12), Point(12, 12), Point(12, 2))),
               PolygonRow(Polygon(Point(20, 0), Point(30, 20), Point(40, 0))),
               PolygonRow(Polygon(Point(20, 30), Point(25, 35), Point(30, 30))))

// Create square bins with a bin size of 5 and a padding of 2
val df = spark.createDataFrame(data)
                 .select(ST.squareBins($"polygon", 5, 2).alias("bins"))

df.select("bins").show()
```

```
+--------------------+
|                bins|
+--------------------+
|[bin#0, bin#1, bi...|
|[bin#17179869183,...|
|[bin#12884901893,...|
+--------------------+
```

Version table

| Release | Notes |
|---|---|
| 1.0.0-beta | Python, SQL, and Scala functions introduced |