TRK_SplitByDistanceGap takes a track column and a distance and returns an array of tracks. The result array contains the input track split into segments wherever two consecutive vertices are farther apart than the specified distance. At each such gap, the track is split by removing the segment between those two vertices.
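The splitting rule can be illustrated with a minimal pure-Python sketch that works on plain (x, y, m) tuples instead of Spark geometry columns. The function names are hypothetical and are not part of the GeoAnalytics API. The sketch also assumes a spherical Earth and measures each gap with the haversine formula. GeoAnalytics may compute distances differently, so results for gaps very close to the threshold could differ.

```python
import math

# Mean Earth radius in miles; the spherical model is an assumption of this sketch.
EARTH_RADIUS_MILES = 3958.8


def haversine_miles(p, q):
    """Great-circle distance in miles between two (x, y, m) vertices (x = lon, y = lat)."""
    lon1, lat1 = math.radians(p[0]), math.radians(p[1])
    lon2, lat2 = math.radians(q[0]), math.radians(q[1])
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def split_vertices_by_gap(vertices, max_gap_miles):
    """Hypothetical helper: split (x, y, m) vertices wherever consecutive vertices
    are more than max_gap_miles apart. The segment spanning the gap is dropped,
    so a piece can end up with a single vertex."""
    if not vertices:
        return []
    pieces = [[vertices[0]]]
    for prev, curr in zip(vertices, vertices[1:]):
        if haversine_miles(prev, curr) > max_gap_miles:
            pieces.append([curr])  # start a new piece; the gap segment is not kept
        else:
            pieces[-1].append(curr)
    return pieces


track = [(-117.27, 34.05, 1633455010),
         (-117.22, 33.91, 1633456062),
         (-116.96, 33.64, 1633457132)]
print(split_vertices_by_gap(track, 15))
# [[(-117.27, 34.05, 1633455010), (-117.22, 33.91, 1633456062)], [(-116.96, 33.64, 1633457132)]]
```

The first gap in this sample is about 10 miles and the second is about 24 miles. With a 15-mile threshold, only the second gap is removed, which leaves the last vertex as a one-vertex piece. The first track in the Scala example output on this page shows the same pattern.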

When specified with a unit, the distance can be defined using ST_CreateDistance or with a tuple containing a number and a unit string (for example, (10, "kilometers")).
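The following sketch shows what a (number, unit string) tuple denotes by normalizing it to meters. The helper and its unit table are hypothetical. The unit strings that GeoAnalytics actually accepts may differ from the keys used here. The conversion factors are the standard exact definitions (1 mile = 1609.344 m).

```python
# Hypothetical unit table; GeoAnalytics' accepted unit strings may differ.
METERS_PER_UNIT = {
    "meters": 1.0,
    "kilometers": 1000.0,
    "feet": 0.3048,
    "yards": 0.9144,
    "miles": 1609.344,
}


def distance_tuple_to_meters(distance):
    """Convert a (number, unit string) tuple such as (10, "kilometers") to meters."""
    value, unit = distance
    try:
        return float(value) * METERS_PER_UNIT[unit.lower()]
    except KeyError:
        raise ValueError(f"unsupported unit: {unit!r}") from None


print(distance_tuple_to_meters((10, "kilometers")))  # 10000.0
print(distance_tuple_to_meters((15, "miles")))       # roughly 24140 meters
```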

Tracks are linestrings that represent the change in an entity's location over time. Each vertex in the linestring has a timestamp (stored as the M-value) and the vertices are ordered sequentially.
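The track structure can be made concrete with two hypothetical Python helpers. The first parses a LINESTRING M well-known text string into (x, y, m) tuples. The second checks that the M-values (timestamps) are in sequential order. This is only an illustration. The parser handles just the simple form used on this page, and treating non-decreasing timestamps as valid is an assumption of this sketch, not documented GeoAnalytics behavior.

```python
import re


def parse_linestring_m(wkt):
    """Parse 'LINESTRING M (x y m, ...)' into a list of (x, y, m) float tuples."""
    match = re.fullmatch(r"\s*LINESTRING\s+M\s*\((.*)\)\s*", wkt, flags=re.IGNORECASE)
    if match is None:
        raise ValueError("expected a LINESTRING M geometry")
    vertices = []
    for token in match.group(1).split(","):
        x, y, m = (float(v) for v in token.split())  # raises ValueError if not 3 values
        vertices.append((x, y, m))
    return vertices


def is_sequential_track(vertices):
    """True if each vertex's M-value (timestamp) is not earlier than the previous one."""
    return all(a[2] <= b[2] for a, b in zip(vertices, vertices[1:]))


track = parse_linestring_m("LINESTRING M (-116.24 33.88 1633575234, -116.33 34.02 1633576336)")
print(track)                       # [(-116.24, 33.88, 1633575234.0), (-116.33, 34.02, 1633576336.0)]
print(is_sequential_track(track))  # True
```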

For more information on using tracks in GeoAnalytics for Microsoft Fabric, see the core concept topic on tracks.

| Function | Syntax |
|---|---|
| Python | split_by_distance_gap(track, distance) |
| SQL | TRK_SplitByDistanceGap(track, distance) |
| Scala | splitByDistanceGap(track, distance) |

For more details, go to the GeoAnalytics for Microsoft Fabric API reference for split_by_distance_gap.

Python and SQL Examples

from geoanalytics_fabric.sql import functions as ST

from geoanalytics_fabric.tracks import functions as TRK

from pyspark.sql import functions as F

data = [

("LINESTRING M (-117.27 34.05 1633455010, -117.22 33.91 1633456062," +\

" -116.96 33.64 1633457132, -116.85 33.81 1633457224)",),

("LINESTRING M (-116.89 33.96 1633575895, -116.71 34.01 1633576982, -116.66 34.08 1633577061)",),

("LINESTRING M (-116.24 33.88 1633575234, -116.33 34.02 1633576336)",)

]

df = spark.createDataFrame(data, ["wkt"]).withColumn("track", ST.line_from_text("wkt", srid=4326))

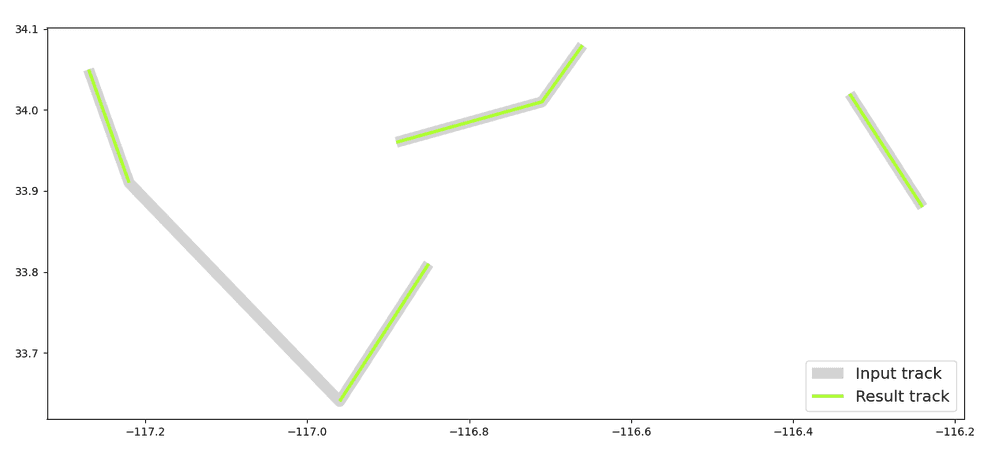

result = df.withColumn("split_by_distance_gap", TRK.split_by_distance_gap("track", (15, "miles")))

ax = result.st.plot("track", edgecolor="lightgrey", linewidths=10, figsize=(15, 8))

result.select(F.explode("split_by_distance_gap")).st.plot(ax=ax, edgecolor="greenyellow", linewidths=3)

ax.legend(['Input track','Result track'], loc='lower right', fontsize='x-large')

Scala Example

```scala
import com.esri.geoanalytics.sql.{functions => ST}
import com.esri.geoanalytics.sql.{trackFunctions => TRK}
import org.apache.spark.sql.{functions => F}
import spark.implicits._ // enables the $"column" syntax

case class LineRow(lineWkt: String)

val data = Seq(
  LineRow("LINESTRING M (-117.27 34.05 1633455010, -117.22 33.91 1633456062, -116.96 33.64 1633457132)"),
  LineRow("LINESTRING M (-116.89 33.96 1633575895, -116.71 34.01 1633576982, -116.66 34.08 1633577061)"),
  LineRow("LINESTRING M (-116.24 33.88 1633575234, -116.33 34.02 1633576336)"))

val df = spark.createDataFrame(data)
  .withColumn("track", ST.lineFromText($"lineWkt", F.lit(4326)))
  // The distance is passed as a struct of (value, unit), matching the Python (15, "miles") tuple.
  .withColumn("split_by_distance_gap", TRK.splitByDistanceGap($"track", F.struct(F.lit(15), F.lit("miles"))))
  // Produce one row per resulting track.
  .withColumn("result_tracks", F.explode($"split_by_distance_gap"))

df.select("result_tracks").show(truncate = false)
```

```
+-------------------------------------------------------------------------------------------------------------------+
|result_tracks                                                                                                      |
+-------------------------------------------------------------------------------------------------------------------+
|{"hasM":true,"paths":[[[-117.27,34.05,1.63345501e9],[-117.22,33.91,1.633456062e9]]]}                               |
|{"hasM":true,"paths":[[[-116.96,33.64,1.633457132e9]]]}                                                            |
|{"hasM":true,"paths":[[[-116.89,33.96,1.633575895e9],[-116.71,34.01,1.633576982e9],[-116.66,34.08,1.633577061e9]]]}|
|{"hasM":true,"paths":[[[-116.24,33.88,1.633575234e9],[-116.33,34.02,1.633576336e9]]]}                              |
+-------------------------------------------------------------------------------------------------------------------+
```

Version table

| Release | Notes |
|---|---|
| 1.0.0-beta | Python, SQL, and Scala functions introduced |