- 🔬 Data Science

- 🥠 Deep Learning and Pixel Classification

Introduction

In this notebook, we will use three geo-morphological characteristics, derived from a 5 m resolution DEM of one of the watersheds in Alaska, to extract streams. These three characteristics are the Topographic Position Index (TPI) derived from 3 cells, the Geomorphon landform, and the Topographic Wetness Index (TWI).
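For readers unfamiliar with two of these inputs, the sketch below shows their textbook definitions: TPI as a cell's elevation minus the mean elevation of its neighborhood, and TWI as ln(a / tan β), where a is the specific catchment area and β is the slope. It is a minimal NumPy illustration, not the tool that produced the rasters used in this notebook. Reading "3 cells" as a 3 x 3 window, the edge handling, and the function names `tpi_3x3` and `twi` are assumptions.

```python
import numpy as np

def tpi_3x3(dem: np.ndarray) -> np.ndarray:
    """Topographic Position Index: elevation minus the mean of the 8 surrounding cells.

    Assumes a 3 x 3 window; border cells are handled by replicating the edge (an assumption).
    """
    padded = np.pad(dem, 1, mode="edge")
    rows, cols = dem.shape
    neighbor_sum = np.zeros_like(dem, dtype=float)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # exclude the center cell from the neighborhood mean
            neighbor_sum += padded[1 + dr : 1 + dr + rows, 1 + dc : 1 + dc + cols]
    return dem - neighbor_sum / 8.0

def twi(specific_catchment_area: np.ndarray, slope_rad: np.ndarray) -> np.ndarray:
    """Topographic Wetness Index: ln(a / tan(beta)).

    A small floor on tan(beta) avoids division by zero on flat cells (an assumption).
    """
    tan_beta = np.maximum(np.tan(slope_rad), 1e-6)
    return np.log(specific_catchment_area / tan_beta)

# A tiny DEM with a valley running down the middle column.
dem = np.array([[10.0, 8.0, 10.0],
                [10.0, 7.0, 10.0],
                [10.0, 6.0, 10.0]])
print(tpi_3x3(dem))  # negative values in the middle column mark the valley
```

Negative TPI flags cells lower than their surroundings and high TWI flags cells that collect water, which is why both are plausible cues for stream channels.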

We created a composite out of these three characteristic rasters and used the Export Raster tool to scale the pixel values to 8-bit unsigned. Subsequently, the composite is exported as "Classified Tiles" to train the Multi-Task Road Extractor model provided by the ArcGIS API for Python to extract the streams.
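Conceptually, building the composite and converting it to 8-bit unsigned amounts to stacking the three rasters as bands and rescaling each band into the 0-255 range. The sketch below assumes a simple per-band linear min-max stretch; the exact stretch applied by the Export Raster tool may differ, and the function name `to_uint8_composite` and the sample values are hypothetical.

```python
import numpy as np

def to_uint8_composite(*bands: np.ndarray) -> np.ndarray:
    """Stack single-band rasters into (bands, rows, cols) and min-max scale each band to uint8.

    The linear min-max stretch is an assumption, not necessarily what Export Raster does.
    """
    stacked = np.stack(bands).astype(np.float64)
    out = np.empty(stacked.shape, dtype=np.uint8)
    for i, band in enumerate(stacked):
        lo, hi = band.min(), band.max()
        span = hi - lo if hi > lo else 1.0  # avoid dividing by zero on constant bands
        out[i] = np.round((band - lo) / span * 255).astype(np.uint8)
    return out

# Hypothetical 2 x 2 values for the three characteristic rasters.
tpi = np.array([[-2.0, 0.0], [1.0, 2.0]])
geomorphon = np.array([[1.0, 5.0], [6.0, 10.0]])
twi_band = np.array([[3.5, 7.0], [10.5, 14.0]])
composite = to_uint8_composite(tpi, geomorphon, twi_band)
print(composite.shape, composite.dtype)  # (3, 2, 2) uint8
```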

Before proceeding through this notebook, we advise going through the API Reference for the Multi-Task Road Extractor. It will help in understanding the Multi-Task Road Extractor's workflow in detail.

Necessary imports

```python
import os
import zipfile
from pathlib import Path

from arcgis.gis import GIS
from arcgis.learn import prepare_data, MultiTaskRoadExtractor, ConnectNet
```

Connect to your GIS

```python
gis = GIS("home")
ent_gis = GIS('https://pythonapi.playground.esri.com/portal', 'arcgis_python', 'amazing_arcgis_123')
```

Get the data for analysis

Here is the three-band composite representing the three geo-morphological characteristics, namely the Topographic Position Index, the Geomorphon landform, and the Topographic Wetness Index.

```python
composite_raster = ent_gis.content.get('43c4824dd4bf41ee886be5042262f192')
composite_raster
```

```python
BeaverCreek_Flowlines = ent_gis.content.get('2b198075e53748b4ab84197bc6ddd478')
BeaverCreek_Flowlines
```

Export training data

Export the training data using the 'Export Training Data For Deep Learning' tool (see the tool's documentation for details):

- Set 'composite_3bands_6BC_8BC_8bitunsigned' as the Input Raster.
- Set a location where you want to export the training data in the Output Folder parameter; it can be an existing folder, or the tool will create it for you.
- Set 'BeaverCreek_Flowlines' as the input to the Input Feature Class Or Classified Raster parameter.
- Set Class Field Value as 'FClass'.
- Set Image Format as 'TIFF format'.
- Tile Size X & Tile Size Y can be set to 256.
- Stride X & Stride Y can be set to 128.
- Select 'Classified Tiles' as the Meta Data Format.
- In the 'Environments' tab, set an optimum Cell Size. For this example, since we are performing the analysis on geo-morphological characteristics with 5 m resolution, we used '5' as the cell size.

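```python
import arcpy

# The Cell Size set in the 'Environments' tab corresponds to the cellSize environment.
with arcpy.EnvManager(cellSize=5):
    arcpy.ia.ExportTrainingDataForDeepLearning(
        "composite_3bands_6BC_8BC_8bitunsigned.tif",
        r"D:\Stream Extraction\Exported_3bands_composite_8bit_unsigned",
        "BeaverCreek_Flowlines",
        "TIFF", 256, 256, 128, 128,  # image format, tile size X/Y, stride X/Y
        "ONLY_TILES_WITH_FEATURES", "Classified_Tiles", 0, "FClass", 5, None, 0,
        "MAP_SPACE", "PROCESS_AS_MOSAICKED_IMAGE", "NO_BLACKEN", "FIXED_SIZE")
```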
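With 256 x 256 tiles and a 128-pixel stride, neighboring tiles overlap by half a tile, so each pixel away from the edges appears in several training chips. The sketch below enumerates tile origins along one axis to show the effect. It is a simplified illustration that assumes tiles must fit entirely inside the raster, which may not match how the tool handles raster edges; the count is also an upper bound, since 'ONLY_TILES_WITH_FEATURES' keeps only tiles that contain flowlines. `tile_origins` and `tile_count` are hypothetical helpers.

```python
def tile_origins(length: int, tile: int = 256, stride: int = 128) -> list[int]:
    """Start offsets of tiles along one axis, keeping only tiles that fit fully inside."""
    if length < tile:
        return []
    return list(range(0, length - tile + 1, stride))

def tile_count(rows: int, cols: int, tile: int = 256, stride: int = 128) -> int:
    """Number of full tiles over a rows x cols raster (a simplification of the tool)."""
    return len(tile_origins(rows, tile, stride)) * len(tile_origins(cols, tile, stride))

print(tile_origins(1024))      # [0, 128, 256, 384, 512, 640, 768]
print(tile_count(1024, 1024))  # 49
```

Halving the stride roughly quadruples the number of tiles, which adds training samples at the cost of export time and disk space.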

Alternatively, we have provided a subset of training data containing a few samples. You can use the data directly to run the experiments.

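```python
training_data = gis.content.get('3a95fd7a25d54898bddabf1989eea87d')
training_data
```

```python
filepath = training_data.download(file_name=training_data.name)
```

```python
# Extract the downloaded archive next to the zip file.
with zipfile.ZipFile(filepath, 'r') as zip_ref:
    zip_ref.extractall(Path(filepath).parent)
```

```python
# Path to the extracted folder: the zip file's path without its extension.
output_path = Path(os.path.splitext(filepath)[0])
```

Prepare data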

We will specify the path to our training data and a few hyperparameters.

- path: path of the folder containing the training data.
- chip_size: size, in pixels, of the image chips used to train the model.
- batch_size: number of images the model trains on in each step of an epoch. It depends directly on the memory of your graphics card; 8 worked for us on an 11 GB GPU.
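Because batch_size is the number of chips processed per step, it also determines how many steps make up one epoch. The sketch below shows that arithmetic; the chip count is a made-up number, not the size of this dataset, and whether the final partial batch is kept or dropped depends on the data loader, so both cases are shown. `steps_per_epoch` is a hypothetical helper.

```python
import math

def steps_per_epoch(num_chips: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of optimization steps needed to pass over num_chips once."""
    if drop_last:
        return num_chips // batch_size  # the final partial batch is skipped
    return math.ceil(num_chips / batch_size)

# Hypothetical number of training chips, for illustration only.
num_chips = 1000
for bs in (4, 8):
    print(bs, steps_per_epoch(num_chips, bs))
```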

```python
data = prepare_data(output_path, chip_size=512, batch_size=4)
```

Visualize a few samples from your training data

To get a sense of what the training data looks like, the show_batch() method of the prepared data object randomly picks a few training chips and visualizes them. It accepts the following parameter:

rows: number of rows of chips to display.

```python
data.show_batch()
```

Train the model

Load model architecture

arcgis.learn provides the MultiTaskRoadExtractor model for classifying linear features, which is based on a multi-task learning mechanism. More details about multi-task learning can be found in the API Reference for the Multi-Task Road Extractor.

```python
model = ConnectNet(data, mtl_model="hourglass")
```

Find an optimal learning rate

Learning rate is one of the most important hyperparameters in model training. Here, we explore a range of learning rates to guide us in choosing the best one. We will use the lr_find() method to find an optimum learning rate at which we can train a robust model.

```python
lr = model.lr_find()
```
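The idea behind such a learning-rate search can be shown on a toy problem: sweep learning rates that grow exponentially, measure the loss each one produces, and look for the region where the loss is lowest before it blows up. The sketch below uses a one-parameter quadratic loss and a single gradient step per rate. It illustrates the general range-test idea and is not how lr_find() is implemented internally; the sweep bounds and the names `lr_sweep` and `loss_after_one_step` are assumptions.

```python
import numpy as np

def lr_sweep(start: float = 1e-4, end: float = 10.0, num: int = 100) -> np.ndarray:
    """Exponentially spaced learning rates between start and end."""
    return np.logspace(np.log10(start), np.log10(end), num)

def loss_after_one_step(lr: float, w0: float = 0.0, target: float = 3.0) -> float:
    """Toy loss (w - target)^2 evaluated after one gradient-descent step from w0."""
    grad = 2.0 * (w0 - target)
    w1 = w0 - lr * grad
    return (w1 - target) ** 2

lrs = lr_sweep()
losses = np.array([loss_after_one_step(lr) for lr in lrs])
best = lrs[np.argmin(losses)]
print(f"lowest toy loss at lr ~ {best:.3f}")  # near 0.5 for this quadratic
```

Too small a rate barely reduces the loss and too large a rate overshoots it, which is the trade-off the learning-rate plot helps us judge.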

Fit the model

To train the model, we use the fit() method. To start, we will train our model for 50 epochs. An epoch is one complete pass of the model over the entire training set. The fit() method accepts the following parameters, of which we pass only epochs and lr:

- epochs: number of cycles of training on the data.
- lr: learning rate to be used for training the model.
- wd: weight decay to be used.
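Weight decay shrinks the weights slightly at every update, which discourages overly large weights and helps against overfitting. A minimal sketch of a plain SGD step with an L2-style weight-decay term follows. The optimizer used inside fit() may apply weight decay differently (for example, in decoupled form), so treat this only as an illustration of the concept; `sgd_step` is a hypothetical helper.

```python
import numpy as np

def sgd_step(w: np.ndarray, grad: np.ndarray, lr: float, wd: float) -> np.ndarray:
    """One SGD update with L2-style weight decay: w <- w - lr * (grad + wd * w)."""
    return w - lr * (grad + wd * w)

w = np.array([1.0, -2.0])
grad = np.array([0.5, 0.0])
print(sgd_step(w, grad, lr=0.1, wd=0.0))   # gradient step only
print(sgd_step(w, grad, lr=0.1, wd=0.01))  # same step, weights pulled slightly toward zero
```

Note that the second weight, whose gradient is zero, still moves toward zero when wd is non-zero.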

```python
model.fit(50, lr)
```

| epoch | train_loss | valid_loss | accuracy | miou | dice | time |
|---|---|---|---|---|---|---|
| 0 | 0.558766 | 0.534709 | 0.922076 | 0.752023 | 0.691977 | 06:12 |
| 1 | 0.498751 | 0.492464 | 0.924021 | 0.753733 | 0.688887 | 06:14 |
| 2 | 0.437932 | 0.436872 | 0.927699 | 0.774262 | 0.728609 | 06:15 |
| 3 | 0.426738 | 0.417698 | 0.929065 | 0.780704 | 0.742425 | 06:16 |
| 4 | 0.397952 | 0.402251 | 0.932954 | 0.791682 | 0.756385 | 06:22 |
| 5 | 0.384675 | 0.417134 | 0.929547 | 0.769949 | 0.713407 | 06:16 |
| 6 | 0.343937 | 0.396817 | 0.936183 | 0.799815 | 0.762606 | 06:14 |
| 7 | 0.303077 | 0.360951 | 0.943892 | 0.823877 | 0.797331 | 06:13 |
| 8 | 0.273635 | 0.346368 | 0.945677 | 0.832608 | 0.816316 | 06:12 |
| 9 | 0.236909 | 0.358429 | 0.947444 | 0.834744 | 0.814564 | 06:11 |
| 10 | 0.210300 | 0.294313 | 0.960634 | 0.871112 | 0.853038 | 06:11 |
| 11 | 0.196187 | 0.276327 | 0.964296 | 0.881324 | 0.861914 | 06:10 |
| 12 | 0.170332 | 0.262838 | 0.968050 | 0.890794 | 0.866844 | 06:17 |
| 13 | 0.154012 | 0.231741 | 0.973662 | 0.910223 | 0.892401 | 06:08 |
| 14 | 0.145626 | 0.281105 | 0.965028 | 0.879789 | 0.854570 | 06:09 |
| 15 | 0.128396 | 0.203311 | 0.977936 | 0.923766 | 0.911519 | 06:10 |
| 16 | 0.120995 | 0.197292 | 0.979487 | 0.928456 | 0.912996 | 06:10 |
| 17 | 0.113916 | 0.199937 | 0.979369 | 0.928337 | 0.912269 | 06:10 |
| 18 | 0.104517 | 0.171265 | 0.983606 | 0.943175 | 0.937693 | 06:09 |
| 19 | 0.090587 | 0.170476 | 0.985622 | 0.949636 | 0.941140 | 06:10 |
| 20 | 0.087183 | 0.183974 | 0.982769 | 0.939793 | 0.930118 | 06:10 |
| 21 | 0.085135 | 0.164984 | 0.986016 | 0.951064 | 0.942980 | 06:10 |
| 22 | 0.076413 | 0.155505 | 0.986802 | 0.954121 | 0.949896 | 06:09 |
| 23 | 0.069336 | 0.168183 | 0.984552 | 0.945256 | 0.938607 | 06:09 |
| 24 | 0.067083 | 0.204512 | 0.980071 | 0.931827 | 0.921949 | 06:10 |
| 25 | 0.067790 | 0.181579 | 0.983572 | 0.943036 | 0.931991 | 06:08 |
| 26 | 0.057953 | 0.144368 | 0.990169 | 0.965332 | 0.963942 | 06:11 |
| 27 | 0.058147 | 0.144659 | 0.987878 | 0.957173 | 0.944653 | 06:10 |
| 28 | 0.049824 | 0.133009 | 0.991482 | 0.969918 | 0.968755 | 06:09 |
| 29 | 0.044723 | 0.141182 | 0.991226 | 0.969011 | 0.962455 | 06:10 |
| 30 | 0.042276 | 0.130206 | 0.992101 | 0.971694 | 0.966720 | 06:09 |
| 31 | 0.039241 | 0.137659 | 0.992248 | 0.972494 | 0.967590 | 06:10 |
| 32 | 0.037055 | 0.147308 | 0.992527 | 0.973441 | 0.971456 | 06:11 |
| 33 | 0.034351 | 0.132264 | 0.992971 | 0.975372 | 0.974691 | 06:07 |
| 34 | 0.030599 | 0.137769 | 0.993346 | 0.976576 | 0.975872 | 06:10 |
| 35 | 0.029566 | 0.141984 | 0.993901 | 0.978461 | 0.974390 | 06:09 |
| 36 | 0.026460 | 0.142756 | 0.994096 | 0.979001 | 0.974653 | 06:10 |
| 37 | 0.022454 | 0.150379 | 0.994556 | 0.980506 | 0.976769 | 06:10 |
| 38 | 0.020450 | 0.154217 | 0.994858 | 0.981657 | 0.977751 | 06:09 |
| 39 | 0.018612 | 0.160242 | 0.994938 | 0.981997 | 0.979882 | 06:10 |
| 40 | 0.016824 | 0.168730 | 0.994849 | 0.981717 | 0.978272 | 06:09 |
| 41 | 0.015702 | 0.178581 | 0.995122 | 0.982692 | 0.980355 | 06:13 |
| 42 | 0.014045 | 0.192932 | 0.995007 | 0.982301 | 0.979872 | 06:11 |
| 43 | 0.012625 | 0.200434 | 0.995330 | 0.983468 | 0.981752 | 06:08 |
| 44 | 0.011161 | 0.210173 | 0.995546 | 0.984242 | 0.982513 | 06:09 |
| 45 | 0.010115 | 0.220612 | 0.995600 | 0.984434 | 0.982565 | 06:09 |
| 46 | 0.009399 | 0.230511 | 0.995631 | 0.984542 | 0.982554 | 06:10 |
| 47 | 0.008947 | 0.236833 | 0.995650 | 0.984608 | 0.982620 | 06:08 |
| 48 | 0.008720 | 0.239371 | 0.995674 | 0.984685 | 0.982757 | 06:09 |
| 49 | 0.008618 | 0.239266 | 0.995696 | 0.984761 | 0.982801 | 06:09 |

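As the table shows, the training loss decreased steadily from 0.559 to 0.009, while the validation loss fell from 0.535 to its minimum of 0.130 at epoch 30 and then rose to about 0.239 by epoch 49, even as accuracy, mIoU, and dice continued to improve slightly. This suggests that the model has learnt well, but began to overfit in the later epochs. Let us do an accuracy assessment to validate our observation.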
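To locate the epoch with the lowest validation loss, a natural candidate for an earlier stopping point, the valid_loss column of the training log above can be scanned directly. The sketch below copies those 50 values verbatim; `best_epoch` is a hypothetical helper, not part of arcgis.learn.

```python
# valid_loss column from the training log, epochs 0-49.
valid_loss = [
    0.534709, 0.492464, 0.436872, 0.417698, 0.402251,
    0.417134, 0.396817, 0.360951, 0.346368, 0.358429,
    0.294313, 0.276327, 0.262838, 0.231741, 0.281105,
    0.203311, 0.197292, 0.199937, 0.171265, 0.170476,
    0.183974, 0.164984, 0.155505, 0.168183, 0.204512,
    0.181579, 0.144368, 0.144659, 0.133009, 0.141182,
    0.130206, 0.137659, 0.147308, 0.132264, 0.137769,
    0.141984, 0.142756, 0.150379, 0.154217, 0.160242,
    0.168730, 0.178581, 0.192932, 0.200434, 0.210173,
    0.220612, 0.230511, 0.236833, 0.239371, 0.239266,
]

def best_epoch(losses: list[float]) -> tuple[int, float]:
    """Return (epoch index, loss) of the minimum validation loss."""
    epoch = min(range(len(losses)), key=losses.__getitem__)
    return epoch, losses[epoch]

epoch, loss = best_epoch(valid_loss)
print(epoch, loss)  # 30 0.130206
```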

Accuracy Assessment

To assess accuracy, we can compute the mIOU (mean Intersection over Union) of the model we just trained by calling model.mIOU(). It takes the following parameter:

mean: if False, returns the IoU of each class; otherwise, returns the mean IoU of all classes combined.

```python
model.mIOU()
```

{'0': 0.9947645938447383, 1: 0.9746375491545367}

Class 1 (the streams) has an IoU of about 0.97 and class 0 (the background) about 0.99, which indicates that the model has learnt well. Let us now see its results on the validation set.
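For reference, the IoU of a class is the number of pixels where both prediction and ground truth equal that class, divided by the number of pixels where either does. A minimal NumPy sketch for a binary stream/background mask follows; it shows the metric itself, not how model.mIOU() accumulates it over the validation set, and the helper names and toy masks are hypothetical.

```python
import numpy as np

def class_iou(pred: np.ndarray, truth: np.ndarray, cls: int) -> float:
    """Intersection over union of the pixels labelled `cls` in pred and truth."""
    p, t = pred == cls, truth == cls
    union = np.logical_or(p, t).sum()
    return float(np.logical_and(p, t).sum() / union) if union else float("nan")

def per_class_iou(pred: np.ndarray, truth: np.ndarray, classes=(0, 1)) -> dict:
    """IoU for each class label, keyed by the label."""
    return {c: class_iou(pred, truth, c) for c in classes}

truth = np.array([[0, 1, 1, 0],
                  [0, 1, 1, 0]])
pred = np.array([[0, 1, 0, 0],
                 [0, 1, 1, 1]])
ious = per_class_iou(pred, truth)
print(ious)                           # {0: 0.6, 1: 0.6}
print(sum(ious.values()) / len(ious))  # 0.6
```

Applied to the two class values reported above, the same averaging gives (0.99476 + 0.97464) / 2 ≈ 0.985.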

Visualize results in validation set

The code below will pick a few random samples and show us the ground truth and the respective model predictions side by side. This allows us to validate the results of our model in the notebook itself. Once satisfied, we can save the model and use it further in our workflow. The model.show_results() method can be used to display the detected streams.

```python
model.show_results(rows=4)
```

Save the model

We will now save the model we just trained as a Deep Learning Package ('.dlpk' format). The Deep Learning Package is the standard format used to deploy deep learning models on the ArcGIS platform.

We will use the save() method to save the model; by default, it will be saved to a 'models' folder inside our training data folder. Setting publish=True also publishes the package as an item to the GIS passed through the gis parameter.

```python
model.save('stream_ext_50e', overwrite=True, publish=True, gis=gis)
```

Published DLPK Item Id: 2a8b0ca302ca42d9a979b5e28bebc6d7

WindowsPath('D:/data/stream_ext_50e')

Model inference

The saved model can be used to classify streams with the Classify Pixels Using Deep Learning tool, available in ArcGIS Pro and ArcGIS Enterprise.

```python
import arcpy

# Classify the composite with the trained model; the tool returns a Raster object.
out_classified_raster = arcpy.ia.ClassifyPixelsUsingDeepLearning(
    "composite_3bands_6BC_8BC_8bitunsigned.tif",
    r"D:/stream_ext_20e/stream_ext_20e.dlpk",
    "padding 64;batch_size 4;predict_background True;return_probability_raster False;threshold 0.5",
    "PROCESS_AS_MOSAICKED_IMAGE",
    None)
out_classified_raster.save(r"\Documents\ArcGIS\Packages\Stream Extraction demo_9ee52d\p20\stream_identification.gdb\detected_streams")
```
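The threshold 0.5 argument controls how per-pixel stream probabilities become class labels. Assuming the model yields a stream probability for each pixel, the decision amounts to the comparison sketched below. The probability values are made up, whether the tool uses >= or > exactly at the threshold is an assumption, and `probabilities_to_classes` is a hypothetical helper.

```python
import numpy as np

def probabilities_to_classes(prob_stream: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Label a pixel 1 (stream) where its stream probability reaches the threshold, else 0."""
    return (prob_stream >= threshold).astype(np.uint8)

prob = np.array([[0.10, 0.55, 0.90],
                 [0.49, 0.50, 0.20]])  # made-up probabilities
print(probabilities_to_classes(prob))
print(probabilities_to_classes(prob, threshold=0.8))
```

Raising the threshold keeps only the most confident stream pixels, trading recall for precision.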

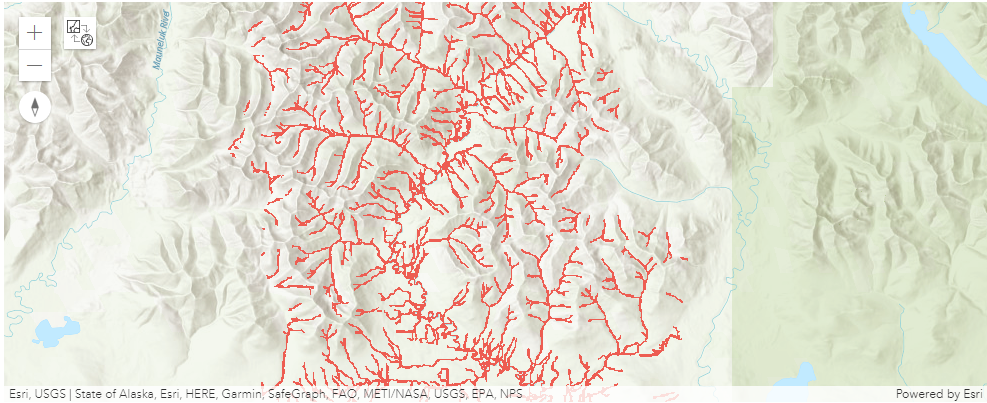

The output of the model is a layer of detected streams which is shown below:

```python
extracted_streams = ent_gis.content.get('389772c5dbb745b79953dc7ee0dc5876')
extracted_streams
```

```python
map1 = gis.map()
map1.add_layer(extracted_streams)
map1
```

```python
map1.zoom_to_layer(extracted_streams)
```

Conclusion

The notebook presents the workflow showing how easily you can combine traditional morphological landform characteristics with deep learning methodologies using arcgis.learn to detect streams.